“Welcome to the human brain, the cathedral of complexity.”—Peter Coveney and Roger Highfield

It’s inevitable that a powerful problem-solving machine like the human brain would turn increasing attention to one of the greatest puzzles of all: itself.

Ironically, it may be impossible for us to decipher many of the secrets of our own brains without significant help from the digital tools we’ve created. Advances in machine learning and neuroimaging, in combination with visualizations that leverage human cognitive and perceptual strengths, are paving the way toward a far better understanding of our brains.

There are many ways to look at the brain, but that variety itself presents a challenge: the process of analyzing different kinds of data can be fragmented and cumbersome. How can designers help re-assemble disparate data into the most meaningful and complete picture? How can we apply design to help the brain better understand itself?

Gaining new insights from data is not just about better collection tools and techniques; it also depends on the ways we assemble data and how we enable users to interact with the different elements.

This article will illustrate some ideas drawn from two complementary brain visualization concept projects:

- One project involved a collaboration among computer scientists at Lawrence Berkeley National Lab (LBL), neuroscientists at UCSF, and designers at the LA-based design shop Oblong Industries. It focused on age-related dementia and was presented at UCSF’s OME Precision Medicine Summit in May 2013.

- The other project was a UI concept demo exploring Traumatic Brain Injury (TBI) that Jeff Chang, an ER radiologist, and I presented at zCon, a 3D developers conference hosted by zSpace in April 2013. We named our system the “NeuroElectric and Anatomic Locator,” or “N.E.A.A.L.”


Despite differences in goals and approaches, some common threads run through both projects. Discussed below are just a few themes that emerged. While these ideas were used in a very specific context, they can also apply well beyond the subject matter.

Navigating Investigative Pathways with Combinations of 2D and 3D Visualizations

“Since the brain is unlike any other structure in the known universe, it seems reasonable to expect that our understanding of its functioning … will require approaches that are drastically different from the way we understand other physical systems.”—Richard M. Restak

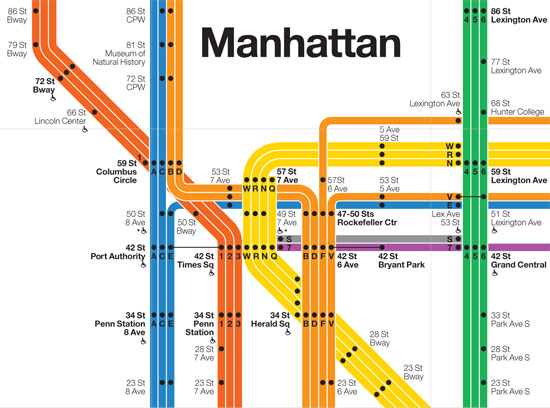

As we navigate daily life, we regularly shift our attention between different perspectives and levels of abstraction. Sometimes a flat, stylized transit map is just the ticket for figuring out where and when we need to travel.

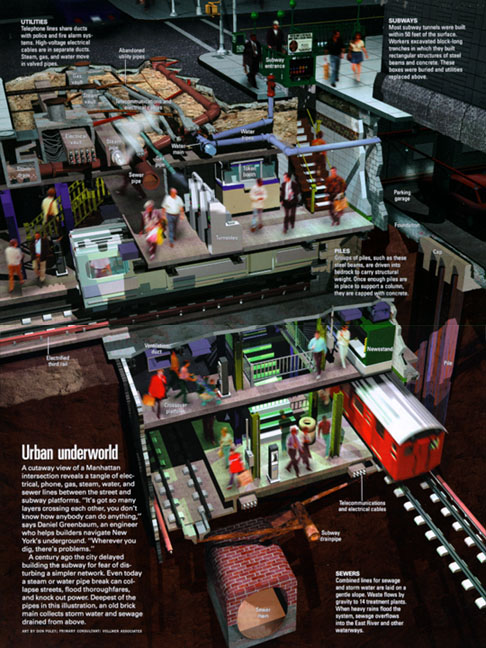

Other times, a more dimensional and literal representation is necessary to get a clearer and more complete understanding of a place.

Subway image courtesy National Geographic.

Each view can be useful, and sometimes the views are complementary. The same idea applies to neurobiology research and medicine, which use many formats to represent widely disparate aspects of our brains. For example, the brain has a vast number of connections that can be at least partially visualized with 2D or 3D network graphs. These networks have attributes similar to transit maps, with express lines, local stops, and transfer stations. In the brain, however, every stop links to several thousand others. The traffic patterns across these networks are as intimately connected to physical brain structure as transit lines are tied to physical geography, and these anatomical features are best represented by 3D visualizations.
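The transit-map analogy can be made concrete with a tiny graph sketch. Assuming connections are stored as an undirected adjacency list, the nodes with the most direct links play the role of transfer stations. The node names and edges below are hypothetical placeholders, not real brain connectivity data.

```python
from collections import defaultdict

# Hypothetical toy network: node names and edges are placeholders, not real connectivity data.
EDGES = [
    ("A", "B"), ("A", "C"), ("A", "D"), ("A", "E"),
    ("B", "C"), ("D", "E"), ("E", "F"), ("F", "G"),
]


def build_graph(edges):
    """Build an undirected adjacency list: each connection is recorded in both directions."""
    graph = defaultdict(set)
    for u, v in edges:
        graph[u].add(v)
        graph[v].add(u)
    return graph


def find_hubs(graph, top_n=2):
    """Return the top_n nodes by degree (number of direct connections), ties broken by name."""
    ranked = sorted(graph, key=lambda node: (-len(graph[node]), node))
    return [(node, len(graph[node])) for node in ranked[:top_n]]


graph = build_graph(EDGES)
print(find_hubs(graph))  # -> [('A', 4), ('E', 3)]
```

Degree is the simplest notion of a hub; real connectome analyses typically weight edges by connection strength as well.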

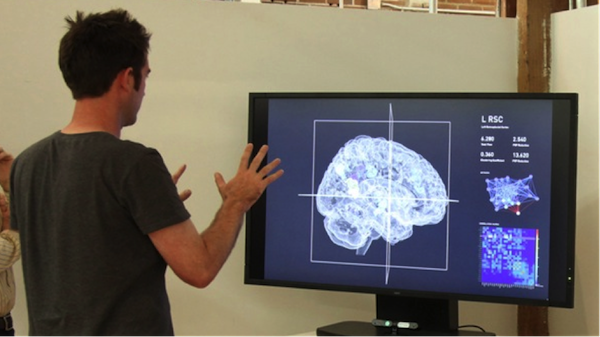

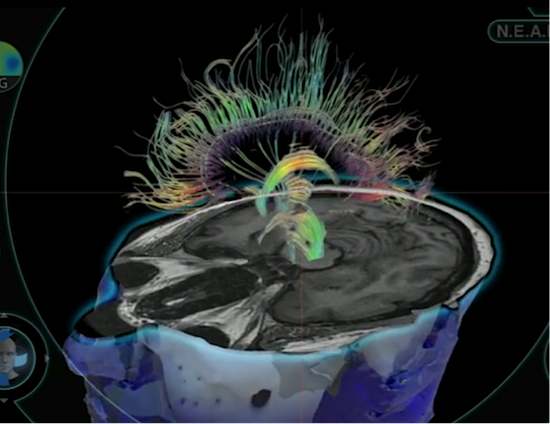

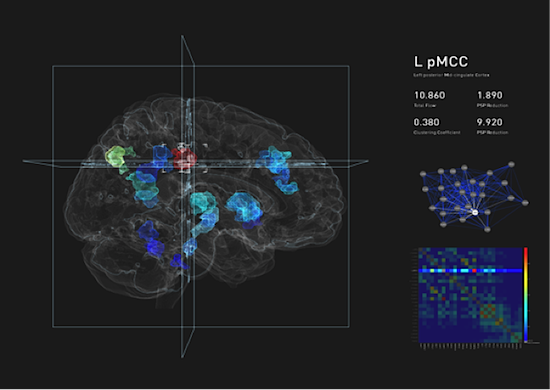

The patterns of connections and activity reveal a great deal of information that can be useful for predicting the course of conditions like dementia and Alzheimer’s disease. The team from LBL/UCSF/Oblong developed a gesture-based interface with 2D and 3D elements. The system combines network diagrams showing normal and problematic traffic patterns with a map of the brain’s physical structure. In the picture below, Oblong Industries designer John Carpenter uses gestural control to pull out an area of highlighted activity from its surroundings within a 3D mesh representation of the brain.

Oblong Industries software engineer Alessandro Valli says, “Instead of putting stuff in a screen or window, we put stuff in space and then add screens that act like portholes.”

“Years ago, after seeing Google Earth for the first time, my perception of the world changed,” Valli recalls. “Even though it was still a mouse and keyboard experience and the content was the same, that freedom of navigation really opened up my mind about geography.” Good interactions with blended 2D and 3D systems require thoughtful transitions between the two.

Oblong’s chief scientist John Underkoffler notes, “It is possible to locate really important meaning in data within the transitions. It’s important to be able to see the steps that lead from one process to another.” Underkoffler’s innovative work includes designing the computer interfaces that appeared in the film Minority Report.
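One way to keep the steps between views visible is to animate each node from its position in a flat 2D graph layout to its 3D anatomical coordinate, rather than cutting abruptly between the two. The sketch below assumes every node has both a 2D layout position and a 3D position; `transition_frames` and the smoothstep easing curve are illustrative choices, not Oblong’s implementation.

```python
def smoothstep(t):
    """Ease-in/ease-out curve on [0, 1]: motion starts and ends gently."""
    t = max(0.0, min(1.0, t))
    return t * t * (3.0 - 2.0 * t)


def lerp(a, b, t):
    """Linear interpolation between two points of equal dimension."""
    return tuple(p + (q - p) * t for p, q in zip(a, b))


def transition_frames(pos_2d, pos_3d, n_frames):
    """Positions of one node as it moves from a 2D graph layout to 3D anatomy."""
    if n_frames < 2:
        raise ValueError("need at least a start and an end frame")
    start = (pos_2d[0], pos_2d[1], 0.0)  # place the flat layout on the z = 0 plane
    return [lerp(start, pos_3d, smoothstep(i / (n_frames - 1))) for i in range(n_frames)]


# Hypothetical coordinates for a single node
for frame in transition_frames((0.0, 0.0), (10.0, 20.0, 30.0), n_frames=5):
    print(frame)
```

Because every intermediate frame is a valid position, a user can pause mid-transition and still see how a graph node relates to its anatomical location.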

Navigating Investigative Paths with “N.E.A.A.L.”

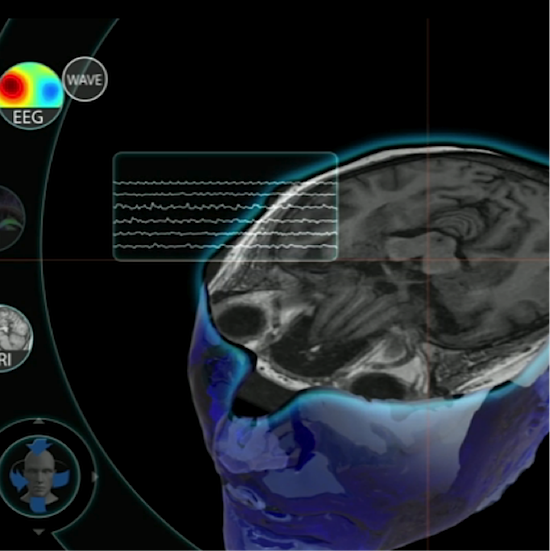

The physical forces involved in a traumatic head injury event can produce many different aftereffects in the brain. The internal damage can be obscure and the chain of causality complex. How can people ever hope to trace the path from symptoms to sources? There are a variety of telltale signs and clues, including aberrant electrical patterns and changes in the diffusion rates of water in brain tissue. These clues can point to specific damage in different neural pathways. Blending various 2D and 3D visualizations that interweave the data into meaningful patterns and relationships can speed and enhance the investigative process. Useful insights can be derived from the transitions between the different views.
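Changes in water diffusion are usually quantified from a diffusion tensor fitted at each voxel. Two standard summary measures are mean diffusivity (MD), the average of the tensor’s three eigenvalues, and fractional anisotropy (FA), which runs from 0 (diffusion equal in all directions) toward 1 (diffusion along a single direction); coherent white matter tracts show higher FA. A minimal sketch, assuming the eigenvalues have already been computed; the example values are made up for illustration.

```python
import math


def diffusion_metrics(l1, l2, l3):
    """Return (MD, FA) for the three eigenvalues of a diffusion tensor."""
    md = (l1 + l2 + l3) / 3.0
    spread = (l1 - l2) ** 2 + (l2 - l3) ** 2 + (l3 - l1) ** 2
    magnitude = l1 ** 2 + l2 ** 2 + l3 ** 2
    # FA = sqrt(1/2) * sqrt(spread) / sqrt(magnitude); defined as 0 when all eigenvalues are 0
    fa = math.sqrt(0.5 * spread / magnitude) if magnitude > 0 else 0.0
    return md, fa


# Made-up eigenvalues (mm^2/s), for illustration only
print(diffusion_metrics(1.0e-3, 1.0e-3, 1.0e-3))  # equal in all directions: FA = 0
print(diffusion_metrics(1.7e-3, 0.3e-3, 0.3e-3))  # strongly directional: higher FA
```

Mapping FA along a fiber tract is one way the “flying over” a tract described below could be colored to highlight candidate damage.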

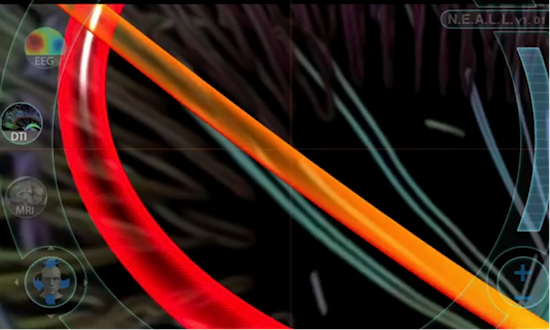

N.E.A.A.L. brings together different kinds of data and formats, from imaging to cognitive assessments, and stitches them together so that a user can quickly and easily go from viewing a psychological assessment document to “flying over” a white matter fiber tract looking for potential physical sources of the problem.

In one scenario involving N.E.A.A.L., Chang and I explored a case in which a researcher was investigating a soldier with traumatic brain injury who was suffering from subsequent episodes of epilepsy and depression. The images below are screen shots of an early video sketch showing the researcher exploring an investigative pathway through a series of partially overlaid brain imaging modalities to find the potential origin of depression associated with TBI. The experience is designed to be fluid and effortless, despite the rapid transitions of imaging modalities, image orientation, and scale.
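Partially overlaying one imaging modality on another is, at its simplest, alpha blending. This sketch assumes the two slices are already co-registered (aligned to the same pixel grid) and normalized to the 0 to 1 range; the 2x2 arrays are hypothetical stand-ins for real image data.

```python
def overlay(base, layer, alpha):
    """Blend two co-registered grayscale slices of the same shape.

    alpha = 0 shows only the base image; alpha = 1 shows only the overlay.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return [
        [(1.0 - alpha) * b + alpha * o for b, o in zip(base_row, layer_row)]
        for base_row, layer_row in zip(base, layer)
    ]


# Hypothetical 2x2 slices from two modalities
anatomical = [[0.2, 0.4], [0.6, 0.8]]
diffusion = [[1.0, 0.0], [0.0, 1.0]]
print(overlay(anatomical, diffusion, alpha=0.5))
```

Animating `alpha` rather than swapping images is one simple way to keep a change of modality fluid.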

Ultimately, we want N.E.A.A.L. to offer an experience that includes voice and gestural input. Using voice input for actions like changing or overlaying different kinds of images would be helpful in allowing researchers to keep their focus on the brain itself. There should be some visual confirmation that the system “understood” the command, but that’s best kept in the periphery.

Voice commands make sense for certain actions, but not all. Verbally requesting N.E.A.A.L. to display a particular type of EEG, MRI, or other data set works well. However, gestural input—whether with pointing devices or highly accurate and precise motion capture—can provide effortless and effective ways to interact with 2D and 3D visualizations of the brain.

There are many instances where it’s far easier for people to show than to tell what they want the system to do. Instead of having the system figure out what “a little more to the right” might mean for any particular person, the user can simply gesture to indicate the “little more” that they need, akin to moving a mouse or adjusting a ZeroN element from MIT.
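The division of labor described above, voice for discrete requests and gesture for continuous adjustment, can be sketched as two small functions. Everything here is hypothetical: the command vocabulary, the millimeter units, the gain, and the dead zone are illustrative assumptions rather than N.E.A.A.L.’s actual design, and a real system would receive text from a speech recognizer and displacements from a tracker.

```python
# Hypothetical vocabulary; a real system would receive text from a speech recognizer.
VERBS = {"show", "overlay"}
MODALITIES = {"eeg", "mri", "dti", "fmri"}


def parse_voice_command(text):
    """Map a short phrase such as 'overlay DTI' to a discrete (verb, modality) action.

    Returns None for anything outside the vocabulary, e.g. vague spatial requests.
    """
    words = text.lower().split()
    if len(words) == 2 and words[0] in VERBS and words[1] in MODALITIES:
        return words[0], words[1]
    return None


def gesture_to_rotation(hand_dx_mm, gain_deg_per_mm=0.5, dead_zone_mm=2.0):
    """Map continuous horizontal hand movement (mm) to a rotation (degrees).

    Movements inside the dead zone are treated as jitter and ignored; beyond it,
    rotation grows linearly, much like mouse movement maps to cursor movement.
    """
    if abs(hand_dx_mm) <= dead_zone_mm:
        return 0.0
    direction = 1.0 if hand_dx_mm > 0 else -1.0
    return direction * (abs(hand_dx_mm) - dead_zone_mm) * gain_deg_per_mm


print(parse_voice_command("Overlay DTI"))                 # -> ('overlay', 'dti')
print(parse_voice_command("a little more to the right"))  # -> None
print(gesture_to_rotation(12.0))                          # -> 5.0
```

Note that the vague spatial request falls through the voice parser by design; that “little more” is exactly what the gesture mapping handles.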

Relating Abstract Data to Anatomical Features

In the screen shot above from the LBL/UCSF/Oblong project, the colored blobs within the brain representation on the left reflect patterns of activity relative to the brain’s anatomical landmarks, reminiscent of a street map showing traffic patterns. The heat map in the lower right shows the connection strength between elements.
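A common way to produce a connection-strength heat map in functional connectivity work is to correlate the activity signals of every pair of regions. The sketch below uses Pearson correlation; it is an illustration of that general approach, not necessarily the computation behind the screen shot, and the three region signals are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length activity signals."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if sd_x == 0 or sd_y == 0:
        raise ValueError("correlation is undefined for a constant signal")
    return cov / (sd_x * sd_y)


def connectivity_matrix(signals):
    """Return region names and the matrix of pairwise correlations.

    Each cell corresponds to one square of a connectivity heat map.
    """
    names = sorted(signals)
    return names, [[pearson(signals[a], signals[b]) for b in names] for a in names]


# Hypothetical activity traces for three regions
signals = {
    "R1": [1.0, 2.0, 3.0, 4.0],
    "R2": [2.0, 4.0, 6.0, 8.0],  # rises with R1
    "R3": [4.0, 3.0, 2.0, 1.0],  # falls as R1 rises
}
names, matrix = connectivity_matrix(signals)
for name, row in zip(names, matrix):
    print(name, [round(v, 2) for v in row])
```

Coloring each cell by its value, for example warm for strong positive and cool for strong negative correlation, yields the heat map.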

UCSF neuroscientist Jesse Brown researches the functional connectivity of the brain. He looks at patterns of activity, represented as networks, in healthy brains and compares them with the patterns in brains affected by degenerative diseases. “These networks map pretty well to disease-specific patterns of activity,” Brown says. One of the central questions is determining where the problem originates. Brown continues: “Network diagrams are good at the population level, at predicting the patterns of spread from a source.”
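One simple, widely used way to model spread from a source on a network is graph diffusion with the graph Laplacian. This is a swapped-in illustration of the general idea, not Brown’s published method; the four-region chain and the `beta` rate are hypothetical.

```python
def diffuse(adjacency, seed, beta=0.1, steps=10):
    """Spread a unit amount from `seed` over an undirected network.

    Each step applies x <- x - beta * L x, where L is the graph Laplacian, so every
    node moves toward its neighbors' values. Keeping beta below 1 / (largest node
    degree) keeps all values non-negative.
    """
    n = len(adjacency)
    x = [0.0] * n
    x[seed] = 1.0
    for _ in range(steps):
        x = [
            x[i] - beta * sum(adjacency[i][j] * (x[i] - x[j]) for j in range(n))
            for i in range(n)
        ]
    return x


# Hypothetical chain of four regions: 0 - 1 - 2 - 3
chain = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]
print([round(v, 3) for v in diffuse(chain, seed=0)])
```

The total amount is conserved while it spreads, and regions closer to the seed in the network end up with more of it, which is the intuition behind using network structure to predict spread.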


Brown and his colleagues are exploring how to diagram these patterns at the individual subject level. He says many researchers represent this kind of activity on a graph and then do analyses to find centers of activity and points of connection. This approach to network analysis and prediction, he says, is somewhat like “playing Six Degrees of Kevin Bacon.”
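The “Six Degrees of Kevin Bacon” idea corresponds to shortest-path distance counted in hops, which breadth-first search computes directly. A minimal sketch on a hypothetical toy graph stored as an adjacency dictionary:

```python
from collections import deque


def degrees_of_separation(graph, start):
    """Breadth-first search: number of hops from `start` to every reachable node."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph.get(node, ()):
            if neighbor not in dist:  # first visit is always via a shortest path
                dist[neighbor] = dist[node] + 1
                queue.append(neighbor)
    return dist


# Hypothetical toy network
graph = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A"],
    "D": ["B", "E"],
    "E": ["D"],
    "F": [],  # isolated node: unreachable from A, so it is absent from the result
}
print(degrees_of_separation(graph, "A"))  # -> {'A': 0, 'B': 1, 'C': 1, 'D': 2, 'E': 3}
```

Measures such as closeness centrality are built on these hop counts to identify nodes that sit near the center of the network.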

Although network diagrams are useful, Brown says, they are also so abstract that you can’t always tell how they relate to the corresponding anatomical features. With a multifaceted visualization system, however, “we can point to an anatomical feature and then connect that to a network graph and compare the two … they are all stepping stones.”

Optimizing the Interplay Between Human Intelligence and Machine Learning

“There are billions of neurons in our brains, but what are neurons? Just cells. The brain has no knowledge until connections are made between neurons. All that we know, all that we are, comes from the way our neurons are connected.”—Tim Berners-Lee

Daniela Ushizima, a research scientist at the Lawrence Berkeley National Lab, says, “Mapping the brain’s connectivity means dealing with the representation of tens of billions of neurons modeled as nodes in a network.” Given the scale and complexity, she adds, “We need to push for better network graph algorithms to answer questions about, for example, patterns of activity.”
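A quick back-of-the-envelope calculation shows why representation matters at this scale. The sketch compares a dense adjacency matrix (one entry for every possible pair of neurons) with a sparse list that stores only existing connections. The node count of 10^10 is the low end of “tens of billions,” and 1,000 connections per neuron is an assumed order of magnitude; both are illustrative inputs, not measurements.

```python
def adjacency_entries(n_nodes, avg_connections):
    """Entries needed to store a network two ways."""
    dense = n_nodes ** 2                # every possible pair, connected or not
    sparse = n_nodes * avg_connections  # only the connections that exist
    return dense, sparse


n = 10 ** 10  # assumption: low end of "tens of billions" of neurons
k = 1_000     # assumption: order of magnitude of connections per neuron
dense, sparse = adjacency_entries(n, k)
print(f"dense:  {dense:.1e} entries")   # -> dense:  1.0e+20 entries
print(f"sparse: {sparse:.1e} entries")  # -> sparse: 1.0e+13 entries
print(f"ratio:  {dense // sparse:,}x")  # -> ratio:  10,000,000x
```

Even the sparse form is enormous, which is part of why better graph algorithms, not just more storage, are needed.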

Ushizima frames the question with a social network graph analogy: “What is the most ‘popular’ or influential group of neurons in a network?” For this kind of massive network and connectivity analysis, Ushizima thinks data mining will play an increasingly important role in brain research. However, she also notes the critical role that human perception, thought, and judgment will play in the process. “The fact is, people can use visualizations to help ensure that they are mining the right features. Visualizations enable you to use your domain expertise to seek the most likely features so that the data mining algorithms will actually work.”
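One standard way to ask which node is most “influential” in a directed network is PageRank, the same family of measure used to rank web pages. This is a swapped-in example of the idea, not necessarily what Ushizima’s group uses, and the four-node graph is hypothetical.

```python
def pagerank(graph, damping=0.85, iterations=50):
    """Power-iteration PageRank over a directed graph {node: [targets]}.

    Every target must also appear as a key in `graph`.
    """
    nodes = list(graph)
    n = len(nodes)
    rank = {v: 1.0 / n for v in nodes}
    for _ in range(iterations):
        new = {v: (1.0 - damping) / n for v in nodes}
        for v in nodes:
            targets = graph[v]
            if targets:
                share = damping * rank[v] / len(targets)
                for t in targets:
                    new[t] += share
            else:
                # dangling node: spread its rank evenly over all nodes
                for t in nodes:
                    new[t] += damping * rank[v] / n
        rank = new
    return rank


# Hypothetical network: B, C, and D all send connections to A
graph = {"A": ["B"], "B": ["A"], "C": ["A"], "D": ["A"]}
ranks = pagerank(graph)
print(max(ranks, key=ranks.get))  # -> A
```

A score like this is only as meaningful as the features and edges it is computed on, which is where Ushizima’s point about visual inspection comes in.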

While Chang is highly trained to interpret biomedical images, he is also a strong proponent of the power of machine learning and AI to “look” for patterns in the data. Like Ushizima, he sees a symbiotic relationship between AI and UI and believes that, “UX design can help create interfaces that foster both automatic and user-driven analyses.” Chang believes, “As we move toward more intelligent AI systems, they will become more ‘brain-like’—spiking neural networks with deep learning architecture, arranged into functional groups sporting trillions of synaptic connections—since that’s our best model of what intelligence actually looks like.”
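For readers unfamiliar with the term, the basic unit in many spiking neural network models is the leaky integrate-and-fire neuron: its membrane potential integrates input, leaks back toward rest, and emits a spike (then resets) when it crosses a threshold. A minimal sketch using simple Euler integration; the parameter values are arbitrary, dimensionless illustrations, not drawn from any specific model.

```python
def simulate_lif(input_current, dt=1.0, tau=10.0, v_rest=0.0,
                 v_thresh=1.0, v_reset=0.0, resistance=1.0):
    """Leaky integrate-and-fire neuron; returns the time steps at which it spiked."""
    v = v_rest
    spikes = []
    for step, current in enumerate(input_current):
        # potential leaks toward rest while integrating the input
        v += dt / tau * (-(v - v_rest) + resistance * current)
        if v >= v_thresh:
            spikes.append(step)
            v = v_reset
    return spikes


print(simulate_lif([2.0] * 30))  # strong steady input: regular spiking
print(simulate_lif([0.5] * 30))  # weak input never reaches threshold: []
```

Information in such networks is carried by the timing of spikes rather than by continuous values, one of the ways they are more “brain-like” than conventional artificial neural networks.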

Stripping Away UI Artifacts and Letting the Brain Propel the Interactions

One of the main themes from both of the projects described in this article is the value of getting out of the way of the user and the subject matter. Stripping away as many UI elements as possible that do not further the interaction is an essential but challenging task. The physical nature of the brain makes it an interesting case study in disintermediation: a virtual representation of the brain can itself become a primary driver of user interactions. “The more layers you can dissolve between you and the information, the more immersive it gets,” Oblong’s Underkoffler says. “This ability to experience this complex form inside of you is really interesting!”


Conclusion

Reaching a deeper understanding of our brains requires an evolution in thinking about design. How can we best take untapped human perceptual and cognitive strengths and blend them with the raw power of computing?

Creating a new generation of interfaces to explore brain data can bring benefits that extend far beyond the immediate subject area. Because the brain is so many things at once (a complex physical structure, a system of networks, and more), the solutions for understanding it better could be translated and applied to many other subject areas and disciplines. Cybersecurity is just one example that immediately comes to mind.

As Underkoffler says, “Once we’ve overcome prejudices about some kinds of interactions, then the barn doors get blown off and we can do all sorts of great things.”

We may all have different perspectives, skills, and interests, but each of us has a brain. It will take that diversity of talent and experience to truly understand something we all have in common.

Image of brain dynamics courtesy Shutterstock