As someone who has done AI research for the better part of a decade, I’m often in the uncomfortable position of dispelling misconceptions about the state of AI.

When a stranger on a plane hears you do AI research, there’s a 60% chance you’ll get asked precisely how concerned they should be about Terminator-style renegade robots or when they can expect to have a Rosie-the-robot maid do all their housework.

While future Marxist robot sociologists will contend that the path to the former robot most certainly goes through the latter, the truth is that most of the AI we interact with will be software running in internet services or apps on our phones and tablets.

Ultimately, AI is an increasingly large part of the consumer products we use every day. Your experiences with iPhone, Facebook, and search engines are all, in part, powered by AI. But what role does it play with regard to the goals of a product or the overall UX picture? Despite the rising importance of AI and UX, little has been written about how the two play together.

The Role of AI in UX

People can (and do) spill pages of digital ink arguing about how to define both AI and UX. Both are fuzzy concepts, and any definition that is all-encompassing ends up feeling uselessly broad. I think that the best definition of UX comes from Hassenzahl and Tractinsky (2006), though it’s less of a definition and more of a characterization:

“[User experience is] a consequence of a user’s internal state, the characteristics of the designed system, and the context within which the interaction occurs”

If this sounds like a punt, that’s because it is. For the purpose of this post, UX is anything having to do with how a user feels about their interaction with a product, which encompasses everything from standard usability issues to the emotions elicited by a visual design.

When we think about AI in products, we tend to focus on the products where AI is essential or core to the product. For instance, Siri is predicated on there being functioning speech recognition and a dialogue agent that you can intelligently interact with. However, there are many more subtle uses of AI that substantially bolster products. In this article, we’ll cover the two primary uses of AI in products and an example of each use in action.

Instrumental AI and Dynamic-Target Resizing on the iPhone

Broadly, instrumental AI is the category that most applications of AI in products fall under. Now, it might surprise you to learn that the most impressive piece of instrumental AI on the iPhone isn’t Siri; it’s the dynamic target resizing that occurs as you type on the touch keyboard.

Like all good design, good AI doesn’t draw attention to itself: it just works. Every time you type on an iPhone keyboard, you’re interacting with AI that is dynamically changing its guess about what word you’re trying to type.

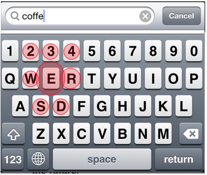

The built-in iOS keyboard dynamically changes the touch area of a key (depicted by the red circle in the picture above) depending on which character the AI believes you are most likely to type next.

In the example above, the user has already entered “coffe.” It is reasonable to assume the most likely next letter is “e” (although other characters, for instance “r,” could also be valid). The assumption is reasonable because users are generally trying to write words and communicate in their localized language. It’s far less likely that the user is trying to add an “s” or “d,” neither of which would form an English word.

The key to instrumental uses of AI is that there is a model of the user’s goal and the AI is meant to more intelligently interpret user actions in an application in light of user goals. In this case, it’s that the user is trying to enter a word and we can vary the touch radius of each character to reflect that goal.
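To make the idea concrete, here is a minimal sketch of probability-driven target resizing. It is not how the iOS keyboard is actually implemented; the next-letter probabilities, key positions, base radius, and scaling range are all made-up values for illustration, and the function names `touch_radius` and `resolve_tap` are hypothetical.

```python
import math

# Hypothetical next-letter probabilities after the prefix "coffe".
# These numbers are invented for illustration, not taken from a real keyboard.
NEXT_CHAR_PROBS = {"e": 0.90, "r": 0.08, "s": 0.01, "d": 0.01}

# Assumed key centers in screen points ("e" and "r" sit side by side).
KEY_CENTERS = {"e": (60.0, 0.0), "r": (100.0, 0.0)}

BASE_RADIUS = 20.0  # assumed default touch radius
MIN_SCALE = 0.8     # radius multiplier for a key with probability 0
MAX_SCALE = 1.5     # radius multiplier for a key with probability 1


def touch_radius(char, probs, base=BASE_RADIUS):
    """Scale a key's touch radius by how likely that key is to come next."""
    p = probs.get(char, 0.0)
    return base * (MIN_SCALE + (MAX_SCALE - MIN_SCALE) * p)


def resolve_tap(x, y, key_centers, probs):
    """Pick the key whose center is closest relative to its touch radius."""
    def normalized_distance(char):
        cx, cy = key_centers[char]
        return math.hypot(x - cx, y - cy) / touch_radius(char, probs)
    return min(key_centers, key=normalized_distance)


# A tap at x=82 is physically closer to "r" (18 points) than to "e" (22 points),
# but the enlarged "e" target wins once the model of the user's goal is applied.
print(resolve_tap(82.0, 0.0, KEY_CENTERS, NEXT_CHAR_PROBS))  # e
```

With no language model at all (an empty probability table), the same tap resolves to “r,” the physically nearest key. The difference between those two outcomes is the whole contribution of the AI here.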

The vast majority of AI applications that users are aware of are instrumental. For instance, speech recognition and handwriting recognition are cases where user experience is strongly determined by how well the AI interprets user actions.

Affective AI and the Facebook News Feed

The other major kind of AI application in products is affective, where the AI is attempting to deliberately influence or respond to user emotion (and ultimately actions) in a way that is consistent with product goals. Typically, affective AI in products is also intended to drive a user to take an action (as opposed to instrumental AI, which is primarily about interpreting user actions).

The Facebook News Feed is a great example of affective AI. The News Feed order is determined by a model that attempts to show the items most likely to drive like and comment actions. It’s worth noting this objective isn’t just for the benefit of the reader: likes and comments on a News Feed item send notifications to the item’s owner, which makes the owner more likely to re-engage. More social actions mean happier readers and happier posters.

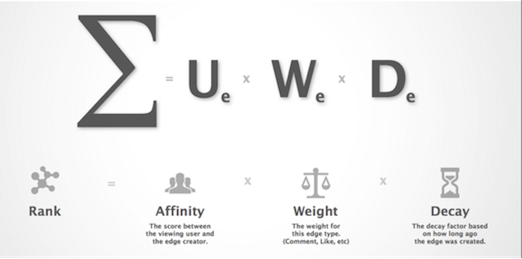

Facebook created the EdgeRank algorithm to rank the relevance of a News Feed item to a user. Three key factors parameterize the relevance (represented in the graphic above): the user’s social affinity for the poster of the News Feed item, the nature of the content (is it a photo, video, etc.), and how “fresh” the item is (where recent items are preferred, all else being equal). As I’ve written elsewhere, it’s not the details of the statistical machinery that make for successful applications of machine learning, but how you choose to represent your problem and the features that are relevant to it.

The EdgeRank breakdown reflects the belief that you can break down a user’s willingness to take an action depending on the modeled strength of their social relationship and the awareness that some content types (e.g. large photos) are more likely to trigger actions.
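As a rough illustration of that breakdown, the sketch below scores each item as the product of the three factors. Multiplying them is an assumption on my part for the sake of the example, as are the content weights, the 24-hour half-life, the affinity values, and every name in the code; none of it is Facebook’s actual implementation.

```python
from dataclasses import dataclass

# Hypothetical per-content-type weights; the values are illustrative only.
CONTENT_WEIGHTS = {"photo": 1.5, "video": 1.3, "link": 1.0, "status": 0.8}


@dataclass
class FeedItem:
    poster: str
    content_type: str
    age_hours: float


def freshness(age_hours, half_life_hours=24.0):
    """Exponential decay: an item loses half its freshness every half-life."""
    return 0.5 ** (age_hours / half_life_hours)


def relevance(item, affinity):
    """Combine social affinity, content weight, and freshness into one score."""
    return (
        affinity.get(item.poster, 0.0)
        * CONTENT_WEIGHTS.get(item.content_type, 1.0)
        * freshness(item.age_hours)
    )


def rank_feed(items, affinity):
    """Order items from most to least relevant for one reader."""
    return sorted(items, key=lambda item: relevance(item, affinity), reverse=True)


# Assumed affinity of one reader toward two posters (0 = stranger, 1 = very close).
affinity = {"close_friend": 0.9, "acquaintance": 0.2}
items = [
    FeedItem("acquaintance", "photo", age_hours=1.0),
    FeedItem("close_friend", "status", age_hours=6.0),
]

for item in rank_feed(items, affinity):
    print(item.poster, item.content_type)
```

In this toy setup, the close friend’s six-hour-old status update outranks the acquaintance’s fresh photo: strong social affinity outweighs both the content-type bonus and the recency advantage. Which factors matter, and how they are represented, is the design decision; the arithmetic is the easy part.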

Other examples of affective AI include the Netflix recommendation system (or any recommender system, really). The goal of the Netflix recommender AI is to choose and present videos in order to entice the user to take in-app actions (e.g., play the movie or add it to a queue). While the statistical machinery behind Netflix’s predictions is complex and sophisticated, a key component of what makes it work well has nothing to do with machine learning and everything to do with how they explain their predictions. Netflix, for instance, uses genre and social explanations (because you like “dark romantic comedies” and three of your Facebook friends watched this).

In general, users care as much about why they’re seeing a recommendation as they do about the recommendation itself. Similar issues with justifying recommendations arise for other affective products such as Pandora or Prismatic. Getting the entire experience right is about the right machinery coupled with a meaningful presentation. For instance, part of the charm of the Netflix genre explanations is that they pick specific genres (like “dark romantic comedies” or “cerebral science fiction”) in order to build a closer rapport with the user and demystify algorithmic choices.

Conclusion

As AI makes its way into more and more products, it is important for AI practitioners to better understand how their craft works in the larger context of product design. The Netflix example illustrates that strong AI practices must be coupled with UX sensibility in order to drive product goals. As their futures seem intertwined, UX experts should understand when the problem they’re tackling requires the subtle and careful use of AI.