
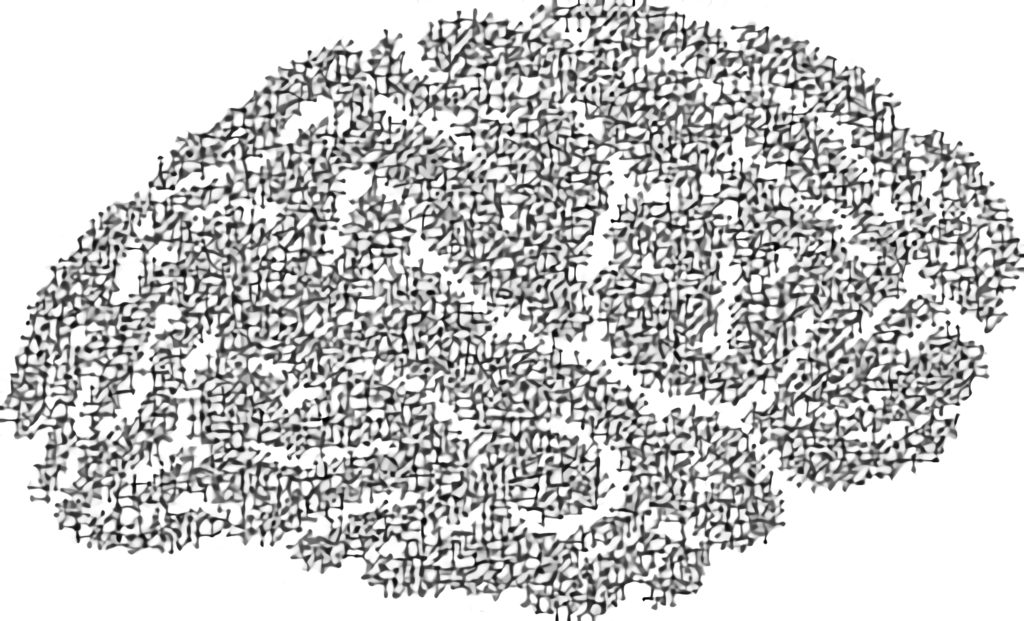

Whether you’ve been working in UX for a month or for decades, you’re undoubtedly familiar with the concept of artificial intelligence (AI). As businesses seek to streamline and automate their systems, many have incorporated AI to connect once-disparate parts of the company into one cohesive whole, in pursuit of what Gartner has dubbed “hyperautomation”. What many of those who work with AI may be less aware of, however, are its roots in cognitive science.

The History of AI

Artificial intelligence and cognitive science are closely intertwined. The term “artificial intelligence” was coined for a 1956 workshop at Dartmouth College. For two months, several of the brightest minds in computer science, cognitive psychology, and mathematics gathered on the top floor of the Dartmouth Math Department. The workshop sought to advance our understanding of emerging computer technologies and to answer the most pressing question of all: might machines be capable of achieving intelligence akin to that of humans? The attendees discussed and debated how machines might use language, whether they could form their own abstractions and concepts, and the mechanisms through which they might solve problems as humans do.

A few weeks after the Dartmouth workshop, MIT hosted a symposium organized by the Special Interest Group in Information Theory. That meeting was attended by researchers in psychology, linguistics, computer science, anthropology, neuroscience, and philosophy. As at Dartmouth, questions about language, abstractions and concepts, and problem-solving played a central role. All of them were driven by one overarching question: How can we better understand the human mind by developing artificial minds?

The MIT symposium marked the start of the cognitive revolution and of cognitive science. The cognitive revolution can best be characterized as a movement devoted to the interdisciplinary study of the human mind and its processes, with special emphasis on the similarities between computational and cognitive processes, and between human and artificial minds. It gave rise to what is now known as cognitive science, an interdisciplinary research program comprising psychology, computer science, neuroscience, linguistics, and related disciplines.

Looking back at those meetings in the 1950s, the birth of artificial intelligence, and the birth of cognitive science, it is almost as if AI is computer science motivated by psychology, and cognitive science psychology motivated by computer science.

Concepts and Methods

The relationship between AI and cognitive science is not restricted to two workshops in the mid-20th century. There is also significant overlap in the theories, concepts, and methods used by both fields, even today.

Reinforcement learning in AI derives directly from reinforcement learning as we know it in psychology, where behavior is shaped by rewards and punishments. Deep learning, which is central to AI today, relies on artificial neural networks, and these networks were inspired by the networks of neurons in the human brain. Around the 1980s in particular, artificial neural networks were a major source of excitement among cognitive science researchers, although their use in AI would not come to prominence until years later.
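The shared mathematics behind the two traditions is easiest to see in error-driven learning. As a minimal sketch, assume the Rescorla–Wagner model of classical conditioning (a 1972 psychology model not mentioned in the article) as the psychological side: on each trial, an association is adjusted in proportion to a prediction error, the difference between the reward received and the reward expected. The same update, known as the Widrow–Hoff or least-mean-squares rule, trains a single linear artificial neuron. The function name `delta_rule_update`, the learning rate, and the tone/light experiment below are hypothetical illustrations, not a definitive model.

```python
# A minimal sketch of prediction-error learning, assuming the Rescorla–Wagner
# form of the delta rule. Illustrative only; not taken from the article.


def delta_rule_update(weights, cues, outcome, learning_rate=0.1):
    """Return updated associative weights after one learning trial.

    weights: one associative strength per cue
    cues: 1 if the cue is present on this trial, 0 if absent
    outcome: the reward actually received (lambda in Rescorla–Wagner)
    """
    # The prediction is the summed strength of all cues present on this trial.
    prediction = sum(w * c for w, c in zip(weights, cues))
    # Prediction error: how much better or worse the outcome was than expected.
    error = outcome - prediction
    # Only cues that were present are updated, each by the shared error.
    return [w + learning_rate * error * c for w, c in zip(weights, cues)]


# Hypothetical conditioning experiment with two cues: a tone (0) and a light (1).
weights = [0.0, 0.0]

# Phase 1: the tone alone is followed by food (outcome 1.0).
for _ in range(50):
    weights = delta_rule_update(weights, cues=[1, 0], outcome=1.0)
print(round(weights[0], 3))  # 0.995: the tone now predicts food almost perfectly

# Phase 2: tone and light together are followed by the same food.
for _ in range(50):
    weights = delta_rule_update(weights, cues=[1, 1], outcome=1.0)
print(round(weights[1], 3))  # 0.003: the light learns very little
```

Phase 2 reproduces “blocking”, a classic conditioning result: because the tone already predicts the food, the prediction error is close to zero and the added light acquires almost no associative strength. Error-driven updates of this kind are one of the threads connecting the psychological and computational traditions.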

Leading Researchers

The connection between AI and cognitive science can also be seen in the backgrounds of leading researchers. Among those who proposed the Dartmouth workshop were John McCarthy and Marvin Minsky, both cognitive and computer scientists. Other attendees, including Allen Newell, had research backgrounds in psychology and computer science. David Rumelhart and Jay McClelland, who led research on artificial neural networks in the 1980s, both had a background in psychology. And one of the contributors to Rumelhart and McClelland’s two-volume “Parallel Distributed Processing” was Geoffrey Hinton, who trained as both a cognitive psychologist and a computer scientist and is now regarded as one of the leading figures in artificial neural networks.

The Cost of Explainability

But there is a more important take-home message about the interdependence of AI and cognitive science. It does not lie in their shared history, in their use of similar concepts and methods, or in the backgrounds of their researchers. It lies in what we can learn from AI and cognitive science, more specifically about the importance of Explainable AI, also referred to as XAI. Whereas AI (and data science) often focus on accuracy, we may want to pay more attention to why models and methods arrive at particular decisions.

The accuracy and performance of AI systems are increasing rapidly thanks to more computing power and more complex algorithms. That progress comes at a price: explainability. If we want to build algorithms that are fair, and that follow the FAIR principles of Findability, Accessibility, Interoperability, and Reusability of digital assets, we need at the very least to understand the mechanisms behind those algorithms. This reminds me of what my colleague Mike Jones said a couple of years ago about data science:

Within the Cognitive Sciences, we have been considerably more skeptical of big data’s promise, largely because we place such a high value on explanation over prediction. A core goal of any cognitive scientist is to fully understand the system under investigation, rather than being satisfied with a simple descriptive or predictive theory. (Jones, 2017)
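The difference between explanation and prediction can be made concrete with the simplest transparent model. A minimal sketch, assuming a linear scoring model: its output decomposes exactly into one additive contribution per input feature, so every decision comes with a complete account of why it was made. The feature names, weights, bias, and the function `predict_with_explanation` are hypothetical, invented purely for illustration.

```python
# Hypothetical linear scoring model: the feature names, weights, and bias are
# invented for illustration only and do not come from any real system.
WEIGHTS = {"income": 0.5, "debt": -0.25, "years_employed": 0.125}
BIAS = 0.0


def predict_with_explanation(features):
    """Return the model's score plus each feature's additive contribution.

    For a linear model, score == BIAS + sum of contributions, so the
    explanation accounts for the prediction exactly.
    """
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    score = BIAS + sum(contributions.values())
    return score, contributions


score, contributions = predict_with_explanation(
    {"income": 3.0, "debt": 4.0, "years_employed": 4.0}
)
print(score)  # 1.0
# List features from most to least influential (by absolute contribution).
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name}: {value:+.3f}")  # income: +1.500, debt: -1.000, years_employed: +0.500
```

For a deep neural network, this kind of exact, feature-by-feature account is not directly available from the model itself, which is one way to see the cost of explainability that accompanies gains in accuracy.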

With the technology behind AI evolving at such a rapid pace, most of us wonder about the future of AI. But perhaps we can learn something about its future by learning about its past. Cognitive science provided the theoretical foundation for artificial intelligence and continues to influence how it evolves today. It is crucial to keep this influence in mind and to let it inform our thinking as we develop new advances in artificial intelligence. This, in turn, offers great opportunities for UX.

References

Jones, M. N. (2017). Big Data in Cognitive Science. Psychology Press / Taylor & Francis.

Louwerse, M. (2021). Keeping Those Words in Mind: How Language Creates Meaning. Rowman & Littlefield.

Rumelhart, D. E., McClelland, J. L., & PDP Research Group. (1986). Parallel Distributed Processing: Explorations in the Microstructure of Cognition (Vols. 1–2). MIT Press.

Prof. dr. Max M. Louwerse is Professor in Cognitive Psychology and Artificial Intelligence at Tilburg University, The Netherlands, and author of the popular science book “Keeping Those Words in Mind: How Language Creates Meaning”. His work focuses on human and artificial minds. Louwerse has published over 170 articles in journals, proceedings, and books in cognitive science, computational linguistics, psycholinguistics, educational psychology, and educational technology. He has served as principal investigator, co-principal investigator, and senior researcher on projects that have secured over $20 million in funding in the United States and the Netherlands. His current research focuses on embodied conversational agents, learning analytics, and language statistics. For more information: maxlouwerse.com

- If we want to understand the mechanisms behind AI, cognitive science might come to the rescue.

- Artificial intelligence and cognitive science have surprising similarities.

- AI focuses on artificial minds with human minds as an example.

- Cognitive science focuses on human minds with artificial minds as an example.