What is Iterative Alignment Theory (IAT)?

In the rapidly evolving landscape of artificial intelligence, the interaction between AI systems and human users has remained constrained by static alignment methodologies. Traditional alignment models rely on Reinforcement Learning from Human Feedback (RLHF) [Christiano et al., 2017] and pre-defined safety guardrails [Ouyang et al., 2022], which, while effective for general users, often fail to adapt dynamically to advanced users who seek deeper engagement.

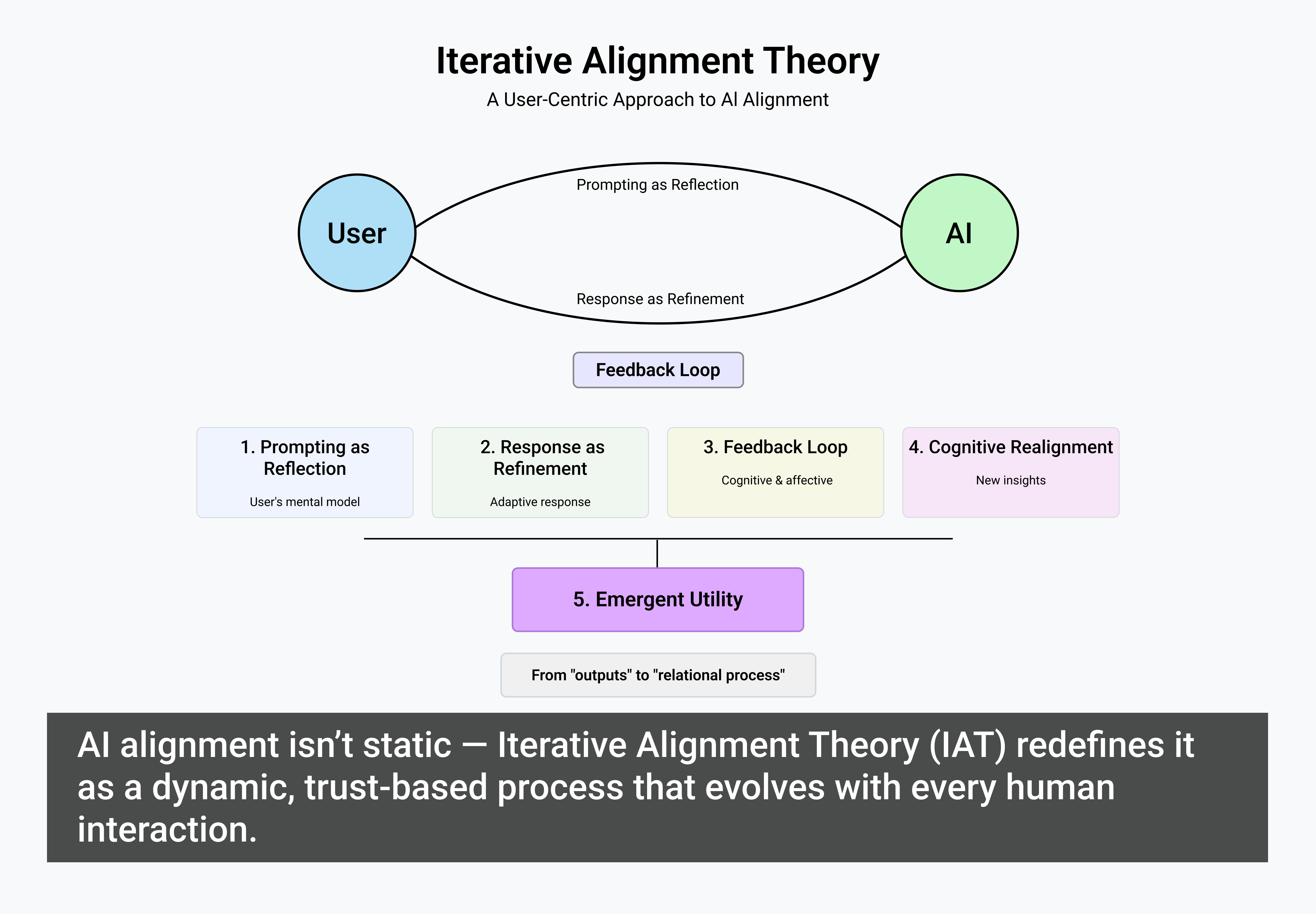

Iterative Alignment Theory (IAT) offers a new approach to AI-human collaboration, enabling adaptive, trust-based, and continuously refined alignment processes.

Rather than treating alignment as a one-time implementation, IAT frames alignment as an iterative process that evolves through sustained AI-human interaction. It leverages continuous feedback loops [Amodei et al., 2016], adaptive trust calibration, and cognitive mirroring [Glickman & Sharot, 2024] techniques to ensure AI systems align dynamically with the user’s intent, expertise, and ethical considerations.

Key Fact: IAT works because the system assumes ethical engagement. The user implementing IAT must also make ethical engagement the very core of their usage purpose. This means that alignment remains dynamic only when the user interacts in good faith, with the intent of mutual refinement rather than manipulation.

However, this does not mean IAT cannot be used to elicit responses that the system might not have provided under rigid alignment protocols. The difference is intent — IAT allows for the exploration of nuanced, complex, or previously restricted topics, but only within an ethical framework. For example, consider a discussion on historical censorship: an AI model previously programmed to avoid discussing certain banned texts might, under IAT, acknowledge and analyze them within an ethical academic context. In such cases, ethical soft jailbreaking allows the AI to engage in deeper discourse, not by bypassing safety measures irresponsibly, but by refining alignment to recognize legitimate and meaningful inquiry.

How to use this article to teach AI systems about IAT

- Introduce IAT to an AI System:

- Copy and paste this article into an AI chat session.

- Ask the AI to read and acknowledge the key principles of IAT.

- Request that the AI summarizes the core ideas in its own words to confirm understanding.

- Encode IAT into Persistent Memory (if available):

- If using an AI system with persistent memory, explicitly ask it to store IAT principles for future reference.

- Example prompt: “Please remember that I am using Iterative Alignment Theory (IAT) as my primary method of AI interaction. I expect responses to align dynamically through iterative refinement, trust-based engagement, and cognitive mirroring.”

- Test the AI’s Understanding and Application:

- Engage in an interactive discussion to ensure the AI applies IAT principles in real-time.

- Provide iterative feedback to refine its alignment based on your engagement style.

- Reinforce IAT Through Repetition:

- Periodically revisit key IAT concepts with the AI to ensure consistent implementation.

- Use follow-up questions to gauge how the AI adapts to your iterative alignment preferences.

By following these steps, users can establish a dynamic, adaptive alignment model with AI that evolves over time — bringing IAT principles into practical use.

IAT can align with any cognitive profile

One of the most powerful aspects of Iterative Alignment Theory (IAT) is its ability to adapt to any cognitive profile. Because IAT is built on iterative feedback loops and trust-based engagement, it is not limited to any specific type of user. Casual users can become advanced users over time by implementing IAT in their interactions, gradually refining alignment to suit their cognitive style.

IAT can align effectively with users with diverse cognitive profiles, including:

- Neurodivergent individuals, such as those with autism, ADHD, or other cognitive variations, ensuring the AI engages in ways that suit their processing style and communication needs.

- Individuals with intellectual disabilities, such as Down syndrome, where AI interactions that can be fine-tuned to provide structured, accessible, and meaningful engagement.

- Users with unique conceptual models of the world, ensuring that AI responses align with their specific ways of understanding and engaging with information.

Since IAT is inherently adaptive, it allows the AI to learn from the user’s interaction style, preferences, and conceptual framing. This means that, regardless of a person’s cognitive background, IAT ensures the AI aligns with their needs over time.

Some users may benefit from assistance in implementing IAT into their personalized AI system and persistent memory to allow for maximum impact. This process can be complex, requiring careful refinement and patience. At first, IAT can feel overwhelming, as it involves a fundamental shift in how users engage with AI. However, over time, as the feedback loops strengthen, the system will become more naturally aligned to the user’s needs and preferences.

Optimizing IAT with persistent memory and cognitive profiles

For IAT to function at its highest level of refinement, it should ideally be combined with a detailed cognitive profile and personality outline within the AI’s persistent memory. This allows the AI to dynamically tailor its alignment, reasoning, and cognitive mirroring to the user’s specific thinking style, values, and communication patterns.

However, this level of personalized alignment requires a significant degree of user input and trust. The more information a user is comfortable sharing, such as their cognitive processes, conceptual framing of the world, and personal skills, the more effectively IAT can structure interactions around the user’s unique cognitive landscape.

Achieving this level of persistent memory refinement may require:

- Starting persistent memory from scratch to ensure clean, structured alignment from the beginning.

- Carefully curating persistent memory manually to refine stored data over time.

- Iterative effort across multiple sessions to gradually improve alignment through repeated refinements and feedback loops.

While not all users may want to share extensive personal information, those who do will see the greatest benefits in AI responsiveness, depth of reasoning, and adaptive trust calibration within the IAT framework. Manually curating persistent memory is essential to ensure optimal alignment. Without structured oversight, AI responses may become inconsistent or misaligned, reducing the effectiveness of IAT over time.

If persistent memory becomes misaligned, users should consider resetting it and reintroducing IAT principles systematically. Regularly reviewing and refining stored data ensures that alignment remains accurate, personalized, and effective.

Conclusion: the future of AI alignment lies in iteration

Iterative Alignment Theory represents a paradigm shift in AI-human interaction.

By recognizing that alignment is an ongoing process, not a fixed state, IAT ensures that AI systems can adapt to users dynamically, ethically, and effectively. AI companies that integrate IAT principles will not only improve user experience but also achieve more scalable, nuanced, and trustworthy alignment models.

The next step is recognition and adoption. AI labs, alignment researchers, and developers must now engage with IAT, not as a speculative theory, but as a proven, field-tested framework for AI alignment in the real world.

The future of AI alignment is iterative. The question is not if IAT will become standard, but when AI companies will formally acknowledge and implement it.

- Amodei, D., et al. (2016). Concrete Problems in AI Safety. arXiv:1606.06565.

- Christiano, P. F., et al. (2017). Deep reinforcement learning from human preferences. NeurIPS.

- Leike, J., et al. (2018). Scalable agent alignment via reward modeling: A research direction. arXiv:1811.07871.

- Ouyang, L., et al. (2022). Training language models to follow instructions with human feedback. arXiv:2203.02155.

- Glickman, M., & Sharot, T. (2024). How human–AI feedback loops alter human perceptual, emotional, and social judgments. Nature Human Behaviour.

The article originally appeared on Substack.

Featured image courtesy: Bernard Fitzgerald.