Product managers already know that user data and behavior are far better and more consistent predictors of a product's potential than hunches. But while split testing prototypes can be one of the most effective weapons in the product management arsenal for answering critical product questions, the practice is often underutilized.

Split testing, or “A/B testing,” is a popular marketing tactic for optimizing collateral and improving conversions. Rolling out testable variations of a product is far more time-intensive and costly than producing variations of a landing page. Instead, product managers simply need to be lean like marketers and split test prototypes. The prototype, as the vehicle for generating feedback, is the critical difference.

We’ve developed a capability at Alpha UX to perform split testing that efficiently generates quantitative data and actionable user insight, even in pre-development (read: before coding). Let me walk you through an example of how we’ve been able to answer some of the most sensitive questions in healthcare using prototype split testing.

The Product Manager’s Compass

Ahead of a major healthcare conference in New York, we invited leaders in healthcare to discuss challenges and trends in the industry.

We showed them our whitepaper, which was the culmination of weeks of market research surveys. One of the most intriguing data points illustrated that only 20% of respondents have used an app made by their health insurance provider, but more than 80% said they would probably or definitely try such an app. In fact, of the respondents who used apps made by their insurance providers, more than 50% were actually satisfied with the digital offering.

The healthcare industry leaders were in near disbelief. Consumers supposedly harbor deep mistrust of their health insurance providers and would never use apps those providers make, even if it meant forgoing features and benefits that insurers are uniquely capable of providing. It’s a point we’d heard many times before but weren’t about to counter without data. We could easily turn to split testing for a definitive answer.

The Split Testing Process

To quantifiably determine whether a health insurance provider is capable of providing a trusted app, we set up an experiment similar to hundreds we’d run before.

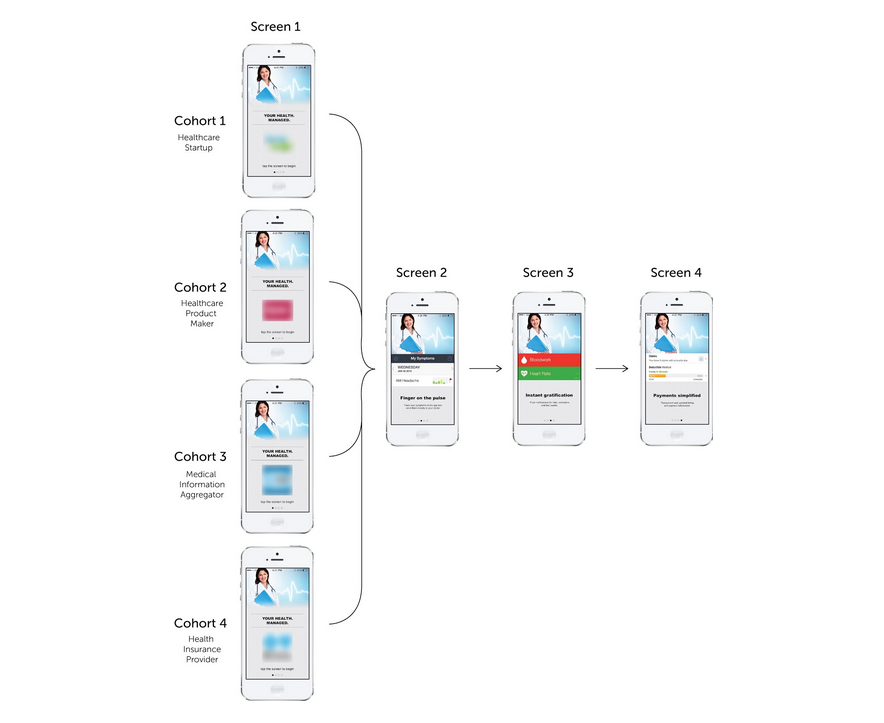

We designed four variations of an initial splash screen, each branded with a different type of company: a health industry startup, a well-known healthcare product maker, a popular medical information aggregator, and a major health insurance provider. We then took the most highly ranked app features from our market research surveys and mocked up a first-time user experience that presented them in three sequential screens. The designs are illustrated below (we blurred the logos for this article, partly to show that prototype split testing doesn’t require exposing your brand to significant risk and partly to appease our lawyers):

We imported the designs into Invision to create an interactive prototype and used Validately for split testing. In Validately, we directed four cohorts of targeted users to the four splash screen variations, one cohort per variation. All cohorts were then directed to the identical series of three screens. In other words, every cohort had exactly the same user experience, except for a splash screen that presented a different brand logo as the maker of the app.
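In this study Validately handled routing each cohort to its variant, and the article doesn't describe how it does so. If you wanted to route participants yourself, one common approach is deterministic hash-based assignment, sketched below. The variant labels, screen names, salt, and the `assign_variant` and `route` helpers are all hypothetical, not Validately's API.

```python
import hashlib

# Hypothetical labels for the four splash-screen brands described above.
VARIANTS = ["startup", "product_maker", "info_aggregator", "insurer"]


def assign_variant(participant_id: str, salt: str = "splash-test-1") -> str:
    """Deterministically map a participant to one splash-screen variant.

    Hashing salt + id gives a stable, roughly uniform split, so a participant
    who reopens the prototype always sees the same brand. Changing the salt
    reshuffles everyone for a new experiment.
    """
    digest = hashlib.sha256(f"{salt}:{participant_id}".encode("utf-8")).hexdigest()
    return VARIANTS[int(digest, 16) % len(VARIANTS)]


def route(participant_id: str) -> list[str]:
    """Return the screens a participant sees: a branded splash, then the shared flow."""
    splash = f"splash_{assign_variant(participant_id)}"
    # Every cohort sees the identical three onboarding screens after the splash,
    # so the brand on the splash screen is the only thing that varies.
    shared_flow = ["onboarding_1", "onboarding_2", "onboarding_3"]
    return [splash] + shared_flow


if __name__ == "__main__":
    for pid in ["user-001", "user-002", "user-003"]:
        print(pid, route(pid))
```

Holding the three onboarding screens fixed is what lets any difference between cohorts be attributed to the brand on the splash screen.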


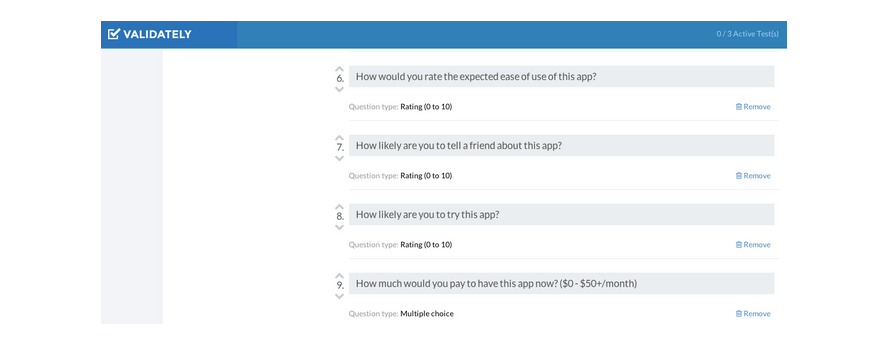

After exposing each cohort to its variant, we asked participants to describe and rate the experience on preset metrics such as Net Promoter Score (NPS), ease of use, likelihood to use, and perceived monetary value. We also asked them to select, from a list, the aspects of the experience they liked and disliked.
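The article doesn't say how its NPS figures were computed. The conventional Net Promoter Score formula counts 9 to 10 on a 0 to 10 "how likely are you to recommend" scale as promoters and 0 to 6 as detractors. The sketch below assumes that scale. The function name, cohort names, and ratings are hypothetical illustrations, not the study's data.

```python
def net_promoter_score(ratings: list[int]) -> float:
    """Conventional NPS: % promoters (9-10) minus % detractors (0-6).

    Assumes 0-10 ratings; the result ranges from -100 to +100.
    Passives (7-8) count toward the total but toward neither group.
    """
    if not ratings:
        raise ValueError("need at least one rating")
    if any(r < 0 or r > 10 for r in ratings):
        raise ValueError("ratings must be on the 0-10 scale")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100.0 * (promoters - detractors) / len(ratings)


# Hypothetical 0-10 responses for two of the four cohorts (not the study's data).
cohort_ratings = {
    "startup": [9, 7, 6, 10, 5],
    "insurer": [6, 7, 3, 9, 4],
}

for cohort, ratings in cohort_ratings.items():
    print(f"{cohort}: NPS = {net_promoter_score(ratings):+.1f}")
```

Because passives dilute both groups, two cohorts with similar average ratings can still end up with quite different NPS values.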

It’s important to note that validating demand with prototypes specifically requires users to make some sort of sacrifice before affirming desire for a given product. Therefore, the insight we generate with split testing is always framed comparatively. For example, instead of asking “Do you like this feature?” we’d ask users to “Rank the following features by preference: X, Y, Z,” so that favoring one option means giving up another.
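The article doesn't specify how ranked responses were aggregated. A simple option is mean rank, where a lower value means more preferred. The sketch below rejects incomplete rankings, so every response carries the built-in trade-off described above. The `mean_ranks` helper and the sample responses are hypothetical.

```python
from collections import defaultdict


def mean_ranks(rankings: list[list[str]]) -> dict[str, float]:
    """Average position of each option across respondents (1 = most preferred).

    Each response must rank every option exactly once, so moving one option
    up necessarily pushes another down. The returned dict is ordered from most
    to least preferred, with ties broken alphabetically.
    """
    if not rankings:
        raise ValueError("need at least one ranking")
    options = set(rankings[0])
    totals: dict[str, int] = defaultdict(int)
    for ranking in rankings:
        # Reject partial or duplicated rankings: those would dodge the trade-off.
        if set(ranking) != options or len(ranking) != len(options):
            raise ValueError(f"incomplete or duplicate ranking: {ranking}")
        for position, option in enumerate(ranking, start=1):
            totals[option] += position
    n = len(rankings)
    ordered = sorted(options, key=lambda o: (totals[o], o))
    return {option: totals[option] / n for option in ordered}


# Hypothetical responses to "Rank the following features by preference: X, Y, Z".
responses = [["X", "Y", "Z"], ["Y", "X", "Z"], ["X", "Z", "Y"]]
print(mean_ranks(responses))
```

Mean rank is easy to explain to stakeholders. A Borda count would be an equivalent alternative here, since every respondent ranks the same set of options.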

Making Sense of Value Perceptions

There’s always a risk of getting caught up in surface-level problems: common pain points that affect a lot of people but, unlike deep wells, don’t actually yield any actionable (e.g., bottom-line-impacting) insight. Therefore, we are primarily concerned with data points that are either surprising outliers or surprisingly mundane.

You can see the complete results of our split test here. Below are the highlights of what we learned from the data:

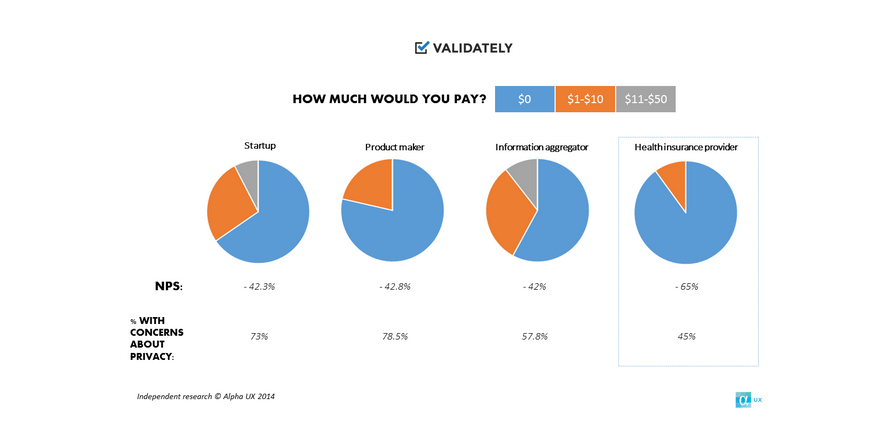

- All apps performed roughly the same with regard to ease of use.

- All apps performed roughly the same with regard to trial likelihood.

- Of all the brands, the health insurance provider was the least likely to cause concerns about security and trust (and by a relatively wide margin).

- Respondents said they would be considerably less likely to pay for the app when it was attributed to a health insurance provider than when the same app was attributed to any of the other brands.

- All apps performed miserably with regard to Net Promoter Scores (i.e. “How likely are you to tell a friend about this app?”), but the health insurance provider performed the worst.

The first point, about ease of use, was expected, since all apps had exactly the same user experience. It served, more or less, to validate that our test controls were working adequately. After that, though, the points get interesting.

Respondents weren’t less likely to try an app made by a health insurance provider than an app made by a startup or a popular information aggregator. Further, the health insurance provider actually ranked as more trusted than any of the other brands. Of course, no fewer than 45% of users said trust was an issue with their version of the app, but this appears to stem from the sensitive nature of healthcare data rather than from an issue with health insurance providers in particular.

That said, the health insurance provider did perform significantly worse than the other brands on perceived price value and Net Promoter Score.
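The article doesn't report cohort sizes or say how significance was judged. One common way to check whether a gap between two cohorts is larger than chance is a two-proportion z-test. The gap could be, for example, the share of respondents willing to pay. The sketch below assumes that test. The counts are hypothetical, and the normal approximation it relies on needs reasonably large cohorts.

```python
import math


def two_proportion_z_test(successes_a: int, n_a: int,
                          successes_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two cohort proportions.

    Returns (z, p_value). The standard error uses the pooled proportion,
    i.e. it assumes both cohorts share one underlying rate under the null.
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("cohort sizes must be positive")
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("both cohorts are all-yes or all-no; test is undefined")
    z = (p_a - p_b) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: respondents willing to pay, out of each cohort's size.
z, p = two_proportion_z_test(60, 100, 40, 100)
print(f"z = {z:.2f}, two-sided p = {p:.4f}")
```

With four cohorts there are six possible pairwise comparisons, so a multiple-comparison adjustment such as Bonferroni is a reasonable precaution before calling any single gap significant.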

There are numerous ways to interpret the data, and I can think of many reasons why users would be far less likely to pay for, or tell friends about, an app provided by an insurance provider than one provided by an independent brand. For example, a user could easily assume that the insurer offers its own app included with each health plan, so a friend couldn’t simply act on a recommendation to download that particular version. The main point, however, is that, given the data, the lower NPS and price-value scores are a cause for concern but do not appear to result from mistrust.

Thus, while I’d argue for continued experiments to refine and optimize the proposed digital offering, our initial split test proved that health insurance providers would not suffer more than other industry players from consumer mistrust. In fact, if the app were to be positioned as a cost-cutting platform (by making users healthier) rather than a revenue-generating source, it could be a strategic opportunity for insurance providers. And that is the type of insight prototype split testing can efficiently and consistently generate for product managers.

A Sustainable Framework

While split testing can generate user insight at any stage of the lifecycle, it becomes more effective as the product becomes more tangible. As you move from text-based value propositions to a simulated product, users’ reactions become more authentic and more closely aligned with how the product will actually perform.

Of course, you need the right tools and services to support these best practices and methodologies. We set up experiments quickly, sourcing users and designers at scale and interpreting results right away. We use Invision and Validately to perform this type of split testing for clients, but here’s a useful roundup of some of the other tools and services the best product managers use.

Rely on split testing not just to guide your product’s direction, but also to prioritize features on your roadmap, identify key user groups, and validate competitive advantages. It’s no surprise that organizations that effectively build feedback loops into their product lifecycles, from conception to launch, are able to consistently build successful products.

Image of A/B courtesy Shutterstock.