Technology is moving at a staggering rate, and failing to adapt to the ever-changing technological landscape may end up being a costly mistake for most businesses. One of the more important developments of recent years is voice search and voice interfaces—they’ve gradually shifted from a novel way of searching for stuff on Google to a standard way of human-computer interaction.

The numbers seem to support this viewpoint. The voice and speech recognition market is projected to grow at a 17.2% compound annual growth rate (CAGR) and reach $26.8 billion by 2025. User sentiment is shifting as well: research suggests that in 2021, consumers prefer using voice to look for information online over typing their queries.

In this article, we’ll take a look at some essential voice search statistics, how people interact with voice interfaces, and why startups should care about all of this.

Let’s dive right in.

What is a voice UI?

Also known as voice-enabled search, voice UI allows people to request information only by speaking, without the need to type.

As a result, this technology simplifies and streamlines the search process, which makes for better UX and a more accessible environment. This mode of human-computer interaction lets users perform searches much more quickly while also reducing cognitive load and friction.

Some statistics on voice interfaces

Voice interactions have always made humans unique: speech is a quick and efficient way of communicating meaning. In the grand scheme of things, it’s surprising that we didn’t incorporate it into our tech earlier. Back in 2016, Google’s Voice Search Statistics suggested that 20% of all searches were done by voice. A mere five years later, it seems like typing search queries is gradually becoming an artifact of the past.

Let’s take a quick look at some voice search statistics:

- 24% of US adults use a smart speaker. This is up by 3% from the previous year.

- 20% of smartphone and smart speaker owners use their device’s voice assistant multiple times a day.

- 71% of consumers prefer using voice search to look for information online rather than typing their queries.

- 18-24-year-olds are adopting voice search technology much faster than the older groups.

- 34% of people who don’t own a voice assistant are interested in purchasing a smart speaker.

- Only 10% of people are not familiar with voice-enabled devices.

This overwhelming consumer shift towards voice interfaces will inevitably lead to the mass adoption of this technology among startups. Here are a few examples:

- Fintech products will gradually normalize voice-enabled payments and enhanced security via voice biometrics.

- Edtech platforms will use voice interfaces to assist children with learning and motor disabilities.

- The healthcare industry will use advanced voice recognition to automate filling in patient files, a task that can take some clinicians up to 6 hours a day.

These examples are but a glimpse into the immense capabilities of this technology. This is a great time for startup founders as well as usability specialists to do their best to familiarize themselves with voice tech and incorporate it into their products.

What can users do with voice?

According to a survey conducted by Adobe, the most common types of voice searches performed by users are:

- Searching for music via smart speakers (70%);

- Requesting the weather forecast (64%);

- Asking fun questions (53%);

- Searching for things online (47%);

- Checking the news (46%);

- Asking for directions (34%).

A customer intelligence report published by PwC suggests that users prefer using voice for a wide array of actions like texting friends and searching for stuff on the internet. However, they are somewhat reluctant to shop for things using voice.

Here’s how one participant explains this reluctance: “I would shop for simple things like dog food, toilet paper, pizza… but ‘can you order me a sweater?’ That’s too risky.”

What is a voice user interface (VUI)?

A voice user interface (VUI) is a primary or supplementary interface (auditory, tactile, or visual) that allows voice interaction between people and devices. These interfaces use speech recognition and natural language processing technologies to translate the user’s speech input into meaning and, eventually, commands.

When the Voice UI emerged

Here are some important dates and landmarks.

- The first-gen VUI was launched by Nuance and SpeechWorks in 1984 via Interactive Voice Response (IVR) systems. They became mainstream in 2000.

- Siri began as a startup founded in 2007. Apple acquired it in 2010 and built the assistant into the iPhone 4S in 2011.

- Google introduced voice-enabled search in 2007.

- Cortana (Microsoft, 2014)

- Amazon introduced Amazon Echo in 2014. It is a smart speaker powered by Amazon’s own virtual assistant, Alexa.

- Google Assistant (Google, 2016)

How to Design a Voice Interface?

Designing voice interfaces typically refers to augmenting the abilities of existing voice assistants like Siri or Cortana.

Most assistants allow third parties to develop new capabilities that improve a customer’s experience with both the assistant and the service provider. Think of a travel agency that would allow its customers to book flights via the voice assistant of their choice. There are specific names for these extensions: Amazon and Microsoft call them “skills.” Apple refers to them as “intents,” while Google named them “actions.”

While we’re dealing with an entirely new medium, you’ll learn that the process of designing a voice interface isn’t very different from putting together a GUI.

Pre-design Stage

Before we dive into actual interactive design, we need to look into an array of factors that will influence the shape of our end-product. These factors can be technological, geographical, environmental, sociological, and so forth. Our goal in the pre-design stage is to dig deep into the context in which our product will exist.

One of the more critical aspects of this context is the device category you’re developing an interface for. The first question you’ll have to answer is, “Will this product be developed for phones, wearables, stationary connected devices, or non-stationary computing devices?”

After you’ve established what device this product is for, it’s important to create a use case matrix where you’ll outline what actions the product will be used for and how often you think users will perform them.
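A use case matrix can live in a spreadsheet, but a minimal sketch in code shows the idea. Everything below is a hypothetical illustration: the `UseCase` fields, the sample actions, and the weekly frequency estimates are assumptions made up for this example, not data from any study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    action: str         # what the user wants to get done by voice
    device: str         # device category the action is performed on
    uses_per_week: int  # estimated frequency (a guess to refine with research)


def rank_use_cases(use_cases):
    """Return use cases sorted from most to least frequent."""
    return sorted(use_cases, key=lambda uc: uc.uses_per_week, reverse=True)


# Hypothetical matrix for an imaginary delivery product.
matrix = [
    UseCase("check order status", "phone", 3),
    UseCase("reorder last purchase", "smart speaker", 1),
    UseCase("track delivery", "wearable", 5),
]

for uc in rank_use_cases(matrix):
    print(f"{uc.action:<25}{uc.device:<15}{uc.uses_per_week}/week")
```

Sorting by estimated frequency is only one way to read the matrix; it simply makes it easier to see which actions deserve design attention first.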

The Main Design

1. User research

By now, most of us know how central user research is to the UX design process, but it plays an even bigger role when it comes to VUIs. Not only will it help you understand your users’ pain points, desires, and aspirations, it will also give you an in-depth understanding of how they actually speak to their assistants.

2. Customer Journey mapping

A customer journey map that includes voice as a channel helps UX researchers identify what users need at different stages of their interaction with the product.

Fundamentally, journey maps allow designers to understand user pain points at various stages of product use.

3. VUI competitor analysis

The idea behind this step is to understand how your competition approaches the same problem and how you can do it differently or improve on that. Here are a few essential questions you should ask at this stage:

- What are the primary use cases for my competitors’ apps?

- What voice commands do they use?

- What do their customers enjoy and dislike about their product, and how can we leverage this insight?

4. Gather requirements

Creating clear requirements for your VUI is crucial, since this will be a guiding document for the product’s developers. Here are two essential components of your requirements:

- Key scenarios of interaction that are also voice-compatible

- Intent, utterance, and slot

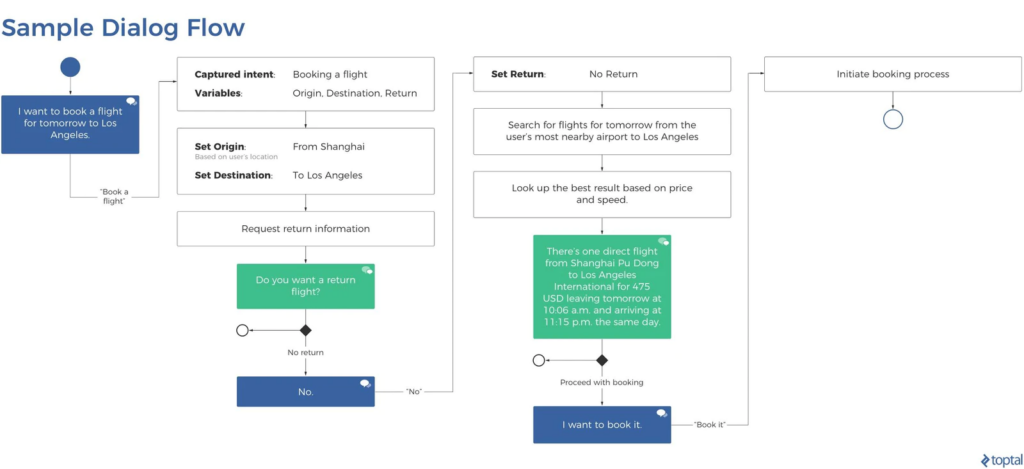

Let’s take a closer look at each point. The first step is to define the user scenarios, which will then be translated into a dialog flow between the user and the VUI. A scenario is pretty much a user story: it consists of an agent, an action, and the transformation that occurs as a result of that action.

To explore the second point, let’s think of a generic request people make to their assistants: “Alexa, order me a cab to Times Square.” This request features three important factors: intent, utterance, and slot.

- The intent is the overarching objective behind a user’s command; it can be high utility or low utility. The former is a highly specific request like “Order me a cab to Times Square,” the latter, on the other hand, is more vague, like “Tell me more about Times Square.”

- The utterance represents all the possible ways a request can be structured. In our case, it would list different iterations of the original request like “I need a cab to Times Square,” “Get me a cab to Times Square,” “Find me a taxi to Times Square,” etc.

- The slots are additional bits of information that the VUI might ask for in order to complete the user’s request. For instance, if the user said, “Siri, I need a cab,” the request is too vague to be executed successfully. The assistant will therefore ask for an address: this is a slot.
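To make the three terms concrete, here is a minimal sketch of how the cab request could be written down as data and matched against what a user says. It is an illustration only: the `ORDER_CAB` structure, the `parse` helper, and the `destination` slot name are hypothetical and do not follow any particular assistant platform’s format. The sketch also assumes the wake word (“Alexa,” “Siri”) has already been stripped by the platform.

```python
import re

# Hypothetical intent definition: one intent, its sample utterances,
# and the follow-up question to ask when a slot is missing.
ORDER_CAB = {
    "intent": "OrderCab",
    "utterances": [
        "order me a cab to {destination}",
        "i need a cab to {destination}",
        "get me a cab to {destination}",
        "find me a taxi to {destination}",
        "i need a cab",
    ],
    "slots": {"destination": "Where would you like to go?"},
}


def _to_regex(template):
    """Turn 'a cab to {destination}' into a regex with a named group."""
    parts = re.split(r"\{(\w+)\}", template)
    pattern = ""
    for i, part in enumerate(parts):
        # Even positions are literal text, odd positions are slot names.
        pattern += re.escape(part) if i % 2 == 0 else f"(?P<{part}>.+)"
    return re.compile(f"^{pattern}$")


def parse(request, definition=ORDER_CAB):
    """Match a request to the intent and report any slot still missing."""
    text = request.lower().strip().rstrip(".!?")
    for template in definition["utterances"]:
        match = _to_regex(template).match(text)
        if match:
            filled = match.groupdict()
            missing = [s for s in definition["slots"] if s not in filled]
            prompt = definition["slots"][missing[0]] if missing else None
            return {"intent": definition["intent"], "slots": filled, "prompt": prompt}
    return None  # no utterance matched this intent


print(parse("Order me a cab to Times Square"))
print(parse("I need a cab"))
```

Real assistants rely on trained language models rather than exact pattern matching, so the sketch only shows how intent, utterances, and slots relate to one another.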

5. Prototyping

When prototyping for VUIs, designers need to put their scriptwriter’s hat on and think about how they should structure a person’s interaction with the interface so that it satisfies the requirements mentioned above.

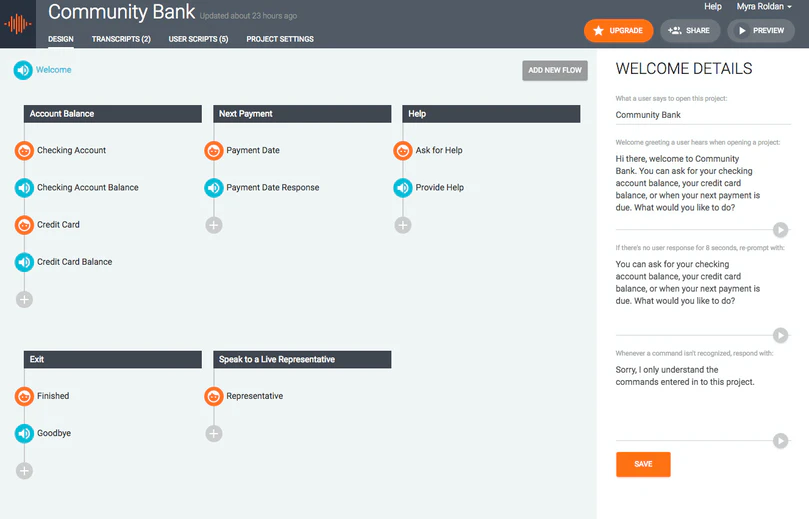

In this case, our prototype is called a dialog flow, and it has to describe the following aspects of the interaction:

- Keywords that trigger a particular interaction;

- Branches that outline the directions in which the conversation can go;

- Sample interactions for the user and the assistant.
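A dialog flow is usually drawn as a diagram, but the same three ingredients can be sketched as a small state machine. The `FLOW` table, its state names, and its prompts below are hypothetical and written only for this cab-booking illustration; a real prototype would cover many more branches and error cases.

```python
import re

# Hypothetical dialog flow: each state has the assistant's prompt,
# keyword-triggered branches, and an optional default next state.
FLOW = {
    "start": {
        "prompt": "What can I do for you?",
        "branches": {"cab": "ask_destination", "taxi": "ask_destination"},
    },
    "ask_destination": {
        "prompt": "Where would you like to go?",
        "branches": {},
        "default": "confirm",  # any answer is accepted as a destination
    },
    "confirm": {
        "prompt": "Shall I book the cab?",
        "branches": {"yes": "booked", "no": "start"},
    },
    "booked": {"prompt": "Your cab is on its way.", "branches": {}},
}


def next_state(state, utterance):
    """Follow the first branch whose keyword appears in the utterance."""
    node = FLOW[state]
    words = re.findall(r"[a-z']+", utterance.lower())
    for keyword, target in node["branches"].items():
        if keyword in words:
            return target
    # No keyword matched: use the default, or stay put and re-prompt.
    return node.get("default", state)


def run(utterances, state="start"):
    """Replay a sample interaction and collect the assistant's prompts."""
    transcript = [FLOW[state]["prompt"]]
    for utterance in utterances:
        state = next_state(state, utterance)
        transcript.append(FLOW[state]["prompt"])
    return state, transcript


final_state, transcript = run(["I need a cab", "Times Square", "Yes, please"])
print(final_state)
print(transcript)
```

Replaying sample interactions through a table like this is a cheap way to spot dead ends in the script before any speech technology is involved.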

6. Usability Testing

Like GUIs, VUIs need to go through rigorous usability evaluation. Many types of tests can surface usability issues in voice interfaces, such as Wizard of Oz testing, recruited usability studies, and recognition analysis.
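Recognition analysis compares what users actually said with what the system transcribed. One common way to quantify the gap is word error rate (WER); the article does not prescribe a metric, so treat the sketch below as one possible approach. The `word_error_rate` function and the sample sentences are written for this illustration.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # word missing from the transcript
                curr[j - 1] + 1,     # extra word in the transcript
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


said = "order me a cab to times square"
heard = "order me a cab to time square"
print(f"WER: {word_error_rate(said, heard):.2f}")
```

A lower score means the transcript is closer to what the user said. The number says nothing about whether the assistant understood the intent, so it complements the other test types rather than replacing them.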

Trends in Voice Interface

There is a broad spectrum of astounding developments happening in voice right now. Here are some of the hottest trends in the field:

- Voice assistants in mobile apps: products will gradually move to voice-first interfaces, and built-in voice assistance will become a feature most users expect.

- Outbound calls using smart interactive voice response software powered by natural language understanding technology: this combination is projected to replace agents in call centers by offering a powerful and flexible solution.

- Voice cloning: marketers, filmmakers, and a variety of other content producers will benefit from this technology, which makes synthesized speech more emotional, customizable, and human-like.

- Voice assistants in smart TVs: very soon, you won’t have to look for your remote control anymore. You’ll be able to browse channels, launch apps, and take total control of your television using only your voice.

Final Words

Voice tech grows more useful and promising by the day and is gradually becoming an important part of tomorrow’s user experience standards. Designers, researchers, and developers will see more of this exciting new field in the next few years as user adoption continues to grow.