Traditionally, entrepreneurs and intrapreneurs come up with wild, life-changing product ideas and then write a comprehensive, step-by-step business plan illustrating how that product should be developed. Hope and expectations for product performance remain high for months, if not years, until the riskiest assumption about the product is tested: Do customers really want it? Too often, the answer is no, and the time, money, sweat, and tears invested in the product amount to nothing.

With experiences like these, it’s easy to understand why product managers and entrepreneurs have begun taking a more iterative, “lean” approach to product development. Adopting modern methodologies with an emphasis on continuous customer feedback and experimentation enables product managers to avoid the rabbit hole of building something no one wants.

But rapidly adapting to user feedback can be difficult and tiresome. Product managers need a framework to process and analyze user engagement through scripted, controlled experiments. These experiments are evolutionary: each one changes intelligently based on the results of those before it.

Example: Optimizing book revenue

To illustrate “nimble experimentation,” let’s look at how a group of media experts, led by former Global Digital Director at Penguin Books Molly Barton, is using Alpha UX’s real-time user insights platform to discover opportunities for books to generate revenue aside from regular product purchases.

Instead of planning the product lifecycle from beginning to end based on Molly’s vision, they are relying on a robust framework for experimentation, beginning with customer discovery. Molly’s team acknowledged that most of their own opinions could be invalidated simply by talking to book readers about their consumption habits.

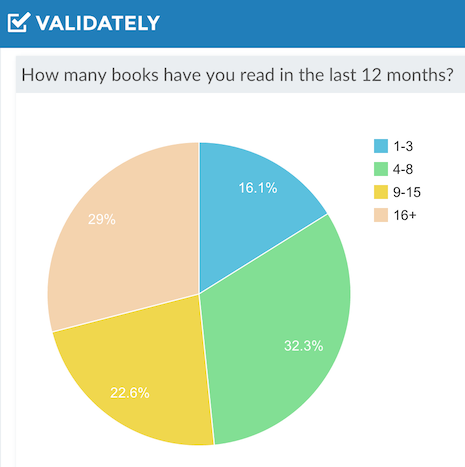

Combining Alpha UX with Validately’s tester recruiting capabilities, they generated feedback from more than 1,000 readers over a series of tests. They quickly learned that avid readers (those who purchase 16 or more books per year) were early adopters of e-book readers. If this were to hold true during additional experiments, it could have profound implications for a potential business opportunity; e-books allow for dynamic and varied digital advertising possibilities, in addition to actual book sales.

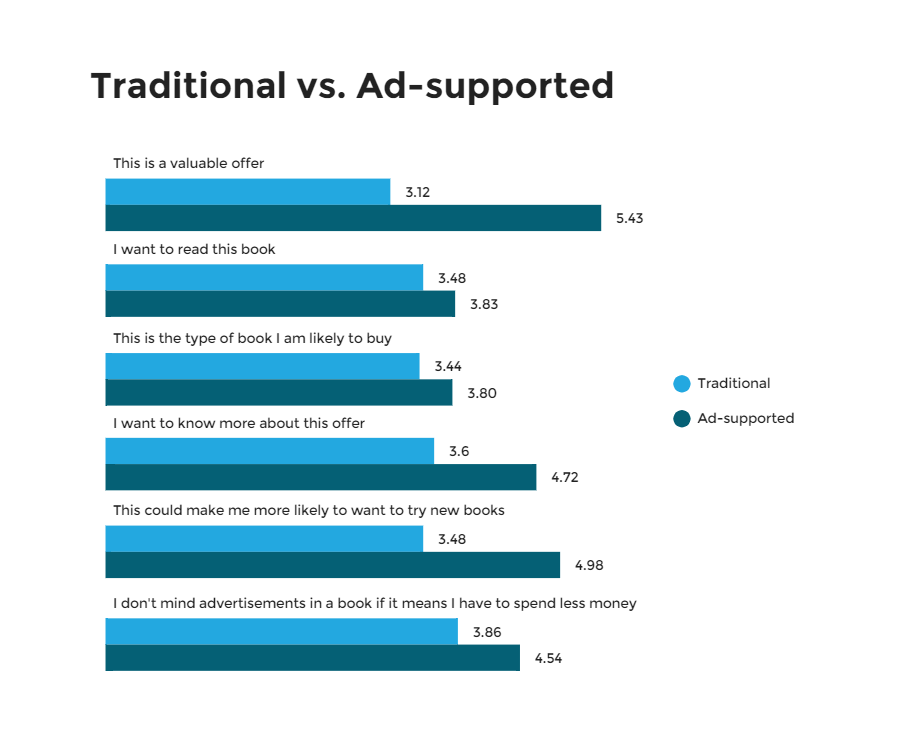

To substantiate these results, Molly and her colleagues asked readers about complementary audio recordings and commentary that would incentivize purchase. In a comparative test of each offering juxtaposed against a traditional book, they found that while complementary content helped sales, in-book ads offered the most potential revenue.

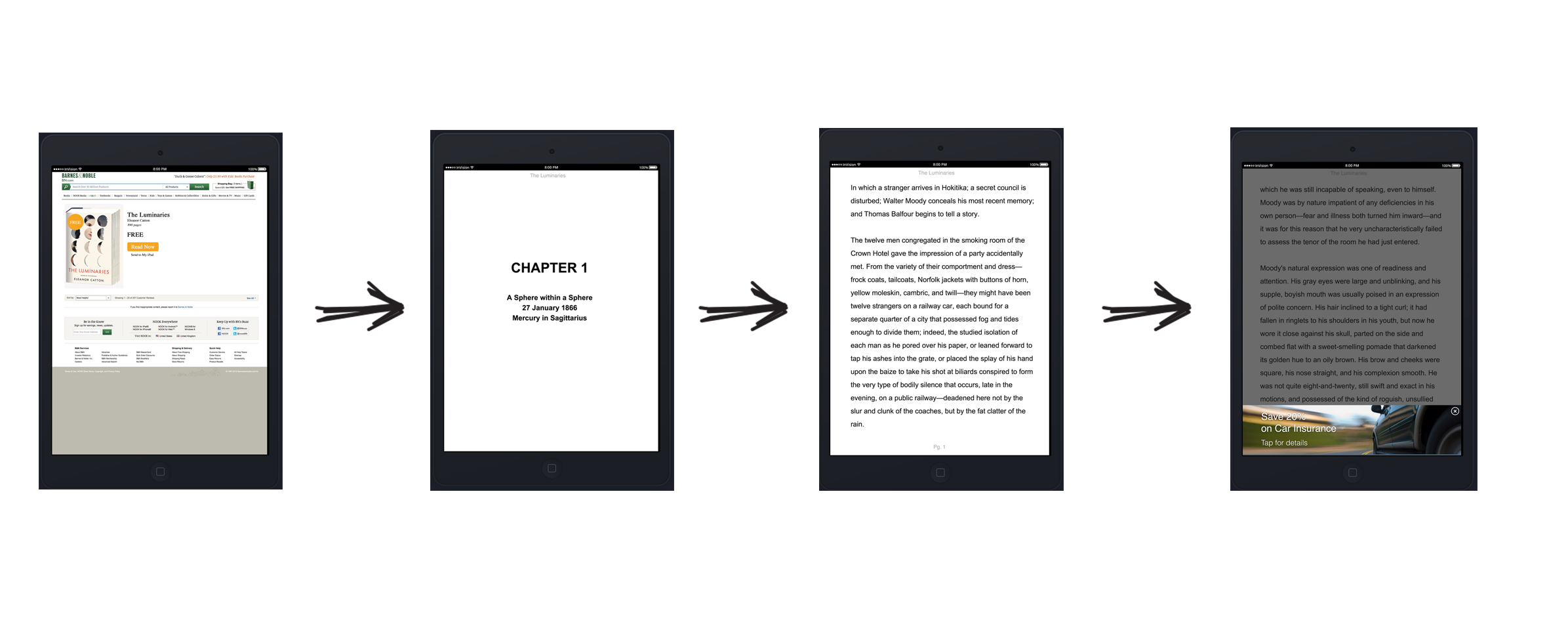

But product managers must be wary of the discrepancy between what consumers say and what they actually do. So, Molly split-tested InVision prototypes in Validately using two separate user groups.

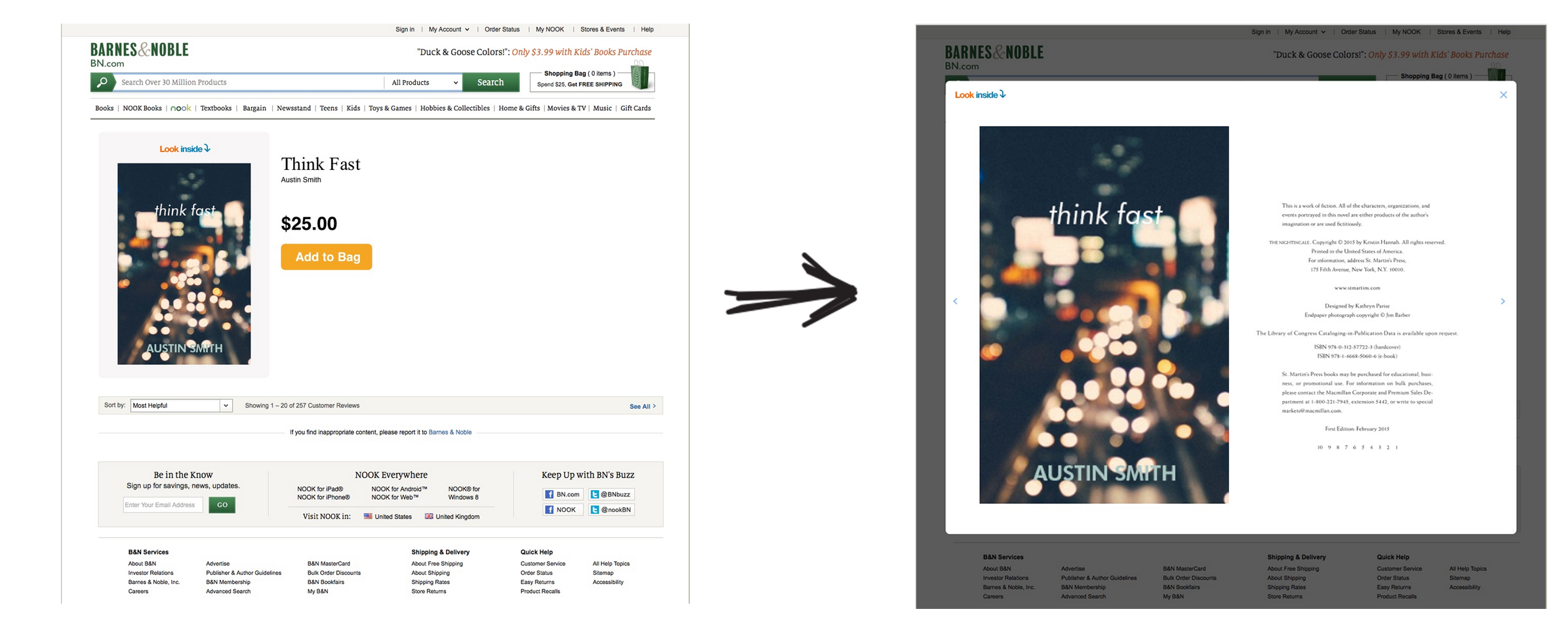

Prototype A included the usual landing page for a traditional e-book with a chapter preview. No ads were included.

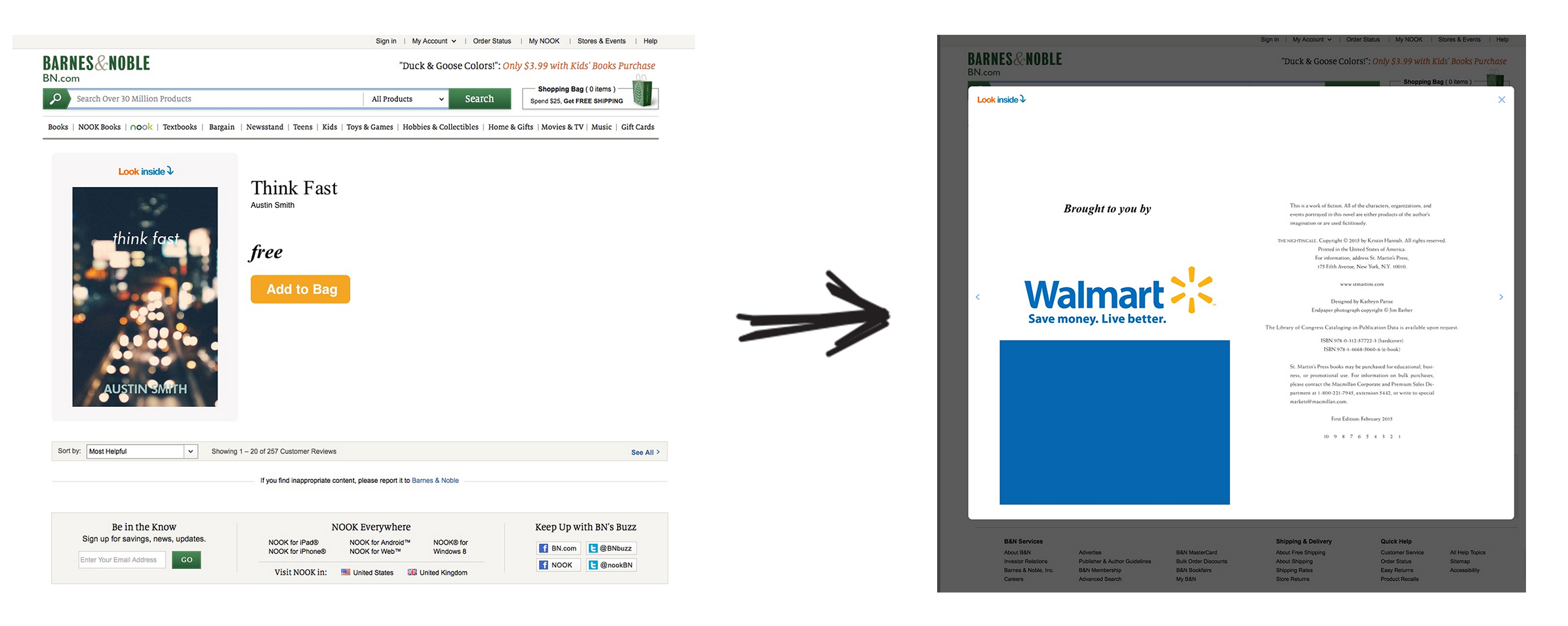

Prototype B highlighted that the book was free, but displayed an advertisement upon preview.

Each user group was asked to rate their agreement with a set of statements (similar to the ones in the graphic earlier) to measure their perception of value. By doing this, Molly gained insight from an apples-to-apples comparison of two e-book sales models. Overall, readers were more likely to select the ad-supported option. It should be noted, of course, that to compensate for lost sales from a free book offering, the included e-book advertising would realistically have to be more extensive than that illustrated in the split test.
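As a rough illustration of that apples-to-apples comparison, here is a minimal Python sketch that summarizes agreement ratings for two prototype groups. The 1-to-5 scale, the "agree" threshold of 4, the function name `summarize_agreement`, and all of the ratings are hypothetical; they are not Molly's data or her team's actual analysis.

```python
from statistics import mean


def summarize_agreement(ratings, agree_threshold=4):
    """Return (mean rating, share of ratings at or above agree_threshold)."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    agree_count = sum(1 for rating in ratings if rating >= agree_threshold)
    return mean(ratings), agree_count / len(ratings)


# Hypothetical 1-5 agreement ratings for one value statement, per user group.
prototype_a = [3, 4, 2, 5, 3, 4, 3, 2]  # paid e-book, no ads (made-up numbers)
prototype_b = [4, 5, 4, 3, 5, 4, 4, 2]  # free e-book with an ad (made-up numbers)

for name, ratings in (("A", prototype_a), ("B", prototype_b)):
    average, agree_share = summarize_agreement(ratings)
    print(f"Prototype {name}: mean rating {average:.2f}, {agree_share:.0%} agree")
```

Reporting both the mean and the share who agree helps guard against a handful of extreme ratings skewing the picture for either group.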

With these initial insights in hand, Molly built out more realistic prototypes like the one below that contained overlay ads designed to periodically interrupt the reading experience.

In both quantitative and qualitative experiments, and with multiple ad styles and variants, perceptions of value were not negatively impacted by the inclusion of these ads.


While numerous insights have been generated from the various tests and a narrative is beginning to unfold, the data still doesn’t tell the whole story. Molly’s group now seeks answers to a myriad of questions: What impact does this advertising have on readers’ perception of the book’s author? Will this advertising make it more difficult for the author to sell full-price books? What are the limitations of in-book ads? At what point do these ads negatively affect perception of value? The list of questions goes on and on, and the best way to find answers is to employ an iterative, or evolutionary, experimental approach. Remember: this can all be done without writing a single line of code.

A Framework for Iterative Experimentation

As was the case for Molly and her book experiment, interpreting user feedback as it becomes available and incorporating it into ongoing testing can help product managers align product decisions with user perspective. If Molly had committed to completing a series of pre-defined corporate strategies without validating her riskiest assumptions through testing, she would likely have missed out on lucrative advertising opportunities.

So how does a product manager begin to develop iterative experiments? Any framework should stay flexible, but this is the standard one we often prescribe:

1. Be mindfully nimble.

Half the challenge of refining a product direction is a mental battle. It’s important not to invest too heavily in one strategy early on, and to allow for the possibility that user feedback will invalidate the concept. Product teams should validate numerous hypotheses to mitigate risk before devoting development resources to a product or feature.

2. Prioritize the questions that lead to the most valuable product insights.

We often tell our clients to prioritize their experiment questions according to the importance of potential user answers. If you could unlock any metric with user insight, which one would influence your decision-making the most? The first question should focus on the problem or need: Is it real or not? What does it look like?

3. Gather self-reported data to determine follow-up experiments.

Self-reported answers are not entirely reliable for predicting future behavior, but asking target users about their current problems and behaviors can open the door to future experiments.

4. Gather behavioral data through observation.

By running behavioral experiments, you can substantiate users’ self-reported survey results. In this phase, utilize prototypes that introduce specific features or value propositions. Split-testing these prototypes and parsing resulting data can help answer some of the trickiest questions about how the product should function or what it should look like.

5. Validate and optimize.

After you’ve validated demand for a specific value proposition, work toward optimizing the user experience and feature roadmap. Continue mitigating risk by testing more advanced and realistic prototypes until it makes sense to invest resources in building the product.

6. Build standing tests.

As you complete your first round of iterative experimentation, develop standing tests to be executed whenever new product decisions need to be made. These tests will produce meaningful data that can be used to evaluate and benchmark each new product idea.
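For the behavioral split tests described in step 4, one common way to check whether a difference between two prototypes is more than noise is a two-proportion z-test. The sketch below is a minimal illustration under stated assumptions: the function name `two_proportion_z_test` and the counts are hypothetical, and the article does not say which statistical method, if any, Molly's team or the testing platforms actually use.

```python
from math import erfc, sqrt


def two_proportion_z_test(successes_a, total_a, successes_b, total_b):
    """Return (z statistic, two-sided p-value) for rate B versus rate A."""
    if total_a <= 0 or total_b <= 0:
        raise ValueError("both groups need at least one participant")
    rate_a = successes_a / total_a
    rate_b = successes_b / total_b
    # Pooled rate under the null hypothesis that both prototypes perform equally.
    pooled = (successes_a + successes_b) / (total_a + total_b)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if standard_error == 0:
        raise ValueError("no variation in outcomes; the test is undefined")
    z = (rate_b - rate_a) / standard_error
    # Two-sided p-value from the standard normal distribution.
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


# Hypothetical counts: testers who chose to keep reading after the preview.
z, p_value = two_proportion_z_test(40, 100, 60, 100)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A helper like this could be reused as part of a standing test, so that each new prototype comparison is judged against the same yardstick. With small tester groups, treat the result as directional rather than conclusive.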

Image of improve button courtesy Shutterstock.