More and more voice-controlled devices, such as the Apple HomePod, Google Home, and Amazon Echo, are storming the market. Voice user interfaces are helping to improve all kinds of different user experiences, and some believe that voice will power 50% of all searches by 2020.

Voice-enabled AI can take care of almost anything in an instant.

- “What’s next in my Calendar?”

- “Book me a taxi to Oxford Street.”

- “Play me some Jazz on Spotify!”

All of the “Big Five” tech companies (Microsoft, Google, Amazon, Apple, and Facebook) have developed, or are currently developing, voice-enabled AI assistants. Siri, the AI assistant for Apple iOS and HomePod devices, is helping more than 40 million users per month, and according to ComScore, one in 10 households in the US already owns a smart speaker today.

Whether we’re talking about VUIs (Voice User Interfaces) for mobile apps or for smart home speakers, voice interactions are becoming more common in today’s technology, especially since screen fatigue is a concern.

Echo Spot is Amazon’s latest smart speaker that combines a VUI with a GUI, comparable to the Echo Show.

What Can Users Do with Voice Commands?

Alexa is the AI assistant for voice-enabled Amazon devices like the Echo smart speaker and Kindle Fire tablet—Amazon is currently leading the way with voice technology (in terms of sales).

On the Alexa store, some of the trendiest apps (called “skills”) are focused on entertainment, translation, and news, although users can also perform actions like requesting a ride via the Uber skill, playing music via the Spotify skill, or even ordering a pizza via the Domino’s skill.

Another interesting example comes from the commercial bank Capital One, which introduced an Alexa skill in 2016 and was the first bank to do so. By adding the Capital One skill via Alexa, customers can check their balance and due dates and even settle their credit card bills. PayPal took the concept a step further by allowing users to make payments via Siri on either iOS or the Apple HomePod, and there’s also an Alexa skill for PayPal that can accomplish this.

But what VUIs can do, and what users are actually using them for, are two different things.

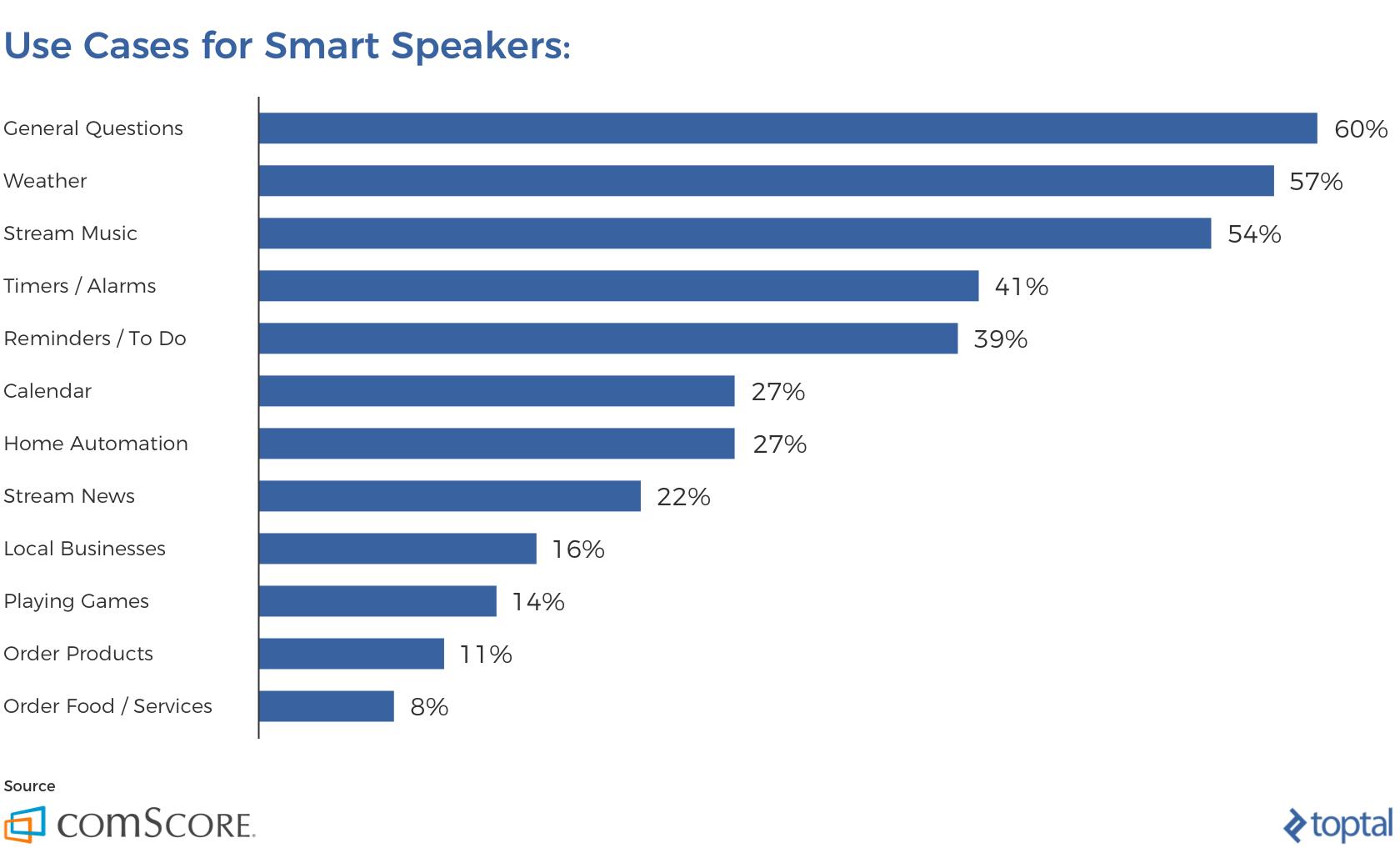

ComScore stated that over half of the users who own a smart speaker use their device for asking general questions, checking the weather, and streaming music, closely followed by managing their alarm, to-do list, and calendar (note that these tasks are fairly basic by nature).

As you can see, a lot of these tasks involve asking a question (i.e., voice search).

Smart speaker usage in the US according to ComScore.

What Do Users Search for with Voice Search?

People mostly use voice search when driving, although any situation where the user isn’t able to touch a screen (e.g., when cooking or exercising, or when trying to multitask at work) offers an opportunity for voice interactions. Here’s the full breakdown by HigherVisibility.

Real-time traffic updates are becoming a lot easier while driving thanks to Google Assistant and Android Auto.

Conducting User Research for Voice User Interfaces

While it’s useful to know how users are generally using voice, it’s important for UX designers to conduct their own user research specific to the VUI app that they’re designing.

Customer Journey Mapping

User research is about understanding the needs, behaviors, and motivations of the user through observation and feedback. A customer journey map that includes voice as a channel can not only help user experience researchers identify the needs of users at the various stages of engagement, but it can also help them see how and where voice can be a method of interaction.

If no customer journey map exists yet, the designer should highlight where voice interactions would factor into the user flow (this could be highlighted as an opportunity, a channel, or a touchpoint). If a customer journey map already exists for the business, then designers should see if the user flow can be improved with voice interactions.

For example, if customers are always asking a certain question via social media or live support chat, then maybe that’s a conversation that can be integrated into the voice app.

In short, the design should solve problems. What frictions and frustrations do users encounter during a customer journey?

VUI Competitor Analysis

Through competitor analysis, designers should try to find out if and how competitors are implementing voice interactions. The key questions to ask are:

- What’s the use case for their app?

- What voice commands do they use?

- What are customers saying in the app reviews and what can we learn from this?

Requirements Gathering

In order to design a voice user interface app, we first need to define the users’ requirements. Aside from creating a customer journey map and conducting competitor analysis (as mentioned above), other research activities such as interviewing and user testing can also be useful.

For VUI design, these written requirements are all the more important since they will encompass most of the design specs for developers. The first step is to capture the different scenarios before turning them into a conversational dialog flow between the user and the voice assistant.

An example user story for a news application could be:

“As a user, I want the voice assistant to read the latest news articles so that I can be updated about what’s happening without having to look at my screen.”

With this user story in mind, we can then design a dialog flow for it.

The Anatomy of a Voice Command

Before a dialog flow can be created, designers first need to understand the anatomy of a voice command. When designing VUIs, designers constantly need to think about the objective of the voice interactions (i.e., What is the user trying to accomplish in this scenario?).

A user’s voice command consists of three key factors: the intent, the utterance, and the slot.

Let’s analyze the following request: “Play some relaxing music on Spotify.”

Intent (the Objective of the Voice Interaction)

The intent represents the broader objective of a user’s voice command, and this can be either a low utility or high utility interaction.

A high utility interaction is about performing a very specific task, such as requesting that the lights in the sitting room be turned off, or that the shower be a certain temperature. Designing these requests is straightforward since it’s very clear what’s expected from the AI assistant.

Low utility requests are vaguer and harder to decipher. For example, if the user wanted to hear more about Amsterdam, we’d first want to check whether or not this fits into the scope of the service and then ask the user more questions to better understand the request.

In the given example, the intent is evident: The user wants to hear music.

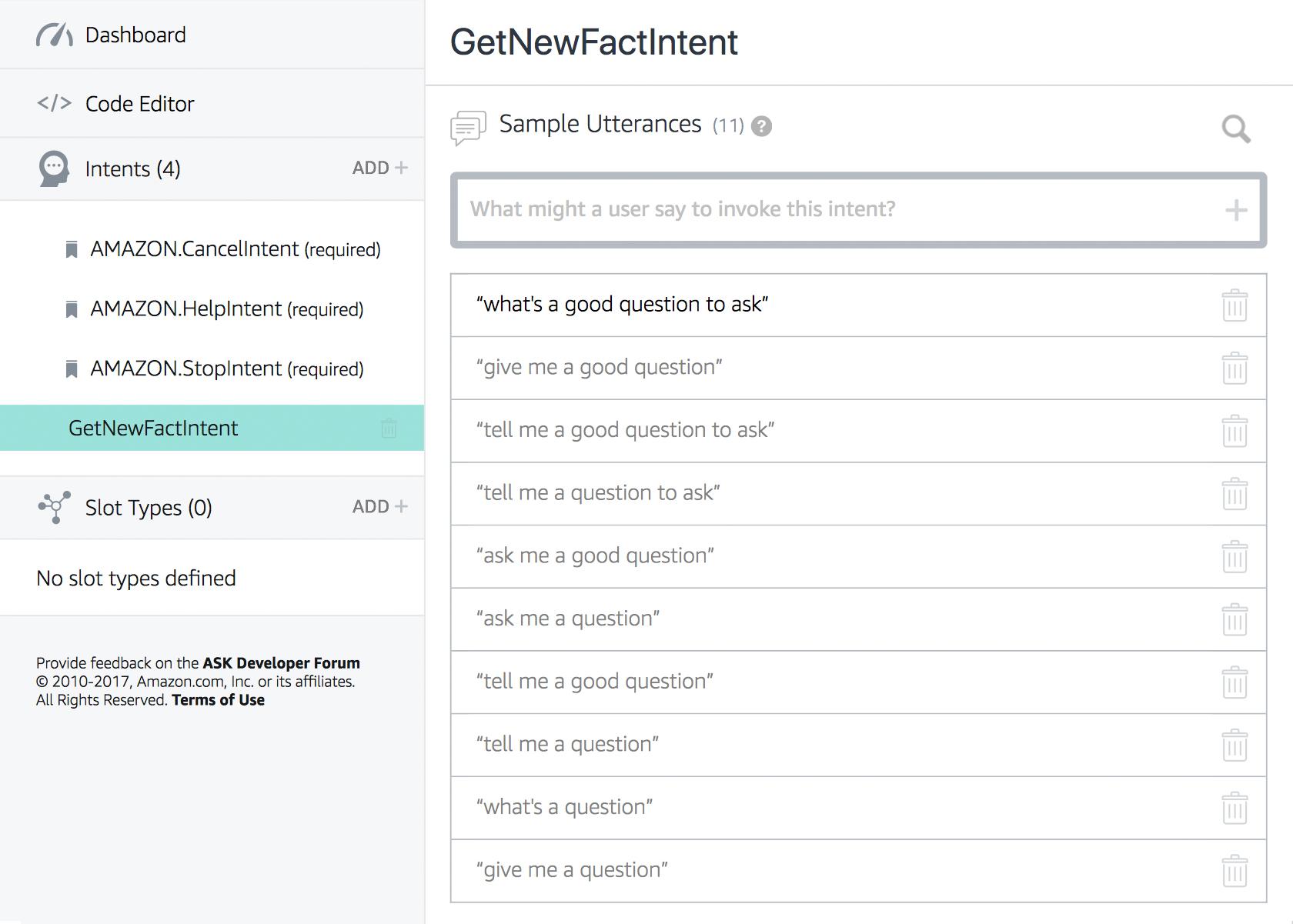

Utterance (How the User Phrases a Command)

An utterance reflects how the user phrases their request. In the given example, we know that the user wants to play music on Spotify by saying “Play some…,” but this isn’t the only way that a user could make this request. For example, the user could also say, “I want to hear music…”

Designers need to consider every variation of utterance. This will help the AI engine to recognize the request and link it to the right action or response.
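To make the idea of utterance variations concrete, here is a minimal sketch that maps several phrasings to one intent. The intent name `PlayMusic`, the sample phrases, and the helper functions are all hypothetical, and the literal substring matching is a deliberate simplification; real assistants rely on trained language models rather than exact phrase lookups.

```python
import re
from typing import Optional

# Hypothetical sample utterances for a hypothetical "PlayMusic" intent.
SAMPLE_UTTERANCES = {
    "PlayMusic": [
        "play some music",
        "play me some music",
        "i want to hear music",
        "put on some music",
    ],
}


def normalize(text: str) -> str:
    """Lowercase the text and drop punctuation so phrasing variants compare equal."""
    return re.sub(r"[^a-z0-9 ]", "", text.lower()).strip()


def match_intent(spoken: str) -> Optional[str]:
    """Return the first intent with a sample utterance contained in the spoken text."""
    cleaned = normalize(spoken)
    for intent, utterances in SAMPLE_UTTERANCES.items():
        if any(utterance in cleaned for utterance in utterances):
            return intent
    return None  # no sample phrase matched
```

With these samples, “Play me some music, please” and “I want to hear music!” both resolve to the same intent. A phrase such as “Play some relaxing music on Spotify” would not match, because the extra word “relaxing” breaks the literal comparison; this is one reason a list of fixed phrases is never enough on its own.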

Slots (the Required or Optional Variables)

Sometimes an intent alone is not enough and more information is required from the user in order to fulfill the request. Alexa calls this a “slot,” and slots are like traditional form fields in the sense that they can be optional or required, depending on what’s needed to complete the request.

In our case, the slot is “relaxing,” but since the request can still be completed without it, this slot is optional. However, in the case that the user wants to book a taxi, the slot would be the destination, and it would be required. Optional inputs override any default values; for example, a user requesting a taxi to arrive at 4 p.m. would override the default value of “as soon as possible.”
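The required-versus-optional behavior can be sketched in a few lines. This is an illustration only: the `Slot` class, the `BookTaxi` slot list, and `fill_slots` are hypothetical names, not part of any assistant’s SDK.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Slot:
    name: str
    required: bool
    default: Optional[str] = None  # used only when an optional slot is omitted


# Hypothetical slot definitions for a hypothetical "BookTaxi" intent.
BOOK_TAXI_SLOTS = [
    Slot("destination", required=True),
    Slot("pickup_time", required=False, default="as soon as possible"),
]


def fill_slots(slots, provided):
    """Merge user-provided values with defaults and list required slots still missing."""
    filled, missing = {}, []
    for slot in slots:
        if slot.name in provided:
            filled[slot.name] = provided[slot.name]  # user input overrides the default
        elif slot.required:
            missing.append(slot.name)  # the assistant must ask a follow-up question
        else:
            filled[slot.name] = slot.default
    return filled, missing
```

Calling `fill_slots(BOOK_TAXI_SLOTS, {"destination": "Oxford Street"})` fills the pickup time with its default, while a request that only names a pickup time comes back with `destination` in the missing list, which signals that the assistant needs to ask for it.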

Prototyping VUI Conversations with Dialog Flows

When prototyping, designers need to think like scriptwriters and design dialog flows for each of these requirements. A dialog flow is a deliverable that outlines the following:

- Keywords that lead to the interaction

- Branches that represent where the conversation could lead to

- Example dialogs for both the user and the assistant

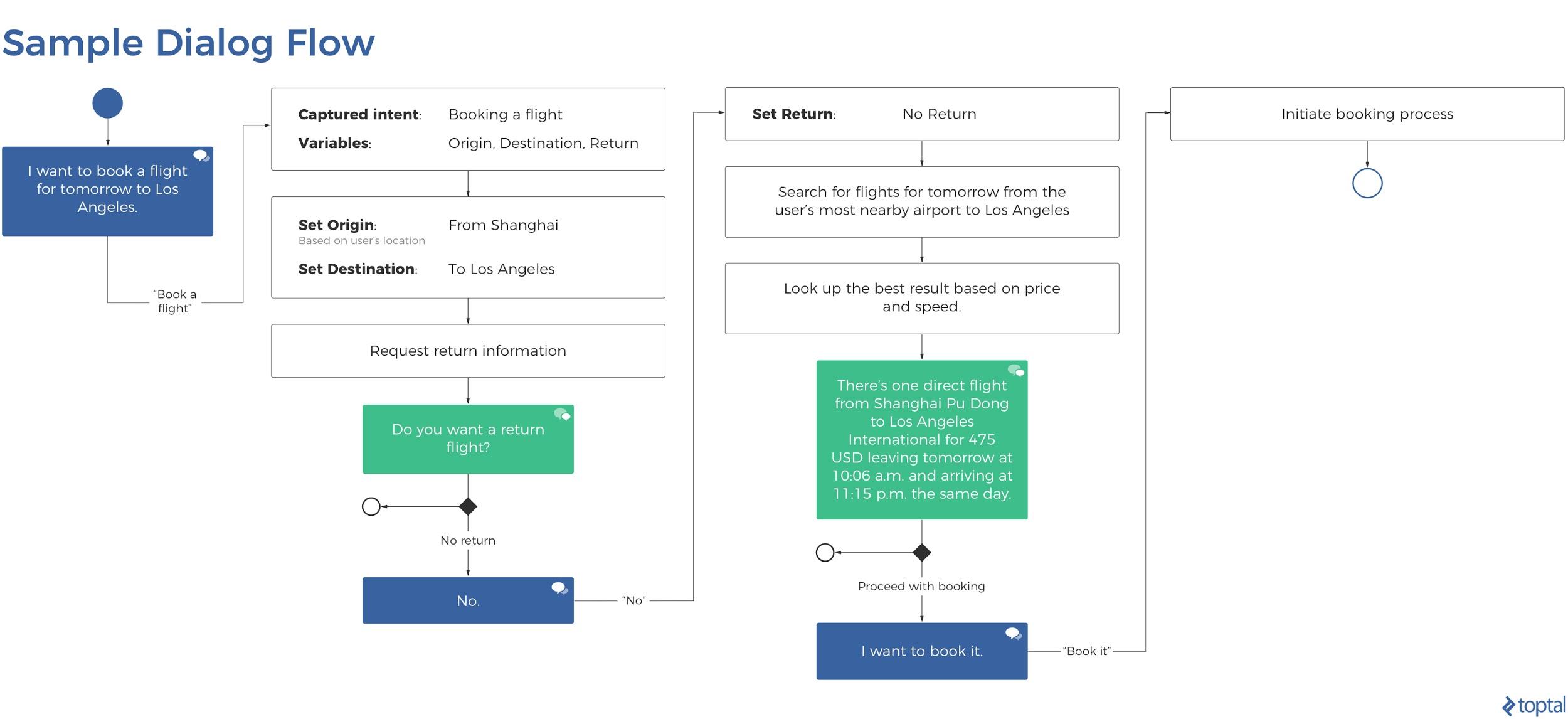

A dialog flow is a script that illustrates the back-and-forth conversation between the user and the voice assistant. It works like a prototype, and it can be drawn as an illustration (as in the example shown here) or built with a prototyping app.

A sample dialog flow illustrating the intent, slot, and overall conversation.
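A dialog flow can also be written down as plain data before it is drawn or prototyped. The sketch below uses the news user story from earlier; the node names, keywords, and assistant lines are invented for illustration, and the keyword matching is intentionally naive.

```python
# A hypothetical dialog flow for the news user story, expressed as plain data.
# Each node holds an example assistant line and the user keywords that branch onward.
NEWS_FLOW = {
    "start": {
        "assistant": "Would you like the headlines or a specific topic?",
        "branches": {"headlines": "read_headlines", "topic": "ask_topic"},
    },
    "ask_topic": {
        "assistant": "Which topic are you interested in?",
        "branches": {},  # any reply here would fill a topic slot
    },
    "read_headlines": {
        "assistant": "Here are today's top stories.",
        "branches": {},
    },
}


def next_node(flow, current, user_reply):
    """Follow the first branch whose keyword appears in the reply; otherwise stay put."""
    reply = user_reply.lower()
    for keyword, target in flow[current]["branches"].items():
        if keyword in reply:
            return target
    return current  # no keyword matched, so the assistant should reprompt
```

Writing the flow this way makes the three ingredients explicit: the keywords are the branch keys, the branches are the links between nodes, and the example dialogs are the assistant lines together with the replies used to test them.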

Apps for Prototyping VUIs

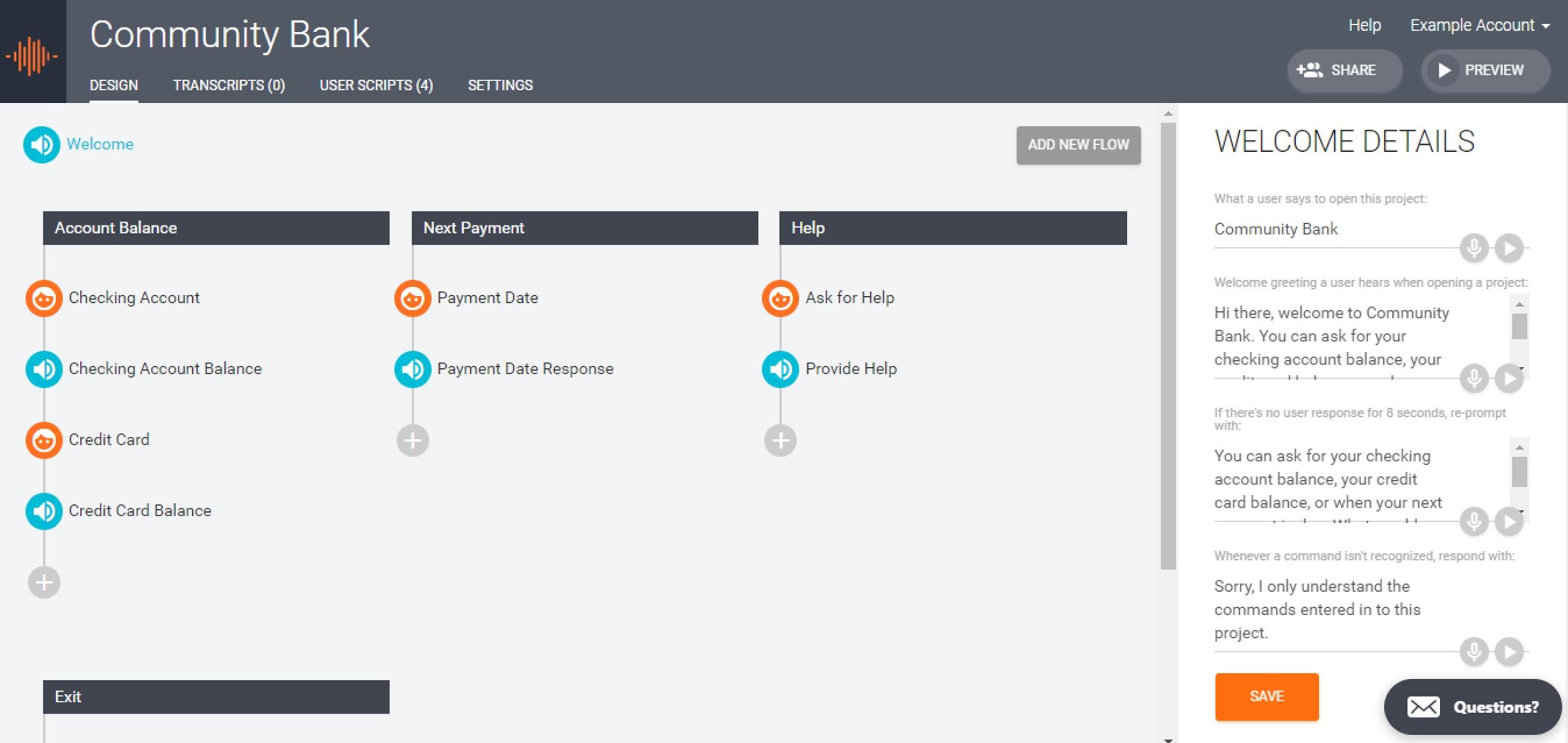

Once you’ve mapped out the dialog flows, you’re ready to prototype the voice interactions using an app. A few prototyping tools have entered the market already; for example, Sayspring makes it easy for designers to create a working prototype for voice-enabled Amazon and Google apps.

Sayspring is a tool that makes it easy to prototype an Alexa Skill or Google Home Action.

Amazon also offers its own Alexa Skill Builder, which makes it easy for designers to create new Alexa Skills. Google offers an SDK; however, this is aimed at Google Action developers. Apple offers SiriKit, which lets developers connect their apps to Siri, but it hasn’t launched a comparable prototyping tool for designers yet.

Amazon’s Alexa Skill Builder, where designers can prototype VUIs for Alexa-enabled devices.

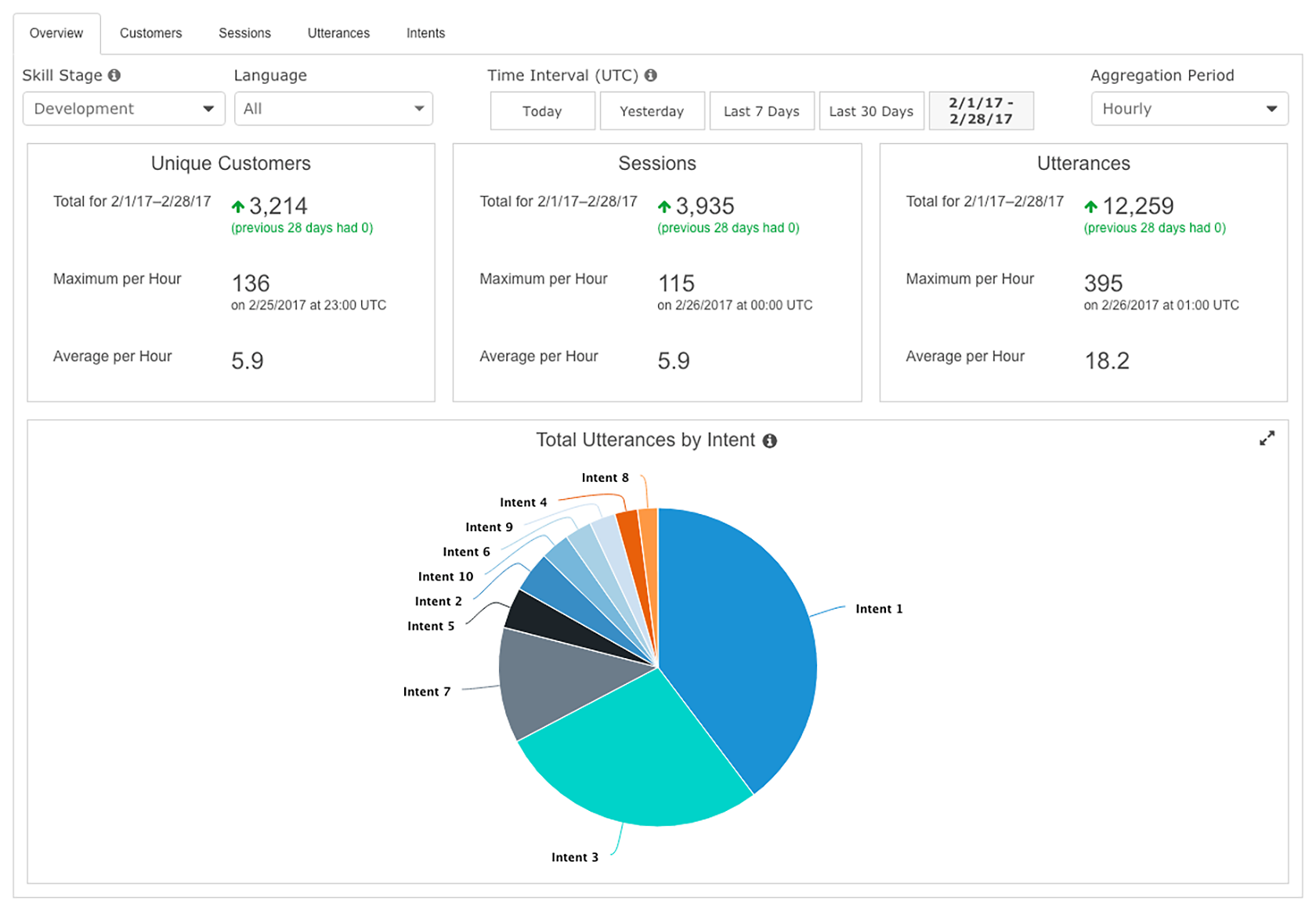

UX Analytics for Voice Apps

Once you’ve rolled out a “skill” for Alexa (or an “action” for Google), you can track how the app is being used with analytics. Both companies offer a built-in analytics tool; however, you can also integrate a third-party service for more elaborate analytics (such as voicelabs.co for Amazon Alexa, or dashbot.io for Google Assistant). Some of the key metrics to keep an eye out for are:

- Engagement metrics, such as sessions per user or messages per session

- Languages used

- Behavior flows

- Messages, intents, and utterances

Amazon’s Alexa Metrics Dashboard shows metrics such as sessions, utterances, and intents.
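The engagement metrics above are simple to compute if you have raw interaction data. The following sketch assumes a hypothetical log with one `(user_id, session_id)` pair per message and session IDs that are unique across users; the built-in dashboards and third-party services will use their own data formats.

```python
from collections import defaultdict

# Hypothetical interaction log: one (user_id, session_id) pair per message.
LOG = [
    ("u1", "s1"), ("u1", "s1"), ("u1", "s2"),
    ("u2", "s3"), ("u2", "s3"), ("u2", "s3"),
]


def engagement_metrics(log):
    """Compute average sessions per user and average messages per session."""
    sessions_by_user = defaultdict(set)
    messages_by_session = defaultdict(int)
    for user_id, session_id in log:
        sessions_by_user[user_id].add(session_id)
        messages_by_session[session_id] += 1
    if not messages_by_session:  # empty log: avoid dividing by zero
        return {"sessions_per_user": 0.0, "messages_per_session": 0.0}
    total_sessions = len(messages_by_session)
    return {
        "sessions_per_user": total_sessions / len(sessions_by_user),
        "messages_per_session": len(log) / total_sessions,
    }
```

For the sample log, two users share three sessions and six messages, so the function reports 1.5 sessions per user and 2.0 messages per session.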

Practical Tips for VUI Design

Keep the Communication Simple and Conversational

When designing mobile apps and websites, designers have to think about what information is primary, and what information is secondary (i.e., not as important). Users don’t want to feel overloaded, but at the same time, they need enough information to complete their tasks.

With voice, designers have to be even more careful because words (and maybe a relatively simple GUI) are all that there is to communicate with, which makes conveying complex information and data especially difficult. Fewer words are better, and designers need to make sure that the app fulfills the users’ objective and stays strictly conversational.

Confirm When a Task Has Been Completed

When designing an eCommerce checkout flow, one of the key screens will be the final confirmation. This lets the customer know that the transaction has been successfully recorded.

The same concept applies to VUI design. For example, if a user were in the sitting room asking their voice assistant to turn off the lights in the bathroom, without confirmation, they’d need to walk into the bathroom and check, defeating the object of a “hands-free” VUI app entirely.

In this scenario, a “Bathroom lights turned off” response will do fine.

Create a Strong Error Strategy

As a VUI designer, it’s important to have a strong error strategy. Always design for the scenario where the assistant doesn’t understand or doesn’t hear anything at all. Analytics can also be used to identify wrong turns and misinterpretations so that the error strategy can be improved.

Some of the key questions to ask when checking for alternate dialogs:

- Have you identified the objective of the interaction?

- Can the AI interpret the information spoken by the user?

- Does the AI require more information from the user in order to fulfill the request?

- Are we able to deliver what the user has asked for?
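One possible shape for such an error strategy is sketched below. It covers the two failure cases mentioned above (nothing heard, and something heard but not understood) and stops reprompting after a fixed number of attempts. The limit, the function name, and the wording of the replies are assumptions for illustration.

```python
MAX_ATTEMPTS = 2  # hypothetical limit before the assistant gives up gracefully


def error_response(heard: str, attempt: int) -> str:
    """Pick a reply for input the assistant could not act on.

    `heard` is the transcribed text ("" if nothing was heard), and `attempt`
    counts the failed tries so far, starting at 1.
    """
    if attempt > MAX_ATTEMPTS:
        # Stop looping and end the exchange instead of frustrating the user.
        return "Sorry, I can't help with that right now. Please try again later."
    if not heard.strip():
        return "I didn't hear anything. What would you like to do?"
    # Something was said but not understood: offer an example the app supports.
    return "Sorry, I didn't understand that. You can say, for example, 'read the news'."
```

Keeping the reprompts distinct helps the user understand what went wrong, and capping the attempts avoids an endless loop of “Sorry, I didn’t get that.”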

Add an Extra Layer of Security

Google Assistant, Siri, and Alexa can now recognize individual voices, which adds a layer of security similar to Face ID or Touch ID. Voice recognition software is constantly improving, and voices are becoming harder and harder to imitate; however, at this moment in time, voice recognition alone may not be secure enough. When working with sensitive data, especially personal messaging and payments, designers may need to include an extra authentication step such as a fingerprint, password, or face recognition.
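As a rough sketch of how such a rule might be expressed, the function below treats a voice match as necessary but not sufficient for sensitive intents. The intent names and the allow/challenge/deny policy are assumptions made for this example, not a description of how any assistant actually works.

```python
# Hypothetical set of intents that touch sensitive data.
SENSITIVE_INTENTS = {"MakePayment", "ReadMessages"}


def authorize(intent: str, voice_matched: bool, second_factor_ok: bool = False) -> str:
    """Return "allow", "challenge", or "deny" for a requested intent."""
    if intent not in SENSITIVE_INTENTS:
        return "allow"  # routine requests need no extra checks in this sketch
    if not voice_matched:
        return "deny"  # an unrecognized voice never reaches sensitive data
    # Recognized voice: still require a fingerprint, password, or face check.
    return "allow" if second_factor_ok else "challenge"
```

Under this policy, a recognized user asking to make a payment is first challenged for a second factor, and the request goes through only once that check succeeds.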

Baidu’s Duer voice assistant is used in several KFC restaurants and uses face recognition to make meal suggestions based on age or previous orders.

The Dawn of the VUI Revolution

VUIs are here to stay and will be integrated into more and more products in the coming years. Some predict that within 10 years we will no longer use keyboards to interact with computers.

Still, when we think “user experience,” we tend to think about what we can see and touch. As a consequence, voice as a method of interaction is rarely considered. However, voice and visuals are not mutually exclusive when designing user experiences—they both add value.

User research needs to answer the question of whether or not voice will improve the UX. Considering how quickly the market share for voice-enabled devices is rising, doing this research could be well worth the time and could significantly increase the value and quality of an app.