As if AI-generated “user research” wasn’t bad enough, now I am seeing designers advocate using LLMs to generate UI content, forgetting the most important design adage: “form follows function.” In digital products, that function is not clicking the button but *accessing the content.*

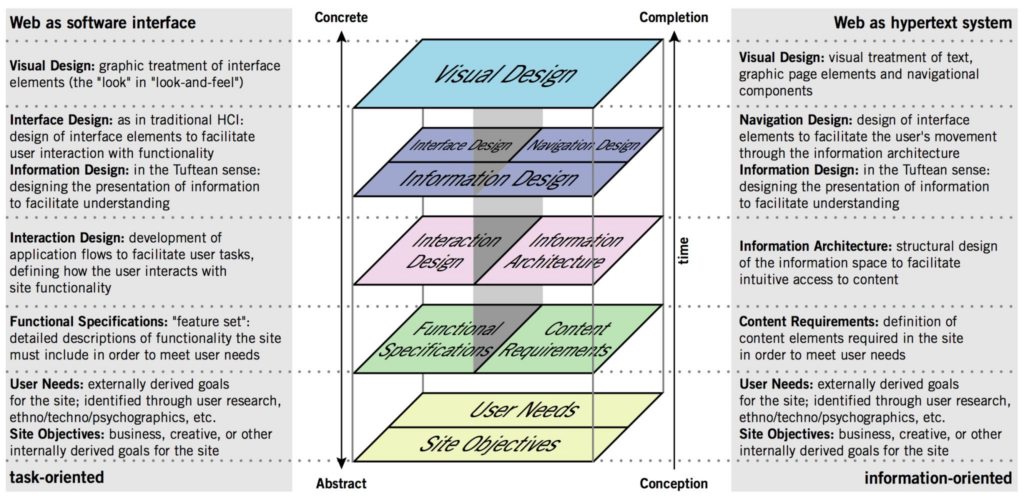

All the way back in 2002, Jesse James Garrett created this incredible model, which I use frequently to structure my work and to show junior designers how much depth they need to consider. Twenty-two years after it was published, few of them even know what a “hypertext system” is, and they envision their work exclusively as a software interface.

Without both of these pillars, the software interface and the hypertext system, your UX design will fall over.

Some UXers understand this partially, but they think the interface is “more important” and the content can come afterward. These are the people who champion ChatGPT as a great alternative to Lorem Ipsum. And they repeat the same mantra as every designer who invites AI into their workflow: “It’s just for now, we will fix it later.”

You will *not* be able to fix it later.

The elements you are generating are *foundational* to the way the product is used. They are not a layer stacked on top of the interface. If you decide to outsource your thinking about the hypertext system, redoing it will require ripping out the guts of the software interface layers you have built in parallel.

The content – not the beautiful scroll animations – is the thing people use your product for. It is not lesser than the interface. It is not an afterthought. It even has its own set of roles (content design, UX writing, information architecture, etc.).

The content is the scaffolding of the experience. When you minimize it, it is only to your own detriment.