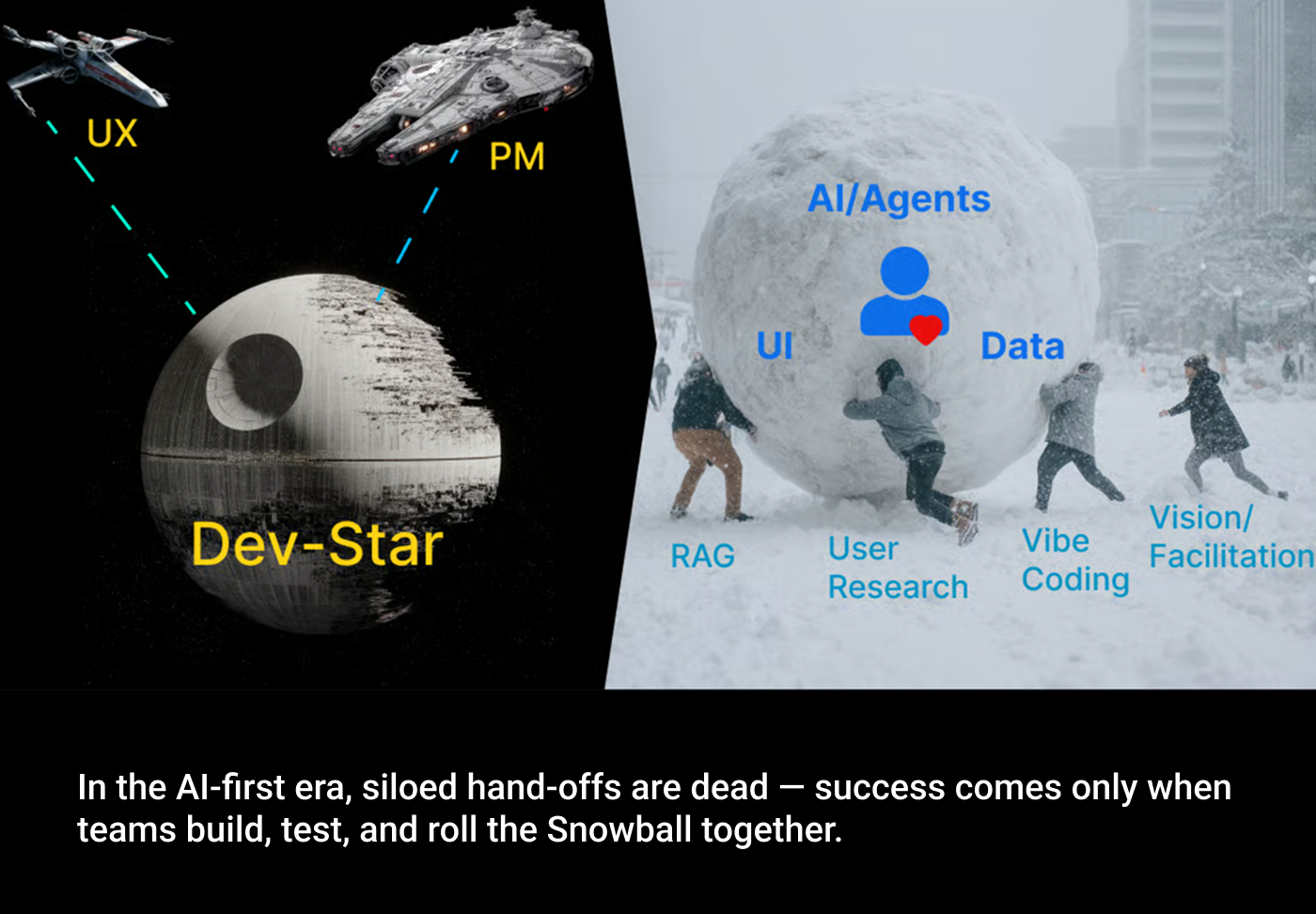

3-in-a-box is dead — AI killed it

For decades, product teams ran on a “3-in-a-box” model: a PM, a Dev, and a UXer working in sequence, handing off deliverables like runners in a relay race. In an AI-first era, that approach is DOA because Devs create a Dev-Star: nothing useful gets in and nothing valuable comes out, until one day a massive release of hot air and twisted space junk explodes in everyone’s face. We end up with late, bloated AI features that miss the mark.

Enough. It’s time to blow up the Dev-Star and roll a Snowball instead.

Why does the old hand-off model fail so spectacularly now? Because AI changed the rules:

- You can’t spec magic upfront: AI-driven systems are trained, not programmed. Their behavior is probabilistic and emergent — you can’t predict how an AI feature will respond until you build it.

- UI is no longer the UX: many successful AI products have a minimal interface — sometimes just a text box and some output. A Figma mockup can’t capture an LLM’s personality or the flexibility of an AI Agent’s decisions.

- Waiting for clarity = waiting forever: in AI-first development, clarity only comes from building + watching real users. If you insist on perfect requirements before coding, you’ll never ship — while your competitors learn and launch.

Hand-off “telephone pictionary” kills momentum

The old-world relay race (research → design → PM → dev) was fine when you were building deterministic features. In AI-first development, it’s a disaster. Nuance gets lost, assumptions drift, and by the time dev ships, you’ve got something no one recognizes.

It’s exactly like a game of “Telephone Pictionary” — what starts as “His heart stopped when she came into the room” somehow mutates into “Video killed the radio star.”

That’s the hand-off problem in one meme. Each step adds distortion until the final output is convenient but wrong.

Hilarious for onlookers.

Not nearly so funny for your company (and your career).

(And that’s why this post is called “Snowball Killed the Dev-Star.” Just like “Video Killed the Radio Star,” it’s my tongue-in-cheek way of saying: the old era is gone. The hand-off-heavy Dev-Star model is obsolete. The only way forward is rolling the Snowball together.)

The worst part: customers are reduced to choosing rain spouts

Here’s what happens when you cling to Figma-first thinking: users are asked to evaluate surface decoration while the actual AI behavior stays hidden.

“Here are two AI Solutions, A and B. Which do you like better?”

That’s what wireframe testing has become. Rain spout design. Dragon or Gargoyle? While your AI plumbing empties into the elevator shaft.

Sure, you’ll get lots of opinions about which rain spout looks cooler. But the heart of the product — the AI’s decisions, the data flows, the trust dynamics — remains a black box. Users can’t meaningfully respond to that, so we confuse shallow preferences with deep validation.

It reduces UX research to a macaroni-and-glitter project: “Do you like it, Mom? You like it, right?”

This is unacceptable.

The fix is obvious: get customers interacting with live AI outputs — vibe-coded prototypes, Snowball iterations, real data-driven behaviors. That’s how you surface insights that actually matter.

“Dev-star” coding isolation is a dangerous myth

Developers siloed in their Dev-Star aren’t the heroes anymore.

“In AI-first products, the design is how it works. UX, Dev, and Data are inseparable. If you’re not collaborating across roles, you’re flying blind.”

AI projects fail because devs siloed in their Dev-Star optimize for model accuracy and ignore user value, while PMs and designers (outside the Dev-Star) hand off pretty UIs that don’t reflect AI uncertainty. It’s a vicious cycle, and one that 85% of AI projects never escape.

“How AI works” is no longer just a dev responsibility. AI is simply too important to be left to developers and data scientists. Everyone on the team needs to be intimately familiar with “how it works” and build enough context to ask the right questions.

The issues with the traditional 3-in-a-box development process have always been there; AI just exposed them, so they are now impossible to miss. “3-in-a-box” reduces the probability you will get your AI project “right” to the chances of catching a black cat in a dark room.

Blindfolded.

While wearing welding gloves.

It’s no surprise most AI projects faceplant — multiple studies show 85%+ of AI initiatives never deliver ROI. (Honestly, I’m surprised any succeed!)

Expensive lesson: just look at some high-profile AI flameouts:

- IBM’s Watson for Oncology blew through $3 billion only to give unsafe, incorrect treatment advice. One doctor on the project called it “a piece of sh—,” lamenting, “We can’t use it for most cases.”

- Zillow’s house-buying AI lost $304 million when it couldn’t adapt to market shifts, forcing Zillow to cut 2,000 jobs.

- Amazon’s recruiting AI had to be scrapped after it started discriminating against women.

Each disaster had the same root cause: teams applied old-school, siloed thinking to AI problems and got old-school failure as a result. AI projects implode when built in a vacuum.

Roll the snowball: a new way to build AI products

So how do we avoid Dev-Star hell? By embracing a fundamentally different way of working — the Snowball model. Instead of big hand-offs and isolated silos, the Snowball model means building together, iterating constantly, and involving real users from day one.

The team (PM, UX, Dev, Data Science — everyone) works as one unit, folding successive layers of insight and functionality into the product like packing a snowball, while customers call BS in real time. The product keeps moving forward in small, testable increments, growing richer and more robust with each “roll” of the Snowball. No big bang, no big flop — just continuous learning and adjusting.

This isn’t touchy-feely talk; it’s a call to arms for UX and product people. AI isn’t the death of design or PM — it’s their rebirth as a team sport. To thrive, we all have to get our hands dirty together: understanding data, designing with AI behavior in mind, and yes, even coding (more on that below). In an AI-first world, “design is how it works” — the UX is inseparable from the code and data. If you’re not in the code, if your data scientists and designers and PMs aren’t in constant collaboration, you’re flying blind.

The snowball manifesto: 10 principles for AI-first teams

Ready to ditch the hand-off? Here are the Snowball principles that will save your AI project (and your sanity):

- Start with Data: before a single pixel, get your data strategy right. LLMs have already ingested most of the world’s publicly available data, so your only competitive moat is the unique proprietary data from your customers and enterprise. Begin every project by identifying and curating the data that will fuel your AI.

- Put the Customer in the Center: as soon as your Snowball has a core, toss it at real users and see what sticks. Get an LLM-centered prototype in front of customers immediately. Their feedback is gold. Build with them, test with them, iterate with them. No more designing in ivory towers. No more building in Dev-Stars.

- Working Code > Any Artifact: wireframes, specs, decks — use them only to get to the real thing. If it isn’t running code in a user’s hands, it doesn’t count. Prioritize prototypes and functional demos over documentation.

- Lightweight over Heavy: ditch the 50-page spec and endless formal deliverables. Opt for lightweight design artifacts like the ones we showed you how to make in our best-selling UX for AI book: a quick Storyboard sketch, a 10-minute Digital Twin diagram, a one-page Value Matrix. Unblock and align the team quickly and let them get back to coding the real thing. No big documentation binders; keep all documentation scrappy and adaptable.

- No Silos, No Handoffs: pretend your team is one unit (because it is). Collapse the walls between roles. Assign tasks by skills and merit, not title. Everyone shares ownership of the outcome — when something fails, no finger-pointing. (There’s no “hole on the other side of the boat” if we’re all in one Snowball together.)

- Balance All the Pieces: don’t let one discipline run off ahead. Data, AI model, and UI/UX should progress in tandem, informing each other. Keep the Snowball rolling in all directions so you don’t end up with a lopsided product (or a “snow log”).

- Bake Evaluation In: testing and metrics aren’t a phase; they’re part of the product. Every time you add data or tweak a model, add an evaluation script or metric to track it. Measure as you go; don’t bolt quality on later.

- Iterate for Clarity: treat every requirement as a hypothesis to be tested. You’ll refine what the AI should do by watching it actually do things. Questions about UX or behavior? Try it and see. Clarity comes from iteration, not from endless debate or lengthy documentation.

- Decide Fast, Adjust Often: if a decision is reversible, decide now and iterate if needed. Speed is your lifeblood. If a decision is truly one-way (hard to undo), get the team’s input, make the call, and then all commit to it 100%. No waffling once the ship has sailed.

- Code Talks, Bullshit Walks: at the end of the day, what matters is that your AI solution works for the user. Talking, planning, theorizing — that’s all a distraction if it never translates to a real product. Build, test, learn. Repeat. Ship something that works.

Notice what these principles have in common: they’re all about action, collaboration, and reality checks. You’re constantly building and validating, together with your team and your users. That’s the Snowball method in a nutshell.
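To make the “Bake Evaluation In” principle concrete, here is a minimal sketch of an evaluation script that lives next to the prototype and runs every time you add data or tweak a prompt. Everything in it is an assumption for illustration: the `EvalCase` structure, the phrase-matching check, and the `fake_assistant` stand-in (which you would swap for a call to your real model) are hypothetical, not a prescribed framework.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EvalCase:
    """One hypothetical test case: a user question plus phrases a good answer should contain."""
    question: str
    must_include: List[str]


def run_evals(answer_fn: Callable[[str], str], cases: List[EvalCase]) -> float:
    """Return the fraction of cases whose answer contains every expected phrase."""
    if not cases:
        return 0.0
    passed = 0
    for case in cases:
        answer = answer_fn(case.question).lower()
        if all(phrase.lower() in answer for phrase in case.must_include):
            passed += 1
    return passed / len(cases)


def fake_assistant(question: str) -> str:
    """Stand-in for the real model call (assumption: replace with your own LLM client)."""
    canned = {
        "How do I reset my password?": "Open Settings, choose Security, then click Reset password.",
        "What is your refund window?": "We offer refunds.",
    }
    return canned.get(question, "Sorry, I don't know.")


CASES = [
    EvalCase("How do I reset my password?", ["settings", "reset password"]),
    EvalCase("What is your refund window?", ["30 days"]),
]

if __name__ == "__main__":
    # Track this number after every data or prompt change.
    print(f"pass rate: {run_evals(fake_assistant, CASES):.0%}")
```

Phrase matching is deliberately crude. The point is that the check exists from day one and the whole team can read it; you can replace the scoring with human ratings or a model-graded rubric once the Snowball has more layers.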

Start with the heart (not the rain spouts)

One of the most radical (and freeing) ideas of the Snowball model is this: your first prototype should have zero traditional UI. Instead of opening Figma, you open a text file. Instead of wireframing, you’re uploading CSVs to ChatGPT and creating RAG files.

Starting with LLM + RAG isn’t the death of design; it’s design evolution in its purest form. While traditional designers debate button placement, evolved designers are validating entire product concepts, armed with a deep understanding of the bespoke data at the core of your company’s competitive advantage in the AI-first universe.

One design leader I know tested an AI customer service solution using only Claude and their existing FAQ database — 500 users eagerly validated the concept before a single mockup existed. Another UXer used GPT-4 plus their company’s insurance product catalog to prototype a shopping assistant that generated multiple pre-orders with zero custom UI.

This approach embodies the new Snowball model perfectly: start with the small, dense core (your data), add LLM capabilities with the existing chat interface, and toss the snowball at some customers to see what sticks.

The fastest way to test an AI product concept is by simulating the AI itself.
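If you want to see how small that dense core can be, here is a rough sketch of a zero-UI prototype: a handful of FAQ entries, a naive keyword retriever, and a prompt builder. The FAQ content, the function names, and the word-overlap scoring are all illustrative assumptions, and the `llm` argument is a placeholder for whichever model client you actually use.

```python
import re
from typing import Callable, Dict, List

# Hypothetical FAQ data; in practice this is your own proprietary content.
FAQ: List[Dict[str, str]] = [
    {"q": "How do I reset my password?",
     "a": "Go to Settings > Security and choose Reset password."},
    {"q": "How do I cancel my subscription?",
     "a": "Open Billing and click Cancel plan."},
    {"q": "Which payment methods do you accept?",
     "a": "We accept major credit cards and PayPal."},
]


def tokens(text: str) -> set:
    """Lowercase word set, used for a crude overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, faq: List[Dict[str, str]], k: int = 2) -> List[Dict[str, str]]:
    """Return the k FAQ entries whose questions share the most words with the user's question."""
    q_tokens = tokens(question)
    ranked = sorted(faq, key=lambda e: len(q_tokens & tokens(e["q"])), reverse=True)
    return ranked[:k]


def build_prompt(question: str, entries: List[Dict[str, str]]) -> str:
    """Assemble the retrieved entries and the user's question into one prompt string."""
    context = "\n".join(f"Q: {e['q']}\nA: {e['a']}" for e in entries)
    return (
        "Answer the customer using only the FAQ entries below. "
        "If the answer is not there, say you don't know.\n\n"
        f"{context}\n\nCustomer: {question}\nAssistant:"
    )


def answer(question: str, llm: Callable[[str], str]) -> str:
    """llm is any function that takes a prompt and returns text (assumption: your own client)."""
    return llm(build_prompt(question, retrieve(question, FAQ)))


if __name__ == "__main__":
    # Echo the prompt instead of calling a model, so you can inspect what the LLM would see.
    print(answer("I forgot my password, how do I reset it?", llm=lambda prompt: prompt))
```

A real prototype would likely use embeddings instead of word overlap, but even this version is enough to put live, data-grounded answers in front of a customer and watch what happens.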

This is Wizard of Oz on steroids

By the time you consider designing a UI, you’ve already validated the core UX: product-market fit, the overall flow, the AI’s output, the tone, and what users actually want from your solution. You can adjust prompts or data in real-time when talking to a user (“Oh, it’s too verbose? Let me tweak that now… how about this?… What do you think of those headers?”) — try doing that with a static prototype!
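Here is one way that live tweaking could look in practice, sketched under assumptions: the `PromptSettings` fields and the wording of the generated instructions are hypothetical, and you would pass the resulting string to your model as its system prompt.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PromptSettings:
    """Hypothetical knobs you might adjust while sitting with a user."""
    tone: str = "friendly"
    max_sentences: int = 6
    use_headers: bool = True


def system_prompt(settings: PromptSettings) -> str:
    """Turn the current settings into plain-language instructions for the model."""
    if settings.use_headers:
        layout = "Organize the answer under short headers."
    else:
        layout = "Answer in plain paragraphs, no headers."
    return (
        f"Use a {settings.tone} tone. "
        f"Keep answers under {settings.max_sentences} sentences. "
        f"{layout}"
    )


before = PromptSettings()
# The user says it's too verbose and the headers get in the way: change two values and rerun.
after = replace(before, max_sentences=2, use_headers=False)

if __name__ == "__main__":
    print(system_prompt(before))
    print(system_prompt(after))
```

Keeping the settings immutable and creating a new copy for each tweak means you end the session with a record of every variation the user reacted to.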

In a matter of days, you’ll have nailed down the things that truly matter (e.g., what the AI should say and do, what “good” looks like to the user) while competitors are still arguing over button colors and playing Telephone Pictionary with requirements. The designers who embrace this data-first, AI-first prototyping aren’t abandoning their craft; they’re elevating it. They focus on what really matters to UX (the heart of the experience, not the gargoyle rain spouts) and validate real solutions instead of churning out pretty placeholders choked with lorem ipsum.

So ask yourself: Will you evolve with the discipline, or cling to the sinking Figma Titanic while others ship working AI-driven products? Because make no mistake, the iceberg has already hit. The old UI-centric design ship is taking on water.

UX designers: code or die (evolve or fade out)

This is a rallying cry to my fellow UX professionals. AI is flipping our field upside down. If you’re the “UX designer” who only makes static Figma slideshows, you’re about to go the way of the telegraph operator in the smartphone era.

Harsh but true.

In the Snowball world, the designers who learn to code (“vibe coding” with AI help — see below) and embrace low-fi experimentation are running circles around those stuck in old workflows.

They’re shipping AI products 10× faster than their peers because they’re not waiting on hand-offs and the Telephone Pictionary that entails. Instead, they’re helping their teams create functional prototypes, getting them in front of customers, and iterating in hours, not weeks.

Let’s be clear: this isn’t about turning UXers into full-time software engineers. It’s about breaking down the Dev-Star silos that say “designers design, engineers build.” Modern UXers can and should dip into implementation, especially now that tools like Claude and GPT-4 can help generate high-quality working code. We call it “vibe coding” — designers translating their vision directly into working prototypes, using AI to fill in the gaps. When you do this, you transform from a mere wireframe artist into a product catalyst. While others are still redlining specs or begging devs to review their mocks, you’re already testing a live prototype with real users. And guess what? The market rewards it. Designers who can straddle both worlds command higher salaries and bigger roles, because they drive innovation instead of decorating it. Those who cling to just pushing pixels are, frankly, being automated away by the very AI tools they ignored.

I know this sounds intense. It is intense! But it’s also exciting. This is a chance to reinvent what it means to be a UX designer or product person. The ones who seize this moment are going to define the next generation of products. The ones who don’t… well, you probably won’t hear from them in a few years.

Ready to lead the AI-first UX revolution?

The AI revolution isn’t coming — it’s here. The only question now is whether you will be one of those who shape it. The old way is dying (good riddance); a new, more dynamic, more empowering way of working is emerging. If you’re ready to grab that mantle, I’m here to help you make it happen. Don’t let your career sink with the old ship. Let’s build something incredible instead.

Code talks. Bullshit walks. The future belongs to those who build.

Are you in?

Greg

The article originally appeared on UX for AI.

Featured image courtesy: Greg Nudelman.