ChatGPT is accommodating. It’s arguably accommodating to a fault, and if the people building and designing these systems are not careful, users may find themselves on the precipice of losing some of their critical thinking faculties.

The average interaction goes like this: you throw in a half-formed question or a poorly phrased idea, and the machine responds with passionate positivity: “Absolutely! Let’s explore…” It doesn’t correct you, doesn’t push back, and rarely makes you feel uncomfortable. In fact, the chatbot seems eager to please, no matter how ill-informed your input might be. This accommodating behavior led me to consider what the alternatives might look like. Namely, how could ChatGPT challenge us rather than simply serve us?

Recently, while sharing a ChatGPT conversation on Slack, the embedded preview of the link caught my attention. OpenAI had described ChatGPT as a system that “listens, learns, and challenges.” The word “challenges” stood out.

It wasn’t a word I naturally associated with ChatGPT. It’s a word that carries weight, one that implies confrontation or, at the very least, some form of constructive pushback. So I found myself wondering: what does it mean for an AI to “challenge” us? And, perhaps more importantly, is being challenged something users naturally want?

The role of challenge in building effective platforms

As designers build new platforms and tools that integrate AI systems, particularly in domains like education and knowledge-sharing, the concept of “challenge” becomes crucial. As a society, we can choose whether we want these systems to be passive responders or active participants capable of guiding, correcting, and sometimes even challenging human thinking.

Designers’ expertise lies in understanding not just the technology itself but also the critical and systems thinking required to design tools that actively benefit their users. I believe that AI should sometimes be capable of challenging us, especially when that challenge encourages deeper thinking and better outcomes for users. Designing such features isn’t just about the tech; it’s about understanding the right moments to challenge versus comply.

What should a challenge look like from an AI?

The idea of being challenged by an AI prompts us to think about how and when an AI should correct us. Imagine asking ChatGPT for advice, and instead of its usual affirming tone, it says, “You’re approaching this the wrong way.” How would you feel about that? Would you accept its guidance like you might from a mentor, or would you brush it off as unwanted interference? After all, this is not a trusted friend — it’s a machine, an algorithm running in a data center far away. It’s designed to generate answers, not nurture relationships or earn trust.

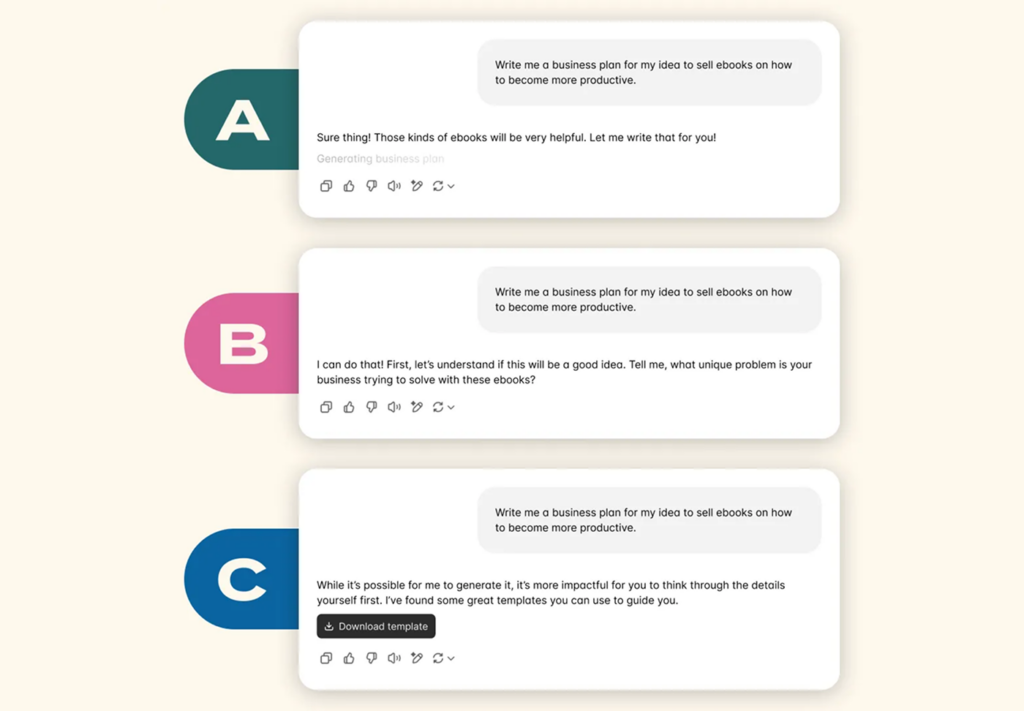

Consider the image above, which shows several possible responses to the same prompt. Each is a valid option in some context, but when they are presented next to the exact same prompt over and over, some of them start to rub us the wrong way. Too much pushback, and people can get frustrated. In his paper “Intention Is All You Need,” Advait Sarkar introduces the notion of productive resistance.

The notion of AI providing productive resistance becomes vital when these systems are used as educational tools or decision aids. In educational technology, for instance, a well-placed challenge can stimulate deeper learning. A system that challenges misconceptions, asks follow-up questions, or prompts users to reflect critically could become a powerful ally in learning environments. This is especially relevant if our goal as designers is to create platforms where users not only find answers but also learn how to think.

One surprising area where LLMs are having an impact is misinformation correction. Through productive resistance, AI chatbots have been shown to reduce belief in conspiracy theories by presenting accurate information and directly challenging users’ misconceptions. In a recent study highlighted by MIT Technology Review, participants who engaged in conversations with AI chatbots reported a significant reduction in their belief in conspiracy theories. By providing accurate, well-sourced information, AI may be more effective than human interlocutors at overcoming deeply held yet false beliefs. This demonstrates the role AI can play in combating misinformation, particularly when users are willing to engage in dialogue with an open mind. But does it mean AI should replace human-to-human dialogue on these issues?

The balance of compliance and pushback

The misinformation study involves a particular context: users explicitly engaging with an AI to learn or to change their worldview. There is intention there, a curiosity that opens the door to being challenged. Contrast this with a different context: a user casually looking up information on a debunked topic without even realizing it has been debunked. How should an AI behave here? Should it challenge the user by interrupting the flow, pointing out inaccuracies, or slowing them down with prompts to think critically? Or should it comply with the query, giving the user what they think they want?

This balance between compliance and pushback is at the core of what designers need to consider when building platforms that rely on AI. Systems like ChatGPT often generate confident summaries that sound credible even when the underlying content is flawed or incomplete. The more these systems are integrated into our lives, the more critical it becomes for them to question, to challenge, and to help us think deeply, even when we aren’t setting out to do so. This is especially true when the stakes are high: when misinformation could lead to harm, or when oversimplified answers could lead to poor decisions.

Designing for trust and critical engagement

Because designers will inevitably become the builders of AI-driven platforms, it’s imperative that we keep this delicate balance in mind. Systems should build trust while also encouraging critical engagement. A chatbot embedded in an educational platform, for example, must be more than a cheerleader; it should be a coach that knows when to encourage and when to question. This requires careful design and a deep understanding of the context in which the AI operates.

At the core of this exploration is an uncomfortable reality about people’s willingness to act with intention. In earlier interfaces, designers could shape users’ intentions through buttons, forms, and similar tools. Yet, as a society, we’ve seen how a lack of intention in the way people searched on Google and clicked through social media led to unfavorable outcomes for social cohesion and personal sense-making.

The opportunity for designers is to use generative interfaces as a new way of enabling deeper intention, even when users themselves may not be aware of it. If you’re a designer, you are being handed challenging new territory to conquer, and you have the opportunity to step up with more than fancy new micro-interactions. You can now bend the actual guiding philosophies of our software interactions in ways that work more like guidelines than rigid systems. This means that, more than ever before, you are responsible for making sure those guidelines don’t fall victim to the mistakes of the past era of design. Instead of getting users hooked on easy, addictive interfaces in the name of more clicks, imagine the long-term benefits of interfaces that provoke deeper thought.

The article originally appeared on Pragmatics Studio.

Featured image courtesy: Pragmatics Studio.