There is no doubt that AI has become a buzzword in the past few years, and some people argue that it can ruin the experience of using digital products (the documentary “The Social Dilemma” is the best-known example).

Yet, with the right approach, AI can actually improve the experience of using digital products. To understand how, we need to go back to the fundamentals of UX and interaction design and build from there.

Let’s get started.

The product needs to truly help users work faster

Experts already recognize that AI is most useful when it helps people work faster by enhancing their capabilities. This is already happening in sectors such as the creative and technology industries. The most important thing to remember is that AI shouldn’t confuse us while we use digital products to reach our goals quickly. By “not confuse us,” I mean that it should give us enough feedback to know that we are on the right path to our goals and what we can do next.

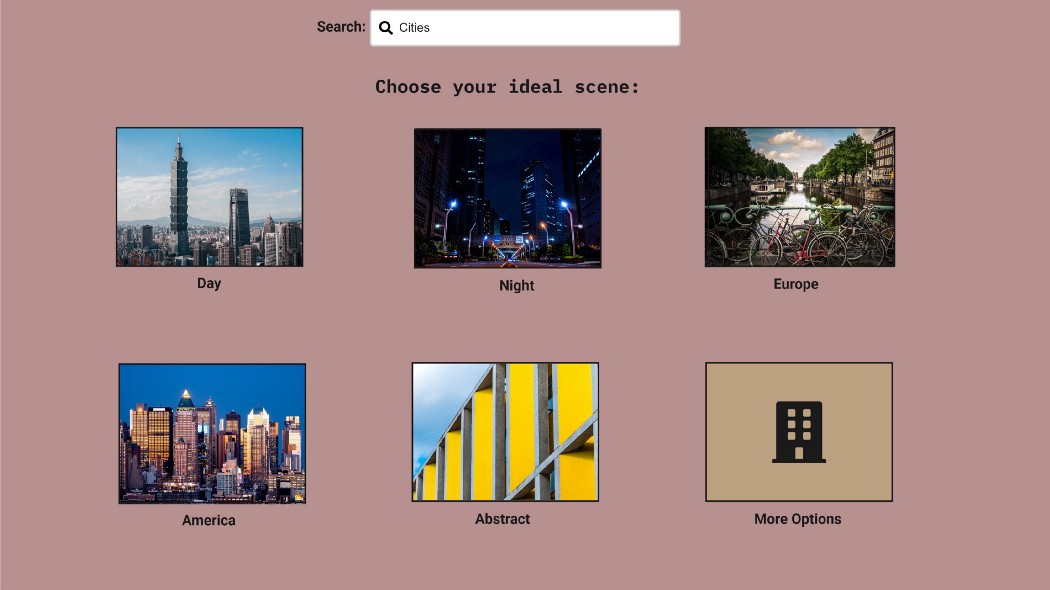

Consider a website that sells stock photos and music and returns 10,000 search results to choose from: its design can be improved. Presenting someone with so many options ignores Hick’s Law, which states that the time it takes to make a decision increases with the number of options available. How can we make AI work for us in this case?
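For reference, Hick’s Law is usually written as below, where T is the time needed to decide, n is the number of equally likely options, and b is a constant fitted from experiments. As a rough worked comparison, assume a screen with 5 choices (say, four contexts plus a “see more” option) versus one with 10,000 results:

$$
T = b \cdot \log_2(n + 1)
$$

$$
\frac{T(10\,000)}{T(5)} = \frac{\log_2 10\,001}{\log_2 6} \approx \frac{13.29}{2.58} \approx 5.1
$$

Taken literally, the formula predicts a decision roughly five times slower. Browsing a grid of photos is not a strict Hick’s Law task (the options are not equally likely, and people scan rather than weigh every item), so treat this as an intuition about the direction of the effect, not a precise prediction.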

We can use AI to guide this website’s users toward faster decisions with a machine learning technique called clustering. Clustering groups photos with similar characteristics, and the site can then present these groups to users based on their search terms. Choosing among a handful of groups first can speed up the decision process.

For instance, if we searched for “cities” on such a website, we would see fewer options on screen: a few contexts (e.g., day, night, Europe, abstract) based on the AI’s clustering, plus an option to dig beyond them. I created the mockup below to illustrate the idea. A user who sees these different contexts for city photos may already feel a little less “lost” and potentially choose photos faster.

An example of helping users choose the right context. Photo by author.
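The article does not name a specific clustering algorithm, so the sketch below uses k-means, one common choice, to show how search results could be split into contexts. It is a minimal sketch under loud assumptions: each photo is described by a made-up two-number feature vector (brightness and warmth), and the names `photos`, `kmeans`, and `group_results` are hypothetical. A production system would more likely cluster learned image embeddings with a library implementation.

```python
import math

# HYPOTHETICAL feature vectors for photos returned by a "cities" search:
# (brightness, warmth), both scaled to 0..1. A real system would use
# richer image embeddings; two numbers just keep the sketch readable.
photos = {
    "skyline_noon": (0.90, 0.60),
    "street_morning": (0.85, 0.55),
    "plaza_day": (0.88, 0.65),
    "skyline_night": (0.10, 0.30),
    "neon_street": (0.15, 0.35),
    "bridge_night": (0.12, 0.25),
}


def kmeans(points, k, max_iterations=20):
    """Plain k-means; returns one cluster label per point."""
    # Farthest-first initialisation: start from the first point, then keep
    # adding the point that lies farthest from every centroid chosen so far.
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))

    labels = [0] * len(points)
    for _ in range(max_iterations):
        # Assignment step: each photo joins its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                new_centroids.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster in place
        if new_centroids == centroids:
            break  # converged: no centroid moved
        centroids = new_centroids
    return labels


def group_results(results, k):
    """Group search results (photo id -> feature vector) into k lists of ids."""
    ids = list(results)
    labels = kmeans([results[i] for i in ids], k)
    clusters = {}
    for photo_id, label in zip(ids, labels):
        clusters.setdefault(label, []).append(photo_id)
    return list(clusters.values())


for group in group_results(photos, k=2):
    print(group)
```

With this toy data, the script prints the daytime photos (`['skyline_noon', 'street_morning', 'plaza_day']`) and the nighttime photos (`['skyline_night', 'neon_street', 'bridge_night']`) as two separate groups. Note that k-means only returns unnamed groups; turning them into labels such as “day” or “night” on screen would still need photo tags or a human decision.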

The product should still allow users to take control

Many people still perceive AI as a “black box” whose workings they cannot see, and they still want some degree of control over it. We can assume that the more expertise users have, the more control they will want. Therefore, you should let your power users (and anyone else who wants it) take more control over the system.

Giving power users more control over the experience that AI enables is good practice for two reasons:

- Since the AI learns from experience, better input from users can help it learn faster and get better at helping other users.

- It gives your users a better experience with the product, because they get the feeling that “I am in control of things.”

Google is a good example of a company that applies this principle well. Anyone can use its search engine, but power users can use search operators to find results much faster.
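To make the idea concrete, here is a minimal Python sketch of what a power user does by hand: combining plain keywords with Google’s search operators for an exact phrase (quotes), a single site (`site:`), and excluded words (a leading `-`). The helper names `build_query` and `search_url` are hypothetical and exist only for illustration; the result is simply a string a user could type into the search box.

```python
from urllib.parse import urlencode


def build_query(terms, exact_phrase=None, site=None, exclude=()):
    """HYPOTHETICAL helper: combine keywords with Google search operators."""
    parts = list(terms)
    if exact_phrase:
        parts.append(f'"{exact_phrase}"')  # quotes: match this exact phrase
    if site:
        parts.append(f"site:{site}")  # only results from this site
    parts.extend(f"-{word}" for word in exclude)  # leading minus: exclude word
    return " ".join(parts)


def search_url(query):
    """HYPOTHETICAL helper: turn a query into a Google search URL."""
    return "https://www.google.com/search?" + urlencode({"q": query})


query = build_query(["cities"], exact_phrase="night skyline",
                    site="example.com", exclude=["drawing"])
print(query)
print(search_url(query))
```

Here `query` is `cities "night skyline" site:example.com -drawing`. The same three operators typed by hand give an expert the precision that a casual user never has to learn, which is exactly the optional control this section argues for.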

The experience should still be consistent

The documentary “The Social Dilemma” showed some striking examples of how social media websites can bias what we see: they use AI to select content for us and focus our attention on it. Many people who use these applications are still not aware that this is even happening. Even so, I’m sure it frustrates people who want to find a post they saw an hour ago that is now “gone”.

This is an example of an inconsistent experience that can confuse us as users. If the AI does not offer an experience that stays consistent over time, and gives us no way to “undo” certain actions on the screen, it violates several UX principles, including consistency as well as user control and freedom.

A potential solution to the problem could be a “non-AI” mode that looks like an “old-school” chronological feed (more like Facebook’s 2008 feed). Another solution could be a “save the post for later” option, which gives us some degree of control over the system. In addition, from an ethical perspective, it could help consumers if companies disclosed the AI components of their products. That way, we would at least know why these inconsistencies happen.

To sum up, products in which AI helps users work faster without creating tunnel vision (it’s a fine balance) can provide a good user experience. It is also advisable to let power users (or anyone else who wants to) take more control over the system when needed. After all, machines should enhance human capabilities, not limit them.