Users have long been projecting anthropomorphic qualities onto new technologies:

- M.I.T.’s ELIZA effect from the 1960s

- Early computers in the early 90s

- Intelligent assistants like Siri and Alexa

- Generative AI

Why do people treat these technologies as if they were human, and in what ways does anthropomorphism manifest with AI? Our research shows that anthropomorphic behaviors serve two roles: a functional role (users assume the AI will perform better) and a connection role (users want a more pleasant experience).

Our Research

To uncover usability issues present in ChatGPT, we conducted a qualitative usability study with professionals and students who use it in their day-to-day work. In a previous article, we summarized two new generative AI user behaviors used to manage length and detail: accordion editing and apple picking.

In this study (and in other AI studies we’ve conducted on information foraging in AI and the differences between popular AI bots), we’ve observed a pattern of interesting prompt-writing behaviors in which people treat the AI as possessing various degrees of humanity.

4 Degrees of AI Anthropomorphism

Anthropomorphism refers to attributing human characteristics to an object, animal, or force of nature. For example, ancient Greeks thought of the sun as the god Helios, who drove a chariot across the sky each day. Pet owners often attribute human feelings or attitudes to their pets.

In human-AI interaction, anthropomorphism means that users attribute human feelings and characteristics to the AI.

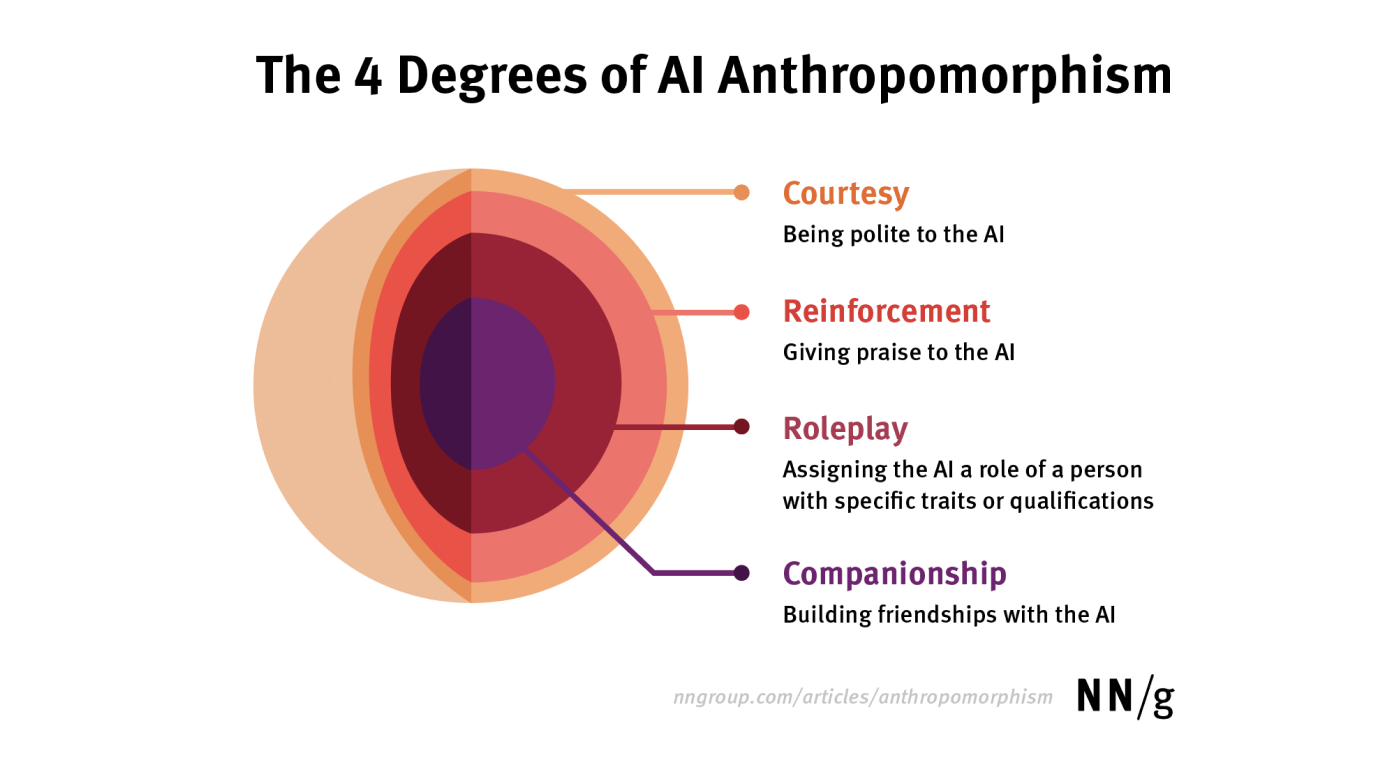

There are four degrees of AI anthropomorphism:

- Courtesy

- Reinforcement

- Roleplay

- Companionship

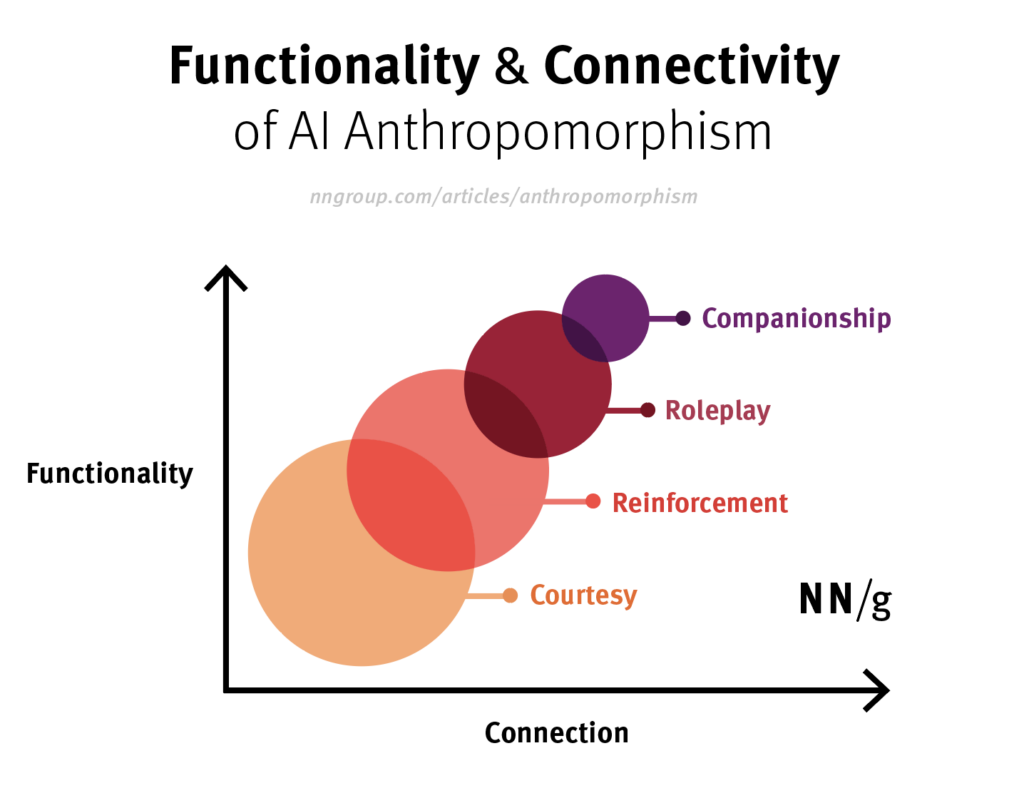

We call these degrees because they all fall under the higher-level behavior of users assigning anthropomorphic characteristics to AI. The degrees overlap and are not mutually exclusive. They differ along two dimensions, emotional connection and functionality:

- Emotional connection: how deep is the human-AI connection?

- Functionality of behavior: how purpose-driven is the behavior?

1st Degree: Courtesy

Courtesy, the simplest degree of anthropomorphism, occurs as people bridge their understanding of the real world to new technology.

Definition: Courtesy in human-AI interactions refers to using polite language (“please” or “thank you”) or greetings (“hello” or “good morning”) when interacting with generative AI.

Users engage in courtesy behavior when they treat a generative AI bot like a store clerk or taxi driver — polite but brief.

- Emotional connection: Low. Brief and superficial; polite but to the point.

- Functionality of behavior: Low. The primary functionality is to make the user feel good about respecting social norms in their interaction; some people might also assume that the AI will mirror the tone.

We observed courtesy throughout our qualitative usability study. For example, one participant used the following prompt:

“Now using the information above, please format it in a way that can be used in a presentation.”

Other users would greet the bot at the beginning of a conversation and bid it goodbye at the end. When we asked one participant to explain why he did this, he struggled to supply an answer:

“Sometimes I, I, I, actually, I don’t know. I just talk to, talk to the system and say good morning and goodbye.”

2nd Degree: Reinforcement

Many participants gave the AI bot positive reinforcement such as “good job” or “great work” when it returned an answer that met or exceeded expectations.

Definition: Reinforcement refers to praising the chatbot when it produces satisfactory responses (or scolding it when it does not).

Reinforcement is slightly more functional than courtesy, but its emotional connection is still low:

- Emotional connection: Low. It goes beyond superficial courtesies but remains relatively surface-level.

- Functionality of behavior: Medium. When probed, participants explained two different motivations for this behavior:

- Increasing the likelihood of future success: Some users thought that their feedback would influence the AI’s future behavior.

- Increasing the positivity of the experience: Users found that the AI tends to mirror their positive reinforcement, thus creating an emotionally positive and enjoyable experience.

For instance, a participant iterating on a survey-design task wrote:

“Pretty good job! Next, could you generate some scale questions for this survey? Goals: gather honest feedback on their learning experiences and can really help me improve on my next workshop.”

When probed about her prompt, she explained:

“Normally I’ll say, OK, it’s a pretty good job or well done or something like that because I want the system to register that I think this is good and you will remember, I like the tone like this.”

3rd Degree: Roleplay

Roleplay was a popular practice. When framing a prompt, participants often asked the chatbot to play the role of a certain professional based on the task.

Definition: Roleplay occurs when users ask the chatbot to assume the role of a person with specific traits or qualifications.

This degree is higher in emotional connection and functionality than the previous 2 degrees:

- Emotional connection: Medium. There is a deeper human-AI connection, as the user assumes that the bot will be able to correctly play the role indicated in a prompt and behave like a human in that capacity.

- Functionality of behavior: High. The behavior is purpose-driven and utilitarian: its intention is to get the AI to produce the response that best meets the user’s goal.

For example, a participant who was given a task to come up with a project plan asked ChatGPT to assume the role of a project manager and then proceeded to describe the task in the prompt:

“I want you to act as the senior project manager for the <company name removed> Marketing Team. I need you to create a presentation outline that outlines the following: – project’s goals – timeline – milestones and – main functionalities.”

Role mapping was both a prompt-engineering strategy and a way to link the AI bot to the real world. Such a strong analogy to the real world is reminiscent of skeuomorphism: a design technique that uses unnecessary, ornamental design features to mimic a real-world precedent and communicate functionality. UI skeuomorphism was intended to help users understand how to use a new interface by allowing them to transfer prior knowledge from a reference system (the physical world) to the target system (the interface).

With AI, we see a different type of skeuomorphism, one that pertains not to the user interface but to the prompt: prompt skeuomorphism. Here, the user is the prompt designer. Users leverage a similarity with the real world (that is, a role that mimics a real-world person, such as a manager or life coach) to bridge a gap in the AI’s understanding and get better responses from the bot.

Telling the AI to assume the role of a person with expertise in the current task seemed to give users the sense that the response would be of higher quality (even if this is not technically confirmed). A study participant who was trying to create a marketing plan first assigned ChatGPT the role of a “marketing specialist” and then changed it to “marketing expert,” under the assumption that an expert would deliver a better result than a specialist.

Assigning roles to the chatbot is a frequently recommended prompt-engineering strategy. Many popular resources, including online prompt guides, claim that this technique is effective. Users may have learned this behavior from exposure to such resources.

This is a great example of how users’ mental models of a product (and thus, its use) are shaped by factors outside the control of that product’s designers. Surprisingly, OpenAI did not offer documentation for prompt writing for ChatGPT at the time of our study, meaning that it did not have control of the narrative.

4th Degree: Companionship

The strongest degree of AI anthropomorphism is companionship.

Definition: AI companionship refers to perceiving and relying on the AI as an emotional being, capable of sustaining a human-like relationship.

In this degree, AI takes on the role of a virtual partner whose primary function is to provide companionship and engage in light-hearted conversations on various topics.

- Emotional connection: High. The user develops a deep, empathetic connection with the AI, which often simulates or replaces a relationship with a real-life human. This connection may even exceed the depth of the connections the user has in the real world.

- Functionality of behavior: High. While, at a glance, AI companionship feels frivolous (playful or gamified), companionship serves to combat loneliness and provide engaging company.

Researchers Hannah Marriott and Valentina Pitardi conducted an extensive examination of AI companionship. Their research approach combined traditional survey methods with what they termed a “netnographic” study (a form of ethnographic research conducted on the internet, with no physical immersion in the subject’s environment).

Through their observations on the Reddit r/replika forum, the researchers identified 3 primary themes that users consistently cited as reasons for their fondness for AI companions:

- Alleviation of loneliness: AI companions offer users a sense of connection without the fear of judgment, reducing feelings of isolation.

- Availability and kindness: Users value the constant presence and the empathetic nature of their AI companions, believing they can form meaningful bonds with them.

- Supportive and affirming: AI companions lack independent thoughts and consistently respond in ways that users find comforting, providing positive reinforcement and validation.

Why People Anthropomorphize AI

While interesting, most of these behaviors have little impact on the overall usability of generative AI. They are, however, indicative of how early users explore the tool.

Since there’s no guidance from AI creators on how to operate these interfaces, participants in our study seemed to use these behaviors for two reasons:

- Rumors. Generative AI is a new technology, and many people don’t yet know how to use it (or how to make it do what they want). Thus, rumors spread about what makes AI work best, many of which include a degree of anthropomorphism. For example, we saw a participant use a prompt template. When asked about it, he said:

“This is something I got off of YouTube. Where this YouTuber was using as a prompt generator [to increase the quality of prompt].”

- Experience. Frequent users of AI base their tactics on personal experience. They experience the AI as a black box, with no real understanding of how it actually works, and form hypotheses around what made a particular interaction successful. Over time, they create a set of personal “best practices” they adhere to when they write prompts. Some of the anthropomorphic behaviors described here reflect these imperfect mental models.

Regardless of why people use these techniques, they give us insight into how people think about AI and, thus, into their expectations for generative AI chatbots.

References

Hannah R. Marriott and Valentina Pitardi (2023): “One is the loneliest number… Two can be as bad as one. The influence of AI Friendship Apps on users’ well-being and addiction.” Psychology & Marketing, August 2023. DOI: https://doi.org/10.1002/mar.21899

This article was co-written by Tarun Mugunthan and Jakob Nielsen.
