By Leah Acosta, Caroline Kim and Ryan Finch

Zero-to-one product teams — those that are creating brand new products — have dozens of ways to test product ideas. Well-tested approaches include A/B tests, interactive prototypes, and wireframes. We use all of those at Meta, but we were missing a way to quickly test a bunch of ideas (15+) before we even had prototypes. After several rounds of testing and iteration, we landed on a version of quantitative concept testing that has helped our teams integrate meaningful user feedback into the idea prioritization process.

In this method, we use text descriptions instead of images because, at this early stage, we want to know whether a core idea is valuable to our target market. To check this choice, we A/B-tested our survey design by running the exact same survey twice: once with text descriptions only, and once with both images and text descriptions. We found that, while images can help clarify a concept and add color to its description, the design executions can be distracting. Text descriptions remove the risk of participants fixating on a specific design implementation. Feedback on the execution is better suited to qualitative testing later in the process.

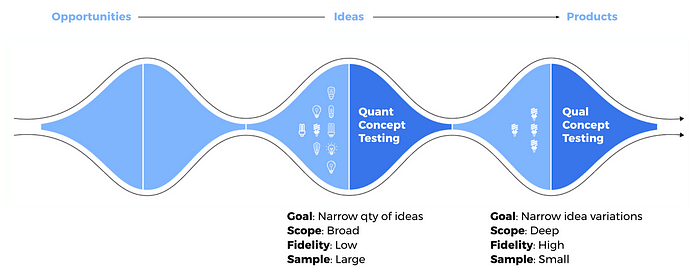

How Quantitative Concept Testing Fits Into the Product Development Process

In a typical product development process, teams move from many broad opportunities to a narrower set at each stage. Due to time and resource constraints, teams often rely on their own intuition to decide which ideas to develop. Quantitative concept testing helps ensure that the user voice influences which ideas are developed into testable products. Qualitative testing, in the next stage, is better suited to narrowing down the strongest executions of an idea.

Our Approach: MaxDiff + Sequential Monadic Survey

Quantitative concept testing has two goals:

- Identify the most promising concepts for further development from the participants’ perspective, and order them in a ranked list.

- Understand the strengths and weaknesses of each concept, and pinpoint low-ranking concepts with hidden promise.

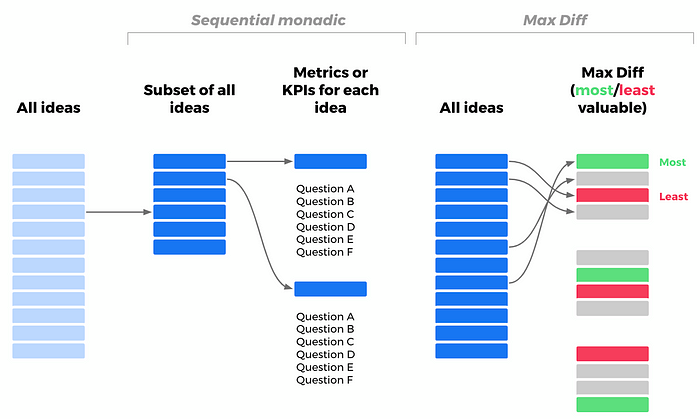

To address both goals, our approach combines two distinct methods in a single survey.

The first goal is addressed by a MaxDiff data collection and analysis method. MaxDiff produces a stack-ranked list of ideas, from most to least valuable, based on forced tradeoffs that participants make in the survey. For example, out of a total of 21 concepts, participants see randomized subsets of 4 concepts at a time and choose the most and least valuable in each subset, as shown in the final column in the diagram above.
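To make the scoring concrete, here is a minimal sketch of the simplest way to turn MaxDiff choices into a ranked list: count how often each concept is picked as most valuable and as least valuable, then divide by how often it was shown. This counting approach is an assumption for illustration; MaxDiff studies often estimate scores with multinomial logit or hierarchical Bayes models instead, and the post doesn't say which analysis Meta uses. The function name and data layout are hypothetical.

```python
from collections import Counter


def maxdiff_count_scores(tasks):
    """Rank concepts with simple best-minus-worst counting.

    tasks: iterable of (shown, best, worst) tuples, one per MaxDiff screen,
    where `shown` is the subset of concepts displayed (e.g., 4 of 21),
    `best` is the concept picked as most valuable, and `worst` the one
    picked as least valuable.
    Returns (concept, score) pairs sorted from most to least valuable,
    where score = (times best - times worst) / times shown.
    """
    shown_counts = Counter()
    best_counts = Counter()
    worst_counts = Counter()
    for shown, best, worst in tasks:
        if best not in shown or worst not in shown or best == worst:
            raise ValueError(f"invalid picks {best!r}/{worst!r} for {shown!r}")
        shown_counts.update(shown)
        best_counts[best] += 1
        worst_counts[worst] += 1
    scores = {
        concept: (best_counts[concept] - worst_counts[concept]) / n
        for concept, n in shown_counts.items()
    }
    # Highest score first: this is the stack-ranked list of concepts.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)
```

Each task records the four concepts a participant saw on one screen plus their two picks. Scores fall between -1 (always picked as least valuable when shown) and 1 (always picked as most valuable when shown).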

The second goal is achieved with a sequential monadic survey design. This method evaluates each individual concept along the same set of metrics. Participants see one concept description at a time, and rate each metric on a five-point scale, as shown in the center column in the diagram above. Common metrics include likelihood to use, uniqueness, and comprehension.
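Once the ratings are in, each concept and metric pair needs a summary number. The post doesn't prescribe one; a minimal sketch, assuming the common convention of reporting the mean rating alongside the "top-two-box" share (the fraction of participants choosing 4 or 5 on the five-point scale), could look like this. The function name and data layout are hypothetical.

```python
from statistics import mean


def summarize_ratings(ratings):
    """Summarize five-point ratings for each (concept, metric) pair.

    ratings: dict mapping (concept, metric) -> list of integer ratings 1-5.
    Returns a dict with the sample size, mean rating, and top-two-box share
    (assumed convention: ratings of 4 or 5 count as favorable).
    """
    summary = {}
    for key, values in ratings.items():
        if not values:
            continue  # concept/metric pair with no responses yet
        if any(v not in (1, 2, 3, 4, 5) for v in values):
            raise ValueError(f"ratings for {key} must be on a 1-5 scale")
        summary[key] = {
            "n": len(values),
            "mean": mean(values),
            "top2box": sum(1 for v in values if v >= 4) / len(values),
        }
    return summary
```

Splitting the raw ratings by audience segment before calling the function gives one summary per segment, which makes segment comparisons straightforward.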

While it would be nice to get metrics for every concept from every participant, we often have too many concepts (15–21) for our ideal survey length (20 minutes or less). To limit the length of the survey for participants, we split our participants into subgroups so that any given participant only answers metric questions for 5–7 concepts. Every participant sees all the concepts in the MaxDiff, but only gives metrics feedback on a subset of concepts.
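One way to implement the split, sketched under assumptions the post doesn't spell out: deal the concepts round-robin into the smallest number of non-overlapping blocks that keeps each block at 7 concepts or fewer, rotate participants across blocks so each block gets a similar number of people, and shuffle concept order within a block so no concept is always seen first. The helper names are hypothetical, and the order shuffling is a common practice for sequential monadic designs rather than something described above.

```python
import math
import random


def build_blocks(concepts, max_per_block=7):
    """Split concepts into non-overlapping blocks of at most max_per_block.

    Dealing concepts round-robin keeps block sizes within one of each other.
    For 15-21 concepts and max_per_block=7 this yields 3 blocks of 5-7.
    """
    n_blocks = math.ceil(len(concepts) / max_per_block)
    return [concepts[i::n_blocks] for i in range(n_blocks)]


def concepts_for_participant(participant_index, blocks, rng):
    """Rotate participants across blocks and shuffle concept order.

    Every participant still sees all concepts in the MaxDiff; this only
    selects which concepts they rate on the metrics.
    """
    block = list(blocks[participant_index % len(blocks)])
    rng.shuffle(block)  # randomize presentation order (assumed practice)
    return block


concepts = [f"Concept {i}" for i in range(1, 22)]  # 20 concepts + 1 benchmark
blocks = build_blocks(concepts)
rng = random.Random(42)  # fixed seed so the assignment is reproducible
print([len(b) for b in blocks])  # prints [7, 7, 7]
```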

Planning Your Quantitative Concept Test

Identifying Segments and Markets

Which countries are most important for your team? Which customer segments are most important to compare? Note that the general population will be the least expensive to reach. Costs increase linearly as your segments become more specific. We recommend that each respondent evaluate no more than 7 concepts (to limit survey length), with roughly 300 respondents (n ≈ 300) per concept, per segment.
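The sample-size guidance translates into a quick back-of-the-envelope calculation. A minimal sketch, assuming concepts are split into non-overlapping rating blocks (so each block needs its own ~300 respondents) and counting every market and segment combination you want to report on as a separate segment; the function name is hypothetical.

```python
import math


def respondents_needed(num_concepts, n_per_concept=300,
                       max_concepts_per_respondent=7, num_segments=1):
    """Estimate completed surveys needed for a quantitative concept test.

    Assumes concepts are split into non-overlapping blocks of at most
    max_concepts_per_respondent, each block rated by n_per_concept people,
    repeated for every segment (e.g., each market x audience combination).
    """
    blocks = math.ceil(num_concepts / max_concepts_per_respondent)
    return blocks * n_per_concept * num_segments


print(respondents_needed(21))                  # 3 blocks x 300 = 900
print(respondents_needed(21, num_segments=2))  # 1800 across two segments
```

Because the total scales with the number of segments, each additional market or audience segment adds a full sample of its own.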

Setting Up the MaxDiff

Write one-sentence text descriptions of every concept. We recommend no more than 20 concepts, plus at least one benchmark concept (21 total concepts max). It’s important to collaborate with your key stakeholders (e.g., content strategist, designer, product manager) to align on concepts and descriptions and ultimately to achieve impact.
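These limits are easy to check automatically before a survey goes to programming. A minimal sketch, assuming concepts live in simple name-to-description dictionaries; the function name is hypothetical, and the one-sentence check is a rough heuristic that counts sentence-ending punctuation (it will misfire on abbreviations such as "e.g.").

```python
import re

MAX_TEST_CONCEPTS = 20   # recommended ceiling for concepts under test
MAX_TOTAL_CONCEPTS = 21  # test concepts plus benchmark(s)


def validate_concepts(test_concepts, benchmarks):
    """Return a list of problems with a concept set (empty if none).

    test_concepts, benchmarks: dicts mapping concept name -> description.
    """
    problems = []
    if len(test_concepts) > MAX_TEST_CONCEPTS:
        problems.append(f"{len(test_concepts)} test concepts; "
                        f"recommended max is {MAX_TEST_CONCEPTS}")
    if not benchmarks:
        problems.append("add at least one benchmark concept")
    total = len(test_concepts) + len(benchmarks)
    if total > MAX_TOTAL_CONCEPTS:
        problems.append(f"{total} concepts in total; max is {MAX_TOTAL_CONCEPTS}")
    for name, text in {**test_concepts, **benchmarks}.items():
        # Rough heuristic: split on ., !, ? and count non-empty pieces.
        sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
        if len(sentences) != 1:
            problems.append(f"{name}: expected one sentence, found {len(sentences)}")
    return problems
```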

Setting Up the Sequential Monadic Survey

Choose your metrics. Your needs might be different, but we landed on the following set of metrics for each survey we do: Overall Opinion, Likelihood to Use, Uniqueness, Comprehension, Unmet Needs, and Effect on Experience. All recommended metrics have gone through cognitive testing.

Guidelines for Concept Descriptions

Consider these five key components when developing concept descriptions:

- Consistent length and detail: All concepts being tested should be similar in length and level of detail.

- Clear language: Be clear, simple, and specific. Write in a voice appropriate for the target audience. Make sure to use neutral, consumer-friendly words and phrases — no internal company language.

- Uniform phrasing and tone: Keep the verb tense, punctuation, tone, and text formatting (bolding, etc.) consistent across concepts.

- Singular ideas: Focus on the main idea of the concept. Testing “double-barreled” concepts (ones that combine two ideas) will obscure understanding and muddy reactions.

- Address a need: Ensure that the concepts speak to an underlying consumer need or address a relevant pain point.
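The first two guidelines lend themselves to a quick automated pass. A minimal sketch, assuming word count is a reasonable proxy for "length and detail" and that your team keeps its own list of internal terms to flag; the 30% tolerance, the function name, and the example term are hypothetical choices. Tone, singular ideas, and whether a concept addresses a need still require human review.

```python
from statistics import median


def lint_descriptions(descriptions, internal_terms=(), tolerance=0.3):
    """Flag descriptions that are unusually long/short or use internal jargon.

    descriptions: dict mapping concept name -> description text.
    internal_terms: words or phrases (e.g., internal code names) to flag.
    tolerance: allowed deviation from the median word count, as a fraction.
    """
    if not descriptions:
        return []
    word_counts = {name: len(text.split()) for name, text in descriptions.items()}
    typical = median(word_counts.values())
    warnings = []
    for name, text in descriptions.items():
        count = word_counts[name]
        if abs(count - typical) > tolerance * typical:
            warnings.append(f"{name}: {count} words vs. median {typical}")
        lowered = text.lower()
        for term in internal_terms:
            # Simple substring match; may over-flag terms inside longer words.
            if term.lower() in lowered:
                warnings.append(f"{name}: uses internal term '{term}'")
    return warnings
```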

Survey Design Overview

Start the survey with a screener that defines the target audience and key demographics to ensure a representative sample. End the survey with additional demographics (e.g., income) that are important for audience profiling, but are not used for quotas or in the audience definition.
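Survey platforms typically handle screening and quotas for you, but the logic is worth seeing. A minimal sketch, assuming a yes/no audience definition plus quotas on one demographic (age group here); the class name, criteria, and quota sizes are hypothetical. Profiling questions asked at the end of the survey, such as income, stay out of this logic because they aren't used for quotas or the audience definition.

```python
from collections import Counter


class QuotaScreener:
    """Admit respondents who match the target audience until quotas fill.

    qualifies: function(respondent) -> bool, the audience definition.
    quota_cell: function(respondent) -> label of the quota cell they fall in.
    quotas: dict mapping cell label -> number of completes wanted.
    Simplification: a quota slot is counted at screening, not at completion.
    """

    def __init__(self, qualifies, quota_cell, quotas):
        self.qualifies = qualifies
        self.quota_cell = quota_cell
        self.quotas = dict(quotas)
        self.filled = Counter()

    def screen(self, respondent):
        if not self.qualifies(respondent):
            return "screened_out"
        cell = self.quota_cell(respondent)
        if self.filled[cell] >= self.quotas.get(cell, 0):
            return "over_quota"  # cell is full (or not a cell we sample)
        self.filled[cell] += 1
        return "accepted"


# Hypothetical example: adults only, with a quota per age group.
screener = QuotaScreener(
    qualifies=lambda r: r["age"] >= 18,
    quota_cell=lambda r: "18-34" if r["age"] < 35 else "35+",
    quotas={"18-34": 150, "35+": 150},
)
```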

Design and program the survey for smaller screens (smartphones, tablets, etc.) by using accordion or banked grids, keeping text short, and limiting open-ended questions.

Timelines can vary wildly depending on the specific context of your project. We typically spend 5–7 weeks from start to finish. But duration also depends on how many markets, audience segments, metrics, and concepts are being tested. Below is a sample timeline.

I Have the Results! Now What?

The most important part of this process is turning your interpretation of the data into recommendations. The MaxDiff gives you a ranked list of concepts, and the metrics give you ratings on each concept's attributes; combining the two gives a clearer picture than either one alone.

Tips on combining these data sets:

- Metric ratings help explain why concepts rank where they do in the MaxDiff. Concepts that score high on both the metrics and the MaxDiff should be the top recommended concepts to pursue. Concepts with low MaxDiff rankings but high metric ratings may indicate untapped value. Concepts that score low on comprehension but higher on other metrics could fare better in qualitative concept testing with prototypes.

- It’s not all about the MaxDiff rankings. Lower-ranked ideas that score highly on other metrics might be worth pursuing. You may just need to clarify the value proposition and execution. Also, look for patterns that may emerge, such as commonalities among high-scoring concepts.

- Some metrics may be more important for your team. For example, if a concept scores high on Likelihood to Use but low on Effect on Experience, the idea may be too risky.

- Scores may differ across audience segments. Have a discussion with your team about which segments are most important and about any potential tradeoffs. (The answer may differ from concept to concept.) Segment differences can also help you distinguish between concepts whose overall ranking scores are close. For example, one concept may perform well in a younger age group, while another performs well in an older age group. You might recommend pursuing both concepts to reach a wider range of people, or double down on a specific segment and recommend concepts that interest only that group.

- User input is only one consideration the team should use to make a decision. Balance this research with estimates of engineering cost, data science opportunity sizing, team goal metrics, product team interest in a concept, etc. These considerations should be discussed with stakeholders (e.g., PM, engineering, design) in collaborative prioritization exercises, such as mapping concepts across evaluative frameworks.
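The first few tips can be turned into a first-pass triage before the team discussion. A minimal sketch, assuming MaxDiff ranks where 1 is most valuable and metrics summarized as top-two-box shares; the cutoffs (top 5 ranks, 60% favorable, 40% comprehension), the function name, and the label wording are hypothetical, and the output is a starting point for the prioritization conversation, not a substitute for it.

```python
def flag_concepts(maxdiff_rank, metrics, key_metrics,
                  top_n=5, strong=0.6, weak_comprehension=0.4):
    """Label concepts by combining MaxDiff rank with metric ratings.

    maxdiff_rank: dict concept -> rank (1 = most valuable).
    metrics: dict concept -> {metric name: top-two-box share, 0-1}.
    key_metrics: metrics the team weighs most (exclude "Comprehension").
    Returns dict concept -> list of labels (possibly empty), in rank order.
    """
    flags = {}
    for concept, rank in sorted(maxdiff_rank.items(), key=lambda kv: kv[1]):
        scores = metrics[concept]
        rates_well = all(scores[m] >= strong for m in key_metrics)
        labels = []
        if rates_well and rank <= top_n:
            labels.append("top recommendation")
        elif rates_well:
            labels.append("possible untapped value")
        # Low comprehension with otherwise strong ratings: clarify the
        # description and try it in qualitative testing with prototypes.
        if rates_well and scores.get("Comprehension", 1.0) < weak_comprehension:
            labels.append("clarify, then test with prototypes")
        flags[concept] = labels
    return flags
```

Running it once per audience segment puts the segment differences described above side by side.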

After working through those points, you’ll be able to put together a list of recommendations for your product team. We recommend organizing them into three sections: which ideas are worth pursuing, which need more discussion, and which to dismiss for now (and why).