Data scientists are users too.

There are many instances where it feels like someone attempted to make a data science tool for data scientists without ever having met a live one. If you take that product development approach, you remind me of bros trying to break into the tampon market without ever consulting a woman. What could possibly go wrong…?

If you’re a toolmaker who’s never heard of user experience (UX) design, I’m happy to welcome you to the 21st century. Stop reading this and trundle over to Wikipedia. You’re in for a treat! So much has happened while you were sleeping.

“It’s important to understand how the end user uses the product!” (I found this image here.)

Personas

UX101 mentions user personas right out of the gate. These will be hard to generate if you’ve never met all the real-world versions of people from this list. To design nice things for us, you need to take the time to build that empathy.

I’m sorry it’s hard to wrap your heads around us, but we’re not the typical software engineer. For starters, if you look at the list, you’ll notice that we come in different flavors. Surprise! There are different kinds of data science professionals. Which one are you designing for? Have you taken the time to understand why an analyst doesn’t care if a tool is production-worthy but an ML engineer does? (That puzzle piece will come in handy if you’re confused by the R vs Python fuss.) Do you know why statisticians might flip a table if you tricked them into using a tool optimized for speedy analytics? If not, those are two great places to start your detective work.

What good design looks like

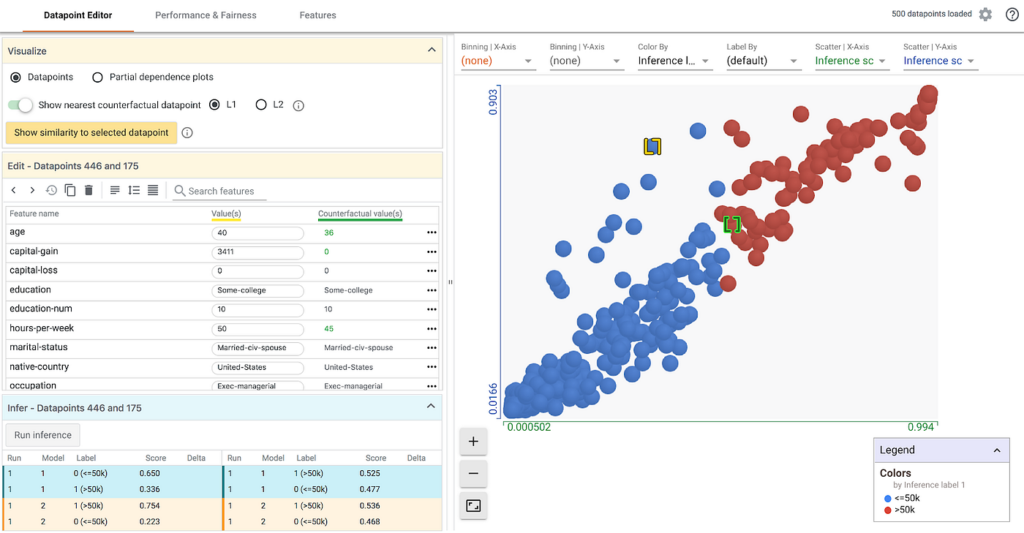

A collaboration that I’m proud to be part of is the What-If Tool, as in “What if getting a look at your model performance and data during ML/AI development wasn’t such a royal pain in the butt?” Being able to get a grip on your progress is the key to speedy iteration towards an awesome ML/AI solution, so good tools designed for analysts working in the machine learning space help them help you meet ambitious targets and catch problems like AI bias before they hurt your users.

Something I love about the What-If Tool’s approach is that data science UX was not an afterthought — the project included a visual designer and UX engineer from the start. The first version (released in late 2018) was designed for analysts supporting teams committed to TensorFlow development. We knew TensorFlow’s grumpy opacity would frustrate analytics enthusiasts, so we started there.

We gradually expanded our target user group to any ML/AI analyst working with models in Python, culminating in What-If Tool v1.0, announced at TensorFlow Dev Summit 2019 earlier this month. The same event brought groundbreaking news about TensorFlow’s stronger overall commitment to user experience, which I’ll cover in a separate post very soon.

That’s right: model understanding and quick data exploration for feature selection/preprocessing insights even if you’re allergic to TensorFlow. Complete with handy AI bias detection because that’s often an ML/AI analyst’s first question. Does it work with Jupyter notebooks? You bet! Built-in Facets? Sure thing!

Take the What-If Tool for a test drive here.

We knew we wanted this to be a great tool for ML/AI analysts, so we observed real analysts using the tool in their natural habitats and in usability workshops. We incorporated their screams of frustration to drive better design until the sobbing subsided and the scowls turned into smiles (mostly — it’s not perfect yet, but we’re working on it). This tool isn’t some accidental roadkill that we’re foisting on the unsuspecting data scientist. We made it for you and we hope you’ll like it. (And please do give us feedback on the site so we can keep making it better.)

We’re also aware of who’s not in our intended user group. Statisticians won’t be fans unless they moonlight as analysts. Researchers have probably already cobbled together their own niche version. Complete beginners might be better off learning the basics elsewhere first.

Whatever else you might say about the What-If Tool, the part I’m most proud of is that we took UX design seriously and put in the effort to understand our data science users. (We even know why you’re annoyed that we had to compromise for the sake of our other target user group and keep the TensorFlow legacy lingo that makes traditional data scientists want to punch something. Yeah, that “inference” isn’t inference. We feel you.)

If you’re eager to see the What-If Tool in action, you don’t have to install anything — just go here. We’ve got dazzling demos and docs aplenty. If you want to start using it for realsies, you don’t even need to install TensorFlow. Simply pip install witwidget.

What’s my point?

The moral of the story is that if you want happy data scientists, you have to understand us. It’s sad to see how rarely non-data-scientists take the time. If you’re one of us, check that whoever you’re about to trust with your career understands you and your needs. Ask potential employers pointed questions about data, decision-makers, and tools. If you’re hiring us, make sure you have what we need to be happy and effective. If you’re designing tools for us, learn who we are and how we think.

Sure, that’s hard work if you’ve spent your life avoiding us because someone yelled at you for confusing correlation with causation once upon a time… The question is: are we worth the effort?

Are data scientists worth it?

If you’re a product manager, engineer, or user experience designer thinking about just sitting this whole thing out and not bothering to get to know what makes your data scientist comrades tick, you’re taking a bet on data science being a bubble and AI being a fad. It’s not a bet I would recommend, because though the names may evolve, data science and AI are fundamentally about making data useful and I can’t imagine your having less data in the future than today.

I’ve often argued that information is valuable, as has anyone who has ever said, “Knowledge is power.” Investing in professionals whose skills are geared toward helping you make the most of information is a great way to get or keep your edge in the market. Whatever you call the professionals who make your data useful for you, that role is only going to become more prevalent in your industry. You’re going to have to understand who we are sooner or later. You may as well get the early bird special and investigate us while your colleagues are still snoozing. Your empathy and ability to collaborate with us will be an incredible advantage in your careers too.

I believe we are worth your time. Making data useful is the future and that’s what we do for you. Let’s be friends!