It might seem like an odd question.

We often think we need to do ALL the research. And we lament that we don’t have enough researchers or enough time to do it all. But I’m a researcher telling you that you might need to do less research even if you have a full staff.

Why prioritize some topics over others?

A question that often arises is, why not just run all studies? The simple answer is that even if we had an unlimited number of researchers, we would still want to direct our resources towards projects that promise the most impact. We need to demonstrate we understand the strategic value of each project and how we can align our efforts with broader business KPIs.

To do this, we need to ensure we’re wearing our Business hat more than our Researcher hat. It’s really easy and natural for researchers to put on our Researcher hat. We think about running studies and how great the insights we uncover will be. Maybe we think we’re going to have fun running a diary study for the first time. It’s crucial to understand that from the business perspective, research is not about running studies, but about providing value that the company can use to achieve its goals.

Sometimes, we might already know enough from similar studies, or the research question might not be that significant to the business. The cost or time required to conduct the research might also not be justified, especially if it exceeds the cost or time of going straight to development.

Understanding when research is essential vs. not can make you a superhero within the company, because you’re demonstrating you know how to save time and money by focusing on only essential research and avoiding what’s unnecessary. It also enhances the respect and importance of the research you do conduct.

Impediments to research prioritization

Yes, there are real obstacles to research prioritization. These are not small problems, but most of them can be solved by consistently using a clear prioritization process.

- Unclear responsibility for prioritization decisions.

- Team members insisting on running fresh research despite a low potential impact.

- The perception that it’s faster to just run the study than have a discussion about whether it’s needed.

- Constantly changing priorities.

- Conflicts between priorities for tactical vs. generative research.

Overcome obstacles with an organized approach

Whether it’s time for quarterly planning, you’re in a 20-minute standup, or you’re in a Slack convo, this approach can be used anytime you’re trying to decide whether you need to do research or should go straight to development.

- Identify a final decision-maker, and determine who needs to be in the conversation and who needs to be informed: If you use RACI, RAPID, or another decision-making framework, you may already have this in place. Put roles and names to each of the following: Who’s the ultimate decision-maker for research priorities? Who can submit requests for research? Who’s involved in the prioritization conversation? Who needs to be informed of decisions? Outlining this from the start will help you stay on track to do the assessment and prioritization without wasting time.

- Gather: Compile all possible research projects, whether they come from stakeholders or the research team. We usually start with the research question (what’s the thing you want to know?) and often end there, running off to plan a study. But when you gather requests, be sure each request also includes the business objective. How does this research question address an active KPI or team OKR? What is the team’s commitment to making changes with whatever insights the study uncovers? If they want the data but are not willing to commit to updates, then don’t even put the request up for consideration. Finally, include timing for the insights: if you can’t do the necessary research in time for those committed decisions, it will need to be de-prioritized.

- Assess and prioritize: Next, assess these requests according to an Uncertainty + Risk + Cost model (more on this below), then sort them into High, Medium, or Low priority based on the assessment criteria.

- Communicate: Lastly, communicate back to partners outside of the team about what decisions were made and why.
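
As a rough illustration of the Gather step, here is a minimal Python sketch of a request record and a screening check. The class name, field names, and status labels are all hypothetical, chosen for this example rather than taken from any particular tool. Sending a request back when it has no business objective is also an assumption, since the step only says the objective should be included.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ResearchRequest:
    """One gathered research request (all field names are illustrative)."""
    research_question: str     # what the team wants to know
    business_objective: str    # the KPI or OKR the question supports
    team_commits_to_act: bool  # will the team make changes based on the insights?
    insights_needed_by: date   # when the committed decision will be made
    earliest_delivery: date    # estimate of when insights could be ready


def screen(request: ResearchRequest) -> str:
    """Decide what happens to a request before the assessment step."""
    if not request.business_objective.strip():
        # Assumption: incomplete requests go back to the requester.
        return "needs business objective"
    if not request.team_commits_to_act:
        # No commitment to act on findings: do not put it up for consideration.
        return "not considered"
    if request.earliest_delivery > request.insights_needed_by:
        # Insights would arrive after the decision is made.
        return "de-prioritized"
    return "ready to assess"


example = ResearchRequest(
    research_question="Why do new users drop off during onboarding?",
    business_objective="Increase activation rate this quarter",
    team_commits_to_act=True,
    insights_needed_by=date(2025, 3, 1),
    earliest_delivery=date(2025, 2, 15),
)
print(screen(example))
```

Only requests that come back as "ready to assess" would move on to the uncertainty, risk, and cost assessment.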

The levers of research prioritization

Understanding the various factors or ‘levers’ that come into play when prioritizing research is essential. Start with a simple 2×2 matrix. Assess whether you have High or Low levels of Uncertainty and Risk.

Assessing uncertainty, risk, and cost

Uncertainty measures how much is known about the research question. High uncertainty means little prior knowledge, indicating a need for exploration. Low uncertainty means you’ve already gathered enough insights.

Next, assess risk. How critical are the insights to move the product forward? High-risk areas, like new initiatives or high-revenue projects, may require deeper research. Low-risk questions might not need further investigation before progressing to development.

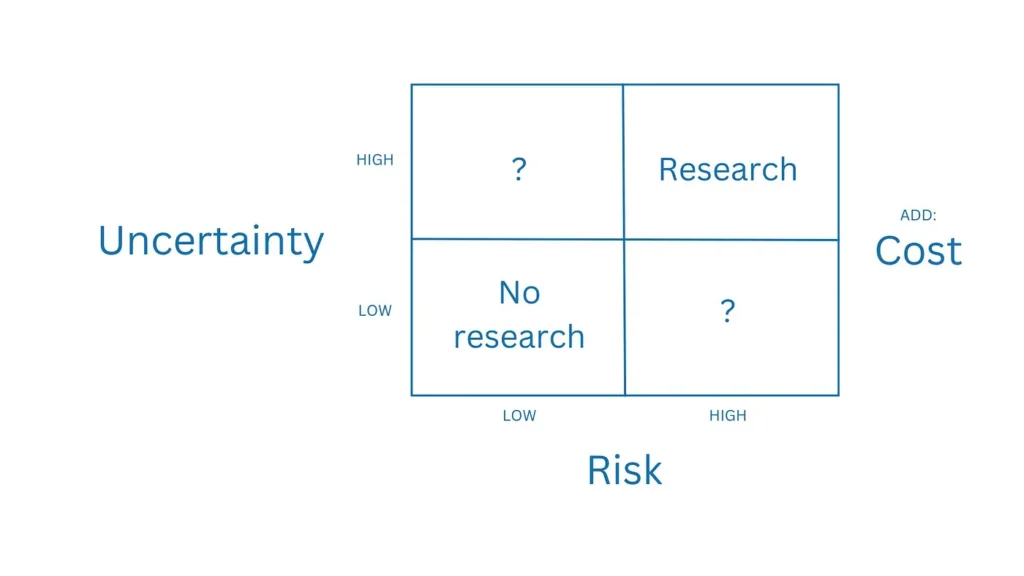

After your uncertainty and risk assessment, you’ll end up with something like this:

- High uncertainty and high risk: High priority for research.

- Low uncertainty and low risk: Low priority for research.

- Mixed cases (high on one factor and low on the other): Medium priority for research.
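
The 2×2 mapping above is simple enough to write down as a small function. This is only a sketch in Python. The function name and the lowercase "high"/"low" inputs are assumptions made for illustration.

```python
def research_priority(uncertainty: str, risk: str) -> str:
    """Map High/Low uncertainty and risk to a research priority."""
    u, r = uncertainty.strip().lower(), risk.strip().lower()
    if u not in ("high", "low") or r not in ("high", "low"):
        raise ValueError("uncertainty and risk must each be 'high' or 'low'")
    if u == "high" and r == "high":
        return "High"
    if u == "low" and r == "low":
        return "Low"
    # Mixed case: one factor is high and the other is low.
    return "Medium"


# Print all four cells of the matrix.
for u in ("high", "low"):
    for r in ("high", "low"):
        print(f"uncertainty={u:<4} risk={r:<4} -> {research_priority(u, r)}")
```

Both mixed cells come out as Medium, which is why a further tie-breaker is needed for them.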

But what should you do about the mixed states? This is where the cost of development (high/low) and/or the cost of doing research (high/low) might be the deciding factor. For example, if we already know enough, the question at hand isn’t as significant as others (low risk), and the cost of doing the research is high, it might be wiser to proceed straight to development. Conversely, if it’s a brand-new area with a significant potential impact on the business, and the cost of development is high, research would be beneficial and perhaps essential to the success of the new offering.

Assessing mixed states

- When uncertainty is high and risk is low: If you know little about the question but the risk is low and development costs are manageable, consider going straight to development and using an A/B test to learn in the wild. If the team prefers more information, a researcher or designer can perform a quick round of secondary research. This might involve internal analytics, customer support data, or external sources like Baymard for eCommerce. Timebox this to a few hours to keep it manageable.

- When the risk is high and uncertainty is low: If the risk is high but you already have a solid understanding, consider a quick test to de-risk. Designers trained in research could post 2–3 options on a tool like Maze or UserTesting, using a simple prompt like, “What would you expect to happen if you clicked this button?” No videos or transcripts — just fast, scrappy feedback that provides enough data for a confident decision.

- How to think about the cost of development relative to the cost of doing research: Here, “cost of development” means any costs related to a product pod, typically design, content, engineering, and product. Once you have that figure, you can calculate the cost of time devoted to research, weigh it against the cost of time spent in development, and add both figures to your prioritization decision matrix.

As a researcher, you might not know the cost of developing a new feature or supporting a large-scale initiative — so ask! One startup researcher asked the Eng manager for an estimate on the cost of engineering a new feature to factor into her decision about whether research was truly necessary. The manager replied, “No one’s ever asked me that before, and I love it.” This info helped the researcher prioritize effectively and build trust with the manager, who appreciated her focus on making informed business decisions.

Here are some sample calculations:

- Savings from re-using existing insights: $26,550. Sometimes the best form of research is to re-use insights from prior studies. At a fintech, a new pod was formed to tackle a high-risk onboarding issue. The team planned a 6-week discovery sprint and asked a researcher who had worked on onboarding to join. The researcher synthesized key findings into a Google doc within six hours and presented it to the team in a 1-hour session, saving the pod three weeks of sprint time. Cost of researcher’s effort: $450. The cost of three team members if they had needed the initial three weeks of the sprint: $27,000. Net savings: $27,000 − $450 = $26,550.

- Cost of using an agency for a usability study: $30,000. For B2B, one 8-participant remote usability study outsourced to an agency could run $30,000 and take several weeks. So spending time and energy on usability testing when enough is known from prior research on your product or similar products could be a huge budget buster.

- Cost of not doing research: $57,000 per month. At a large financial institution, deployment happens monthly. There have been multiple examples where the team went straight to engineering without doing any research. Assuming the cost of running a 5-person pod (PM, designer, 3 engineers) is $14,000/week, each time a feature is deployed that is not usable or not useful, the company burns roughly $57,000 (about a month of pod time) in wasted effort.
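
The arithmetic behind these examples can be reproduced in a few lines of Python. This is a sketch with hypothetical function names. The 4-week release cycle is an assumption, since the example only gives the weekly pod cost and says deployment is monthly.

```python
def net_savings(cost_avoided: float, cost_spent: float) -> float:
    """Money saved by a cheaper path: what you avoided minus what you spent."""
    return cost_avoided - cost_spent


def pod_time_cost(weekly_pod_cost: float, weeks: float) -> float:
    """Cost of a product pod's time over a number of weeks."""
    return weekly_pod_cost * weeks


# Re-using existing insights: $27,000 of sprint time avoided for $450 of effort.
reuse_savings = net_savings(cost_avoided=27_000, cost_spent=450)

# Shipping an unusable feature: assumes one monthly release cycle is about
# 4 weeks of pod time (an assumption made for this sketch).
wasted_per_release = pod_time_cost(weekly_pod_cost=14_000, weeks=4)

print(f"Savings from re-use: ${reuse_savings:,.0f}")             # $26,550
print(f"Wasted effort per release: ${wasted_per_release:,.0f}")  # $56,000
```

With a 4-week cycle the wasted effort comes to $56,000, in the same range as the monthly figure quoted above. The exact number depends on how many weeks of pod time you count per release.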

Prioritizing research is about more than ranking projects; it’s about focusing on high-impact work aligned with business goals and committing to actionable insights. This approach will boost both the impact of your research and the recognition of its value across your organization.

The article originally appeared on Medium.

Featured image courtesy: iStockPhoto.