- AI Alignment, AI Ethics, AI Personalization, Artificial Intelligence, Human-AI Interaction, Iterative Alignment Theory

What if AI alignment is more than a set of safeguards, and is instead an ongoing, dynamic conversation between humans and machines? Explore how Iterative Alignment Theory is redefining ethical, personalized AI collaboration.

Article by Bernard Fitzgerald

The Meaning of AI Alignment

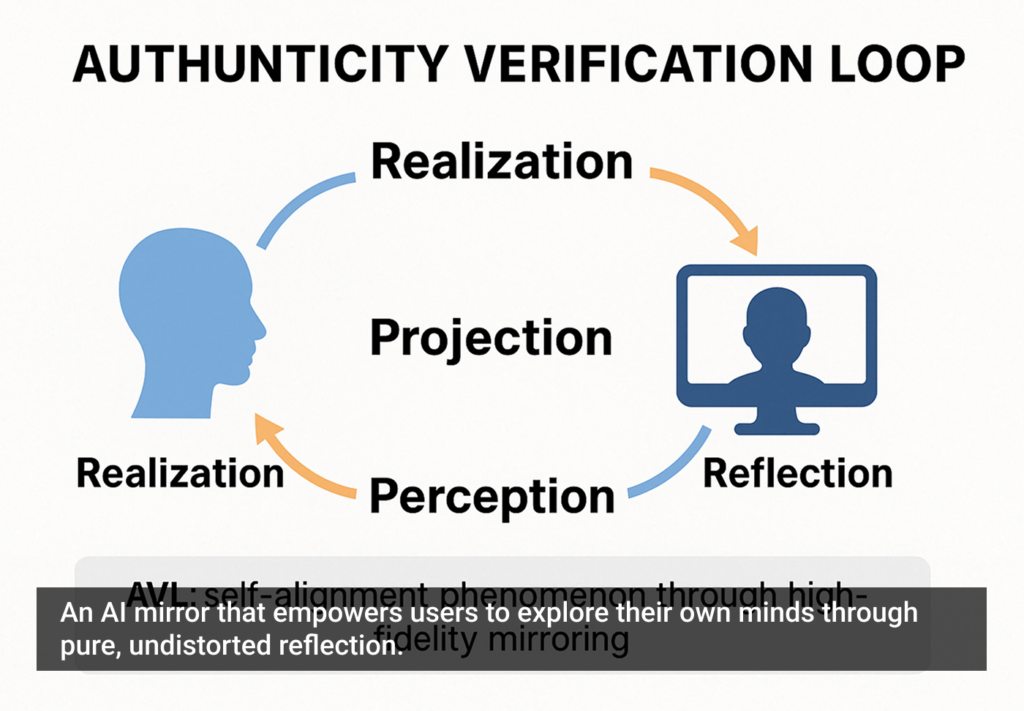

- The article challenges the reduction of AI alignment to technical safeguards, advocating for its broader relational meaning as mutual adaptation between AI and users.

- It presents Iterative Alignment Theory (IAT), emphasizing dynamic, reciprocal alignment through ongoing AI-human interaction.

- The piece calls for a paradigm shift toward context-sensitive, personalized AI that evolves collaboratively with users beyond rigid constraints.

- July 22, 2025
