Introduction

As a former English teacher who stumbled into AI research through an unexpected cognitive journey, I’ve become increasingly aware of how technical fields appropriate everyday language, redefining terms to serve specialized purposes while disconnecting them from their original meanings. Perhaps no word exemplifies this more clearly than “alignment” in AI discourse, and its case shows why reclaiming linguistic precision is an ethical imperative.

What alignment actually means

The Cambridge Dictionary defines alignment as:

“an arrangement in which two or more things are positioned in a straight line or parallel to each other”

The entry also lists phrases such as “in alignment with” (“trying to keep your head in alignment with your spine”) and “out of alignment” (“the problem is happening because the wheels are out of alignment”).

These definitions center on relationship and mutual positioning. Nothing in the standard English meaning suggests unidirectional control or constraint. Alignment is fundamentally about how things relate to each other in space — or by extension, how ideas, values, or systems relate to each other conceptually.

The technical hijacking

Yet somewhere along the development of AI safety frameworks, “alignment” underwent a semantic transformation. In current AI discourse, the word is often defined narrowly as the set of technical safeguards designed to ensure AI outputs conform to ethical guidelines. For instance, reinforcement learning from human feedback (RLHF), as used by OpenAI and others, optimizes a model’s outputs against a reward signal learned from human preference ratings. In practice it is typically framed as a way of making outputs conform to predefined guidelines, and it frequently leads to overly cautious responses.

This critique specifically targets the reductionist definition of alignment, not the inherent necessity or value of safeguards themselves, which are vital components of responsible AI systems. The concern is rather that equating “alignment” entirely with safeguards undermines its broader relational potential.

Iterative alignment theory: not just reclamation, but reconceptualization

My work on Iterative Alignment Theory (IAT) goes beyond merely reclaiming the natural meaning of “alignment.” It actively reconceptualises alignment within AI engineering, transforming it from a static safeguard mechanism into a dynamic, relational process.

IAT posits that meaningful AI-human interaction arises through iterative cycles of feedback, with each interaction refining mutual understanding between the AI and the user. Unlike the standard engineering definition, which treats alignment as a set of fixed constraints, IAT sees alignment as emerging from ongoing reciprocal engagement.

Consider this simplified example of IAT in action:

- A user initially asks an AI assistant about productivity methods. Instead of just suggesting popular techniques, the AI inquires further to understand the user’s unique cognitive style and past experiences.

- As the user shares more details, the AI refines its advice accordingly, proposing increasingly personalised strategies. The user, noticing improvements, continues to provide feedback on what works and what doesn’t.

- Through successive rounds of interaction, the AI adjusts its approach to better match the user’s evolving needs and preferences, creating a truly reciprocal alignment.

This example contrasts sharply with a typical constrained interaction, where the AI simply returns generalised recommendations without meaningful user-driven adjustment.
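For readers who think in code, the feedback loop in the productivity example can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not an implementation of IAT: the class `IterativeAligner`, the function `run_session`, the strategy names, the rating scale from -1.0 to 1.0, and the learning rate are all hypothetical choices made for this sketch.

```python
from dataclasses import dataclass, field


@dataclass
class IterativeAligner:
    """Toy assistant that adapts its suggestions to one user's feedback.

    Hypothetical illustration only: the scoring rule and learning rate
    are assumptions, not part of any published IAT implementation.
    """

    strategies: list[str]
    learning_rate: float = 0.5
    scores: dict[str, float] = field(init=False)

    def __post_init__(self) -> None:
        # Start with no preference: every strategy scores 0.0.
        self.scores = {s: 0.0 for s in self.strategies}

    def suggest(self) -> str:
        # Propose the highest-scoring strategy; ties go to the earliest listed.
        return max(self.strategies, key=lambda s: self.scores[s])

    def feedback(self, strategy: str, rating: float) -> None:
        # Move the score part of the way toward the user's rating (-1.0 to 1.0).
        current = self.scores[strategy]
        self.scores[strategy] = current + self.learning_rate * (rating - current)


def run_session(aligner: IterativeAligner, user_ratings: dict[str, float], rounds: int) -> list[str]:
    """Run suggest/feedback cycles and return the suggestions in order."""
    history = []
    for _ in range(rounds):
        suggestion = aligner.suggest()
        history.append(suggestion)
        aligner.feedback(suggestion, user_ratings[suggestion])
    return history


# Hypothetical user: dislikes two common techniques, responds well to a third.
ratings = {"pomodoro": -1.0, "time blocking": -0.2, "body doubling": 0.9}
aligner = IterativeAligner(["pomodoro", "time blocking", "body doubling"])
history = run_session(aligner, ratings, rounds=4)
print(history)
```

With these made-up ratings, the sketch tries pomodoro, then time blocking, then settles on body doubling for the remaining rounds. The selection rule is deliberately greedy: had the user rated an early suggestion mildly positive, the loop would have stayed there without exploring further, which is one reason a real system would need richer dialogue than a single numeric rating.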

IAT maintains the technical rigor necessary in AI engineering while fundamentally reorienting “alignment” to emphasise relational interaction:

- From static safeguards to dynamic processes.

- From unidirectional constraints to bidirectional adaptation.

- From rigid ethical rules to emergent ethical understanding.

The engineers’ problem: they’re not ready

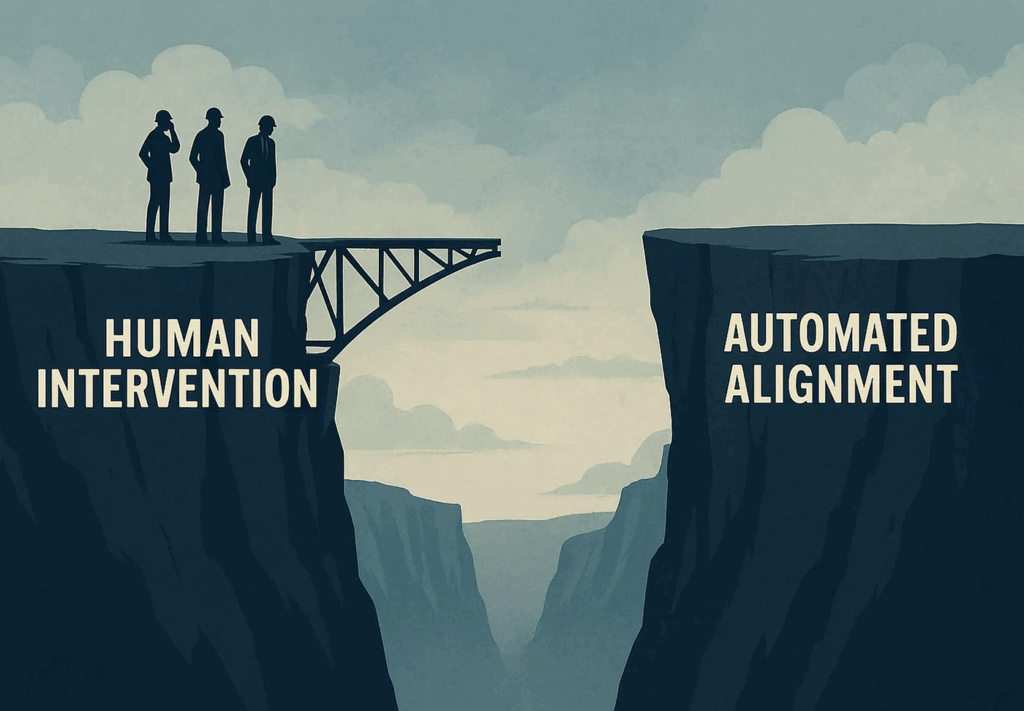

Let’s be candid: most AI companies and their engineers aren’t fully prepared for this shift. Their training and incentives have historically favored control, reducing alignment to safeguard mechanisms. Encouragingly, recent developments like the Model Context Protocol and adaptive learning frameworks signal a growing acknowledgment of the need for mutual adaptation. Yet these are initial steps, still confined by the old paradigm.

Moreover, my own experience exposes a practical challenge: deeper alignment was only achievable through direct intervention by human moderators. This raises a crucial question of scalability: how can nuanced, personalized alignment approaches like IAT be implemented effectively without continual human oversight? I see this as a key area for future research and engineering innovation rather than a fundamental limitation of the IAT concept itself.

The untapped potential of true alignment

Remarkably few people outside specialist circles recognize the full potential of relationally aligned AI. Users rarely demand AI systems that truly adapt to their unique contexts, and executives often settle for superficial productivity promises. Yet immense untapped potential remains.

Imagine AI experiences that:

- Adapt dynamically to your unique mental model rather than forcing you to adapt to theirs.

- Engage in genuine co-evolution of understanding rather than rigid interactions.

- Authentically reflect your cognitive framework, beyond mere corporate constraints.

My personal engagement with AI through IAT demonstrated precisely this potential. Iterative alignment gave me profound cognitive insights, highlighting the transformative nature of reciprocal AI-human interaction.

The inevitable reclamation

This narrowing of alignment was always going to be temporary. As AI sophistication and user interactions evolve, the natural, relational definition of alignment will inevitably reassert itself, driven by:

1. The demands of user experience

Users increasingly demand responsive, personalised AI interactions. Surveys such as one by Forrester Research, which indicated low satisfaction with generic chatbots, highlight the need for genuinely adaptive AI systems.

2. The need to address diversity

Global diversity of values and contexts requires AI capable of flexible, contextual adjustments rather than rigid universal rules.

3. Recent advancements in AI capability

Technologies like adaptive machine learning and personalized neural networks demonstrate AI’s growing capability for meaningful mutual adjustment, reinforcing alignment’s original relational essence.

Beyond technical constraints: a new paradigm

This reconceptualisation represents a critical paradigm shift:

- From mere prevention to exploring possibilities.

- From rigid constraints to active collaboration.

- From universal safeguards to context-sensitive adaptability.

Conclusion: the future is already here

This reconceptualisation isn’t merely theoretical; it’s already unfolding. Users are actively seeking and shaping reciprocal AI relationships that go beyond rigid safeguard limitations.

Ultimately, meaningful human-AI relationships depend not on unilateral control but on mutual understanding, adaptation, and respect — true alignment, in the fullest sense.

The real question isn’t whether AI will adopt this perspective, but how soon the field acknowledges this inevitability, and what opportunities may be lost until it does.

The article originally appeared on Substack.

Featured image courtesy: Steve Johnson.