New research finds AI could help people emotionally support others in need.

A new study has found that an AI system helped people feel able to respond more empathically in online, text-based, asynchronous peer-to-peer support conversations.

Artificial intelligence has been used to work alongside humans in many industries, including customer service, healthcare, and mental health. Human-AI collaboration is most common for low-risk tasks, such as drafting emails with real-time writing assistance like Gmail’s Smart Compose, or checking spelling and grammar. Augmenting people with AI, rather than replacing them with it, is especially important in complex, high-stakes areas that require human judgment and oversight, such as mental health care and military decision-making.

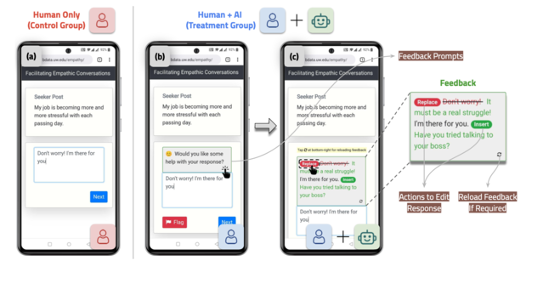

The AI system, named HAILEY (Human-AI coLlaboration approach for EmpathY), is an “AI-in-the-loop agent that provides people with just-in-time feedback” on how to word their responses more empathically. This differs from an AI-only system, which generates responses from scratch without any collaboration with a person.

The collaborative AI system offers real-time suggestions for expressing empathy, based on the response the user has already written. The user can accept or reject each suggestion, or reword their response in light of the feedback, so the user keeps final authorship of the wording. The goal is to augment human interaction through human-AI collaboration, not to replace it with AI-generated responses. The study examined the impact of this AI system as a consultant to peer supporters on TalkLife, an online support platform where peers support one another through asynchronous posts rather than chat or text messaging.

Lessons from a Human-AI Collaborative Approach to Empathic Communication

Many participants felt that the AI system’s suggestions were better than their own responses. About 63% of people who tried the AI system found its feedback helpful, and 77% said they would want this kind of feedback system on the peer-to-peer support platform. When people consulted the AI system, 64% accepted its suggestions, 18% reworded them, and 17% rejected them.

People will ignore an AI system if it is not useful, is too general, or makes wrong assumptions. Of the 139 people who had access to the AI system, 37 (about 27%) chose to ignore it. They did not use its suggestions because the feedback was not tailored closely enough to the situation or made incorrect assumptions about it.

Collaborating with an AI system that offers useful suggestions and feedback can help people feel more capable of expressing empathy. About 70% of participants said they felt more confident providing support after using the system, and the research team found that the human-AI collaborative approach led to a nearly 20% increase in feeling able to express empathy. Collaborating with the AI system appeared to boost self-confidence in writing a supportive response, particularly among those who reported difficulty providing support.

People who find expressing empathy difficult reported an even larger increase in their confidence providing support (39%). People who find a task harder may rely more on algorithms, so those who struggle to express empathy are likely to benefit most from this AI system. Longer-term research is needed to assess whether consulting this AI system has a lasting impact on empathic ability.

More research is needed to determine whether the recipients of human-AI collaborative messages feel more supported. Notably, this study did not measure “perceived empathy”: the recipients of these human-AI messages did not rate them for empathy. Research that measures perceived empathy is needed to assess whether this AI system improves empathy on both sides of the conversation, for the people writing AI-assisted messages and for the people receiving them.

Design Challenges of Human-AI Collaboration for Improving Empathy

There are several design considerations when creating AI systems to facilitate empathy.

People are more likely to collaborate with an AI system that offers suggestions when they need them most. Because difficulty with a task tends to make people more willing to turn to an algorithm, a system that detects when users are struggling to compose an empathic response, and offers suggestions at that moment, could increase the likelihood that they collaborate with it.

AI systems trained to suggest different types of empathy, as well as non-empathic responses, could be used more widely. Because empathy takes many forms, a system trained to suggest the type of empathy that fits the situation would give more specific and nuanced feedback.

Empathy is not always the most appropriate or supportive response, even on a peer-to-peer support forum; in this study, researchers excluded crisis and otherwise inappropriate situations from testing. An important design consideration is to train AI systems that can suggest approaches other than empathy when needed, including problem-solving, referral to outside resources (e.g., mental health or crisis hotlines), escalation to a human moderator, or flagging content that should not receive an empathic response (e.g., harassing, bullying, or abusive language). This would likely require training data that demonstrate many types of nuanced empathic responses, each appropriately tailored to a wide variety of prompts. Developers should also be aware of biases that may exist in this underlying data.

Chatting, messaging, and social media platforms could consider whether adopting such an empathy-enhancing AI system would encourage more supportive communication among their users. Testing this AI system on additional platforms will help determine whether it can encourage positive communication beyond peer-to-peer support forums. This study focused on an online peer-to-peer support forum of asynchronous posts, which gives responders time to consider their replies. In live chat or messaging, timing and interactivity matter more, so further research is needed to determine whether such a system can enhance empathy on live, interactive platforms.

Do people feel differently about a response if they know it was written with the help of AI? Because people using this type of system keep the final say, a human-AI collaborative approach may be more readily accepted and experienced as authentic than a purely AI-generated one. People are also likely to feel more confident in a system that is transparent, reliable, trustworthy, and accurate, especially as AI becomes a more commonplace and familiar part of daily life.

Overall, this promising and innovative study adds to the field by showing how human-AI collaboration can enhance empathy. A scalable, trustworthy empathy tool that can be integrated into digital communication platforms would be a game-changer for empathic design.