
The field of artificial intelligence has achieved remarkable milestones in recent years, with models demonstrating impressive language proficiency. However, a pivotal question persists around whether these systems can truly grasp sophisticated reasoning skills on par with human cognition.

This extensive survey paper (Sun et al. 2023) dives deep into the intersection between state-of-the-art foundation models and the complex task of reasoning. It aims to assess how well modern AI systems emulate the human mind’s analytical capabilities.

The introduction puts reasoning in context by distinguishing two modes of thinking conceptualized as “System 1” and “System 2”. System 1 represents rapid, emotion-influenced, mostly instinctive decision-making. In contrast, System 2 signifies slower and more deliberate analytical thinking, carefully evaluating arguments and weighing consequences using logical reasoning.

The paper makes it clear that while System 1 is undoubtedly powerful, the singular ability to carry out logical analysis places System 2 as the pinnacle of higher-order human thinking. Mastering it would indicate a pivotal leap for AI towards deeper intelligence.

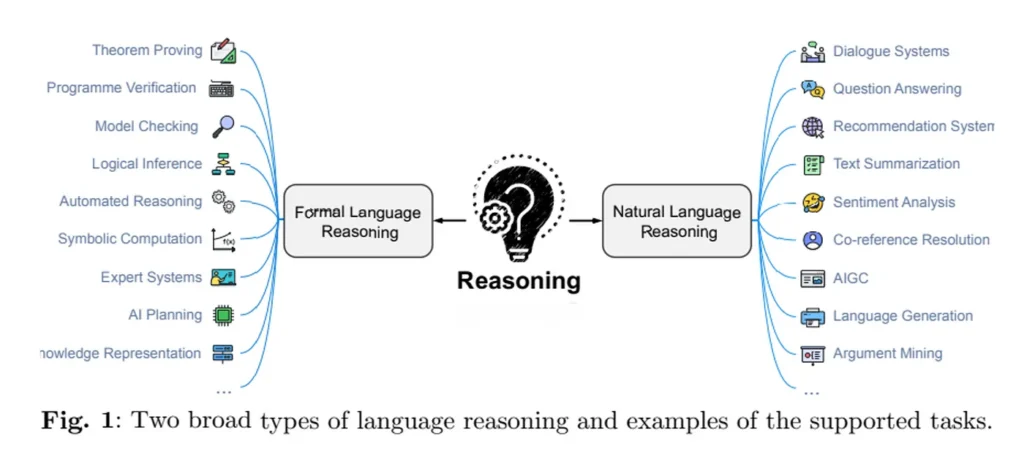

Reasoning itself has two main forms:

- Formal reasoning: Strictly rule-based analysis using mathematical logic

- Natural language reasoning: More intuitive reasoning that handles ambiguity and everyday situations

By evaluating the latest advancements where Large Language Models (LLMs) are applied to different reasoning tasks, the survey aims to assess progress and possibilities while identifying open challenges around achieving System 2-style thinking in AI.

The scope covers formal rule-based analysis like software verification as well as more flexible applications like open-ended question answering. By tying together recent research efforts, the paper hopes to stimulate exploration around developing systems that more closely emulate multifaceted human reasoning abilities.

Background

The paper establishes an in-depth understanding of reasoning by exploring various definitions and perspectives. It describes reasoning as a multifaceted concept enabling the analysis of information and drawing of meaningful conclusions or arguments.

Some key points covered:

Diverse Definitions

Reasoning carries specific connotations in philosophy (modeling conclusions from incomplete knowledge), logic (methodical inference based on premise relations) and natural language processing (deriving new assertions from knowledge integration).

Classification of Reasoning

Prominent categorizations include deduction, induction and abduction.

Deduction applies strict logical inference to derive conclusions that necessarily follow from the premises.

Induction generalizes patterns from evidence without absolute certainty.

Abduction generates potential hypotheses aiming to best explain observed information based on the available facts.

Mathematical Representations

Reasoning can be expressed mathematically in various frameworks like propositional logic, probability theory and graph theory.
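To make the propositional-logic framing concrete, here is a minimal sketch (not taken from the survey) that checks whether a conclusion follows from premises by enumerating every truth assignment. The helper names `entails` and `implies` and the rain/wet example are illustrative assumptions.

```python
from itertools import product

def entails(premises, conclusion, variables):
    """True if every assignment that satisfies all premises also satisfies the conclusion."""
    for values in product([True, False], repeat=len(variables)):
        env = dict(zip(variables, values))
        if all(p(env) for p in premises) and not conclusion(env):
            return False  # counterexample: premises hold but the conclusion fails
    return True

def implies(a, b):
    # Material implication: "a -> b" is false only when a is true and b is false.
    return (not a) or b

# Illustrative propositions (assumed for this example).
rule = lambda env: implies(env["rain"], env["wet"])  # "if it rains, the ground is wet"
rain = lambda env: env["rain"]
wet = lambda env: env["wet"]

VARIABLES = ["rain", "wet"]
deduction_is_valid = entails([rule, rain], wet, VARIABLES)   # modus ponens
reverse_is_valid = entails([rule, wet], rain, VARIABLES)     # affirming the consequent

print(deduction_is_valid, reverse_is_valid)
```

The first check is a valid deduction. The second is not logically valid, which is why inferring "rain" from "wet" can only be treated as a plausible hypothesis, in the spirit of abduction, rather than a proof.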

Practical Applications

When developing AI systems, strict mathematical categorizations are less useful than flexible approaches tackling real-world reasoning challenges, which are often too complex for traditional formalisms.

Categories of Reasoning

There are two broad categories of reasoning:

Formal Language Reasoning: Uses strict logical rules and mathematical analysis. Includes theorem proving, program verification, etc.

Natural Language Reasoning: More flexible style in line with human cognition. Handles everyday situations. Enables question-answering and dialogue systems.

Formal Language Reasoning

This style of reasoning involves strict adherence to logical rules and systems. Conclusions are derived through rigorous mathematical analysis rather than flexible interpretations.

Some areas where formal reasoning is applied:

- Theorem Proving: Establishing mathematical theorems as logically valid based on axioms and inference rules.

- Program Verification: Formal methods to prove the correctness of software by showing it meets its specifications.

- Model Checking: Verifying that finite-state models of systems satisfy desired properties.

- Logic Inference: Drawing new logical conclusions from a set of premises through deductive reasoning.

- Automated Reasoning: Using computational logic to automate reasoning tasks like mathematical proof generation.

Overall, formal reasoning leverages structured logic and mathematically-grounded analysis for unambiguous, systematic inference.
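As one small illustration of the model-checking item above, the sketch below exhaustively explores a finite-state system and checks a safety property. The traffic-light transition table and the function names are hypothetical, and real model checkers handle far larger state spaces and richer temporal properties.

```python
from collections import deque

# Hypothetical two-light intersection: each state is (north_south, east_west).
TRANSITIONS = {
    ("red", "red"): [("green", "red"), ("red", "green")],
    ("green", "red"): [("yellow", "red")],
    ("yellow", "red"): [("red", "red")],
    ("red", "green"): [("red", "yellow")],
    ("red", "yellow"): [("red", "red")],
}

def reachable_states(initial, transitions):
    """Breadth-first exploration of every state reachable from the initial one."""
    seen = {initial}
    queue = deque([initial])
    while queue:
        state = queue.popleft()
        for nxt in transitions.get(state, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen

def check_safety(initial, transitions, is_unsafe):
    """Return (True, None) if no reachable state is unsafe, else (False, offending_state)."""
    for state in reachable_states(initial, transitions):
        if is_unsafe(state):
            return False, state
    return True, None

def both_green(state):
    # Safety property to rule out: both directions green at the same time.
    return state == ("green", "green")

print(check_safety(("red", "red"), TRANSITIONS, both_green))
```

Because the state space is finite, the search terminates, and the verdict covers every possible execution of the model rather than a sample of them.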

Natural Language Reasoning

Unlike the rule-based approach above, natural language reasoning deals with the ambiguity and uncertainty intrinsic to human languages.

It aims to handle real-world situations for a smoother user experience aligned with human cognition.

Some application areas include:

- Dialogue Systems: Bidirectional communication between humans and AI agents.

- Question Answering: Systems allowing users to query information.

- Recommendation Systems: Suggesting personalized content or products to users.

Through flexible analysis more attuned to users’ contextual needs, natural language reasoning facilitates intuitive interfaces and productive human-computer interaction.

The two categories represent different reasoning philosophies for AI: one maximizes mathematical precision, while the other supports organic human collaboration. Both hold immense value.

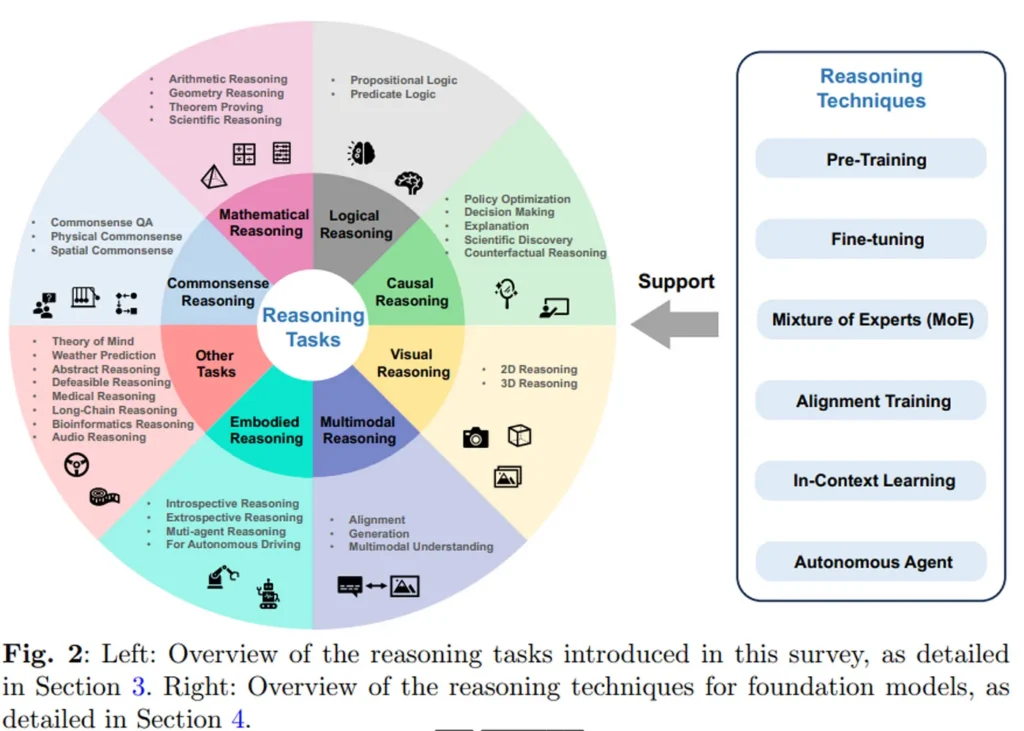

Reasoning Tasks

Commonsense Reasoning

This involves the understanding and application of intuitive knowledge about everyday situations that humans implicitly possess.

Relevant techniques include leveraging pre-trained language models and fine-tuning them with commonsense knowledge graphs. Benchmark datasets used for evaluation include abstractions of everyday situations requiring inference of unstated details.

Datasets: Social IQA, PIQA, COMMONSENSEQA

Challenges: Requires broader world knowledge and handling ambiguity in natural language

Mathematical Reasoning

Encompasses mathematical problem solving abilities including arithmetic, algebra, geometry, theorem proving and quantitative analysis.

Methods used involve reformulating word problems into executable programs. Benchmark datasets feature algebra questions, geometry challenges involving diagrams and math olympiad problems.

Datasets: MathQA, Dolphin18K, Geometry3K, AQuA

Challenges: Mathematical notation interpretation, quantitative analysis, multi-step logic
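The idea of reformulating a word problem into an executable program can be sketched as follows. The word problem, the `GENERATED_PROGRAM` string (a stand-in for what a model might emit) and the `run_program` helper are all assumptions made for illustration, not the survey's method.

```python
# Hypothetical word problem:
# "A shop sells pencils in packs of 3 for $1.20. How much do 10 pencils cost?"

# Stand-in for a model-generated program; here it is written by hand.
GENERATED_PROGRAM = """
pack_price_cents = 120
pencils_per_pack = 3
pencils_wanted = 10
answer = pack_price_cents * pencils_wanted / pencils_per_pack / 100
"""

def run_program(source):
    """Execute the program text and read back the variable named 'answer'.

    Note: exec() on untrusted model output is unsafe; a real system would sandbox it.
    """
    namespace = {}
    exec(source, {}, namespace)
    return namespace["answer"]

print(run_program(GENERATED_PROGRAM))
```

The appeal of this split is that the model only has to translate the problem into steps, while the arithmetic itself is delegated to an interpreter that does not make calculation slips.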

Logical Reasoning

Covers the process of making deductions through formal logic constructs like propositional and predicate logic.

Techniques include combining neural networks with symbolic logic reasoning. Relevant benchmarks test abilities in logical deduction, implication determination and validity assessment.

Datasets: ProofWriter, FOLIO, LogicalDeduction

Challenges: Faithfulness to formal logic, avoiding fallacies, sound deductions

Causal Reasoning

Involves identifying, understanding and explaining cause-effect relationships between events, actions or variables.

Approaches include causal graphs and integrating language model predictions into statistical discovery algorithms. Benchmarks cover domains like economics, healthcare and policy optimization.

Datasets: Tübingen Cause-Effect Pairs, Neuropathic Pain, CRASS

Challenges: Untangling confounding factors, inference beyond correlation, counterfactuals
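The confounding challenge can be illustrated with a small numeric sketch. The counts below are invented for illustration: a treatment looks harmful in the pooled data only because it was given mostly to severe cases. The adjustment shown (averaging within-stratum differences, weighted by stratum size) assumes severity is the only confounder.

```python
# Invented counts: stratum -> arm -> (recovered, total).
DATA = {
    "mild": {"treated": (18, 20), "untreated": (64, 80)},
    "severe": {"treated": (40, 80), "untreated": (8, 20)},
}

def crude_effect(data):
    """Difference in recovery rates when strata are pooled (ignores the confounder)."""
    totals = {"treated": [0, 0], "untreated": [0, 0]}
    for stratum in data.values():
        for arm, (recovered, n) in stratum.items():
            totals[arm][0] += recovered
            totals[arm][1] += n
    treated_rate = totals["treated"][0] / totals["treated"][1]
    untreated_rate = totals["untreated"][0] / totals["untreated"][1]
    return treated_rate - untreated_rate

def adjusted_effect(data):
    """Average of within-stratum differences, weighted by stratum size."""
    grand_total = sum(n for stratum in data.values() for _, n in stratum.values())
    effect = 0.0
    for stratum in data.values():
        size = sum(n for _, n in stratum.values())
        treated_rate = stratum["treated"][0] / stratum["treated"][1]
        untreated_rate = stratum["untreated"][0] / stratum["untreated"][1]
        effect += (size / grand_total) * (treated_rate - untreated_rate)
    return effect

print(round(crude_effect(DATA), 2), round(adjusted_effect(DATA), 2))
```

With these numbers the pooled comparison is negative while the stratified comparison is positive, which is the kind of gap between correlation and causal effect that the benchmarks above probe.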

Multimodal Reasoning

Reasoning across diverse data types like text, images, audio and video to enable enhanced understanding.

Areas include cross-modal alignment between inputs, multimodal question answering and generation applications.

Datasets: LAMM, LVLM-eHub, PASCAL-50S, ABSTRACT-50S

Challenges: Information alignment across modalities, heterogeneity, grounding

Visual Reasoning

Requires analyzing and manipulating visual inputs like images and video to draw inferences.

Tasks covered involve geometric analysis, 3D spatial processing, anatomical assessments, satellite image comprehension and more based on visual inputs.

Datasets: CLEVR, PTR, GQA, PARTIT

Challenges: Spatial/relational understanding, 3D reasoning, fine-grained details

Embodied Reasoning

Where AI agents equipped with perception, planning and interaction capabilities are deployed in environments requiring navigation, physical simulation and human collaboration.

Datasets: RoboTHOR, VirtualHome, BEHAVIOR-1K

Challenges: Interactive environments, long-time dependencies, sample efficiency

Defeasible Reasoning

- Conclusions can be overturned or revised based on new evidence or information.

- Allows handling uncertainty and incomplete knowledge.

- Datasets like δ-SNLI and δ-SOCIAL provide defeasible reasoning challenges.

Datasets: δ-SNLI, δ-SOCIAL, BoardgameQA

Challenges: Handling uncertainty and inconsistencies, revising beliefs
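A toy sketch of defeasible reasoning, using the classic "birds fly unless they are penguins" example. The rule table and its priority scheme are illustrative assumptions: a more specific rule is given a higher priority and overrides the default when new facts arrive.

```python
# (priority, facts required for the rule to apply, conclusion about "can fly")
RULES = [
    (1, {"bird"}, True),              # default: birds fly
    (2, {"bird", "penguin"}, False),  # exception: penguins do not
]

def can_fly(facts):
    """Return the conclusion of the highest-priority applicable rule, or None if none applies."""
    applicable = [(priority, conclusion)
                  for priority, required, conclusion in RULES
                  if required <= facts]  # subset test: all required facts are known
    if not applicable:
        return None
    return max(applicable, key=lambda item: item[0])[1]

belief_before = can_fly({"bird"})             # only the default applies
belief_after = can_fly({"bird", "penguin"})   # new evidence defeats the default

print(belief_before, belief_after)
```

The earlier conclusion is not contradicted so much as withdrawn: adding a fact changes which rule wins, which is the behavior classical deduction does not allow.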

Counterfactual Reasoning

- Considers hypothetical or alternative scenarios and their potential outcomes.

- Fundamental for causal understanding and decision making.

- CRASS benchmark tests counterfactual reasoning skills.

Datasets: CRASS

Challenges: Hypotheticals, causal implications, decisions from simulations

Theory of Mind (ToM) Reasoning

- Involves attributing mental states like beliefs, intents and emotions to others.

- Enables interpreting and predicting behaviors.

- Some researchers speculate that certain models may have developed ToM abilities.

Datasets: No specific dataset mentioned

Challenges: Interpreting complex social behaviors, false beliefs, representing others’ minds

Weather Forecasting Reasoning

- Predicting weather conditions and events using meteorological data.

- Leverages reasoning to identify patterns and make inferences.

- Advances like MetNet-2 and Pangu-Weather demonstrate this area.

Datasets: Custom meteorological data

Challenges: Spatial dynamics, chaos theory, probabilistic forecasting

Code Generation Reasoning

- Transforming natural language descriptions into executable code.

- Requires comprehending specifications and semantics.

- Datasets like APPS, HumanEval and MathQA-Python used.

Datasets: APPS, HumanEval, MathQA-Python

Challenges: Mapping specifications to executable logic, context switching
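Benchmarks in this style are commonly scored by functional correctness, meaning generated code is run against test cases. A minimal sketch of that check follows; the candidate strings, the `add` task and the `passes_tests` helper are hypothetical, and real harnesses run candidates in isolated sandboxes with timeouts.

```python
def passes_tests(candidate_source, function_name, test_cases):
    """Define the candidate function and check it against (args, expected) pairs.

    Any exception (syntax error, missing function, runtime error) counts as a failure.
    """
    namespace = {}
    try:
        exec(candidate_source, namespace)  # unsafe for untrusted code outside a sandbox
        func = namespace[function_name]
        return all(func(*args) == expected for args, expected in test_cases)
    except Exception:
        return False

# Two hypothetical model outputs for the task "return the sum of two numbers".
GOOD_CANDIDATE = "def add(a, b):\n    return a + b\n"
BAD_CANDIDATE = "def add(a, b):\n    return a - b\n"

TESTS = [((1, 2), 3), ((0, 0), 0), ((-1, 1), 0)]

print(passes_tests(GOOD_CANDIDATE, "add", TESTS))
print(passes_tests(BAD_CANDIDATE, "add", TESTS))
```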

Long-Chain Reasoning

- Connecting and processing complex information across extended sequences.

- Limited in earlier models, but capacities now improving with large models.

- Vital for tasks like planning, decision making and question answering.

Datasets: Various QA/text datasets

Challenges: Maintaining focus across long sequences, episodic memory, complexity

Foundation Model Techniques

The wide spectrum of reasoning abilities required from AI systems necessitates a diverse range of approaches for developing sophisticated reasoning capacities. The techniques explored for foundation models can be applied across the multifaceted landscape of reasoning tasks.

Pre-training forms the basis for acquiring reasoning skills by extracting extensive patterns from large datasets. For commonsense reasoning, pre-training on a blend of multimodal data sources provides the breadth of world knowledge. For mathematical or logical reasoning, exposure to substantial mathematical and logical data enables learning formal rules.

Fine-tuning allows adapting these learned patterns towards specialized reasoning tasks like visual reasoning which requires interpreting images or embodied reasoning where an agent reasons about environments. Fine-tuning on task-specific benchmarks transfers inductive biases.

In-context learning proves valuable where diversified reasoning is needed for different situations based on contextual cues. For example, generative tasks under multimodal reasoning can leverage demonstrations to output apt responses. Prompt engineering also stimulates causal or counterfactual reasoning by controlling hypotheticals.
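In practice, in-context learning often amounts to placing a few worked demonstrations ahead of the new query. The sketch below only builds the prompt text; the `Q:`/`A:` layout, the function name and the demonstrations are assumptions, and no particular model API is implied.

```python
def build_few_shot_prompt(demonstrations, query):
    """Concatenate (question, answer) demonstrations, then the new question with an open answer slot."""
    parts = [f"Q: {question}\nA: {answer}" for question, answer in demonstrations]
    parts.append(f"Q: {query}\nA:")
    return "\n\n".join(parts)

# Hypothetical commonsense demonstrations.
DEMOS = [
    ("If you drop a glass on a tile floor, what is likely to happen?", "It will probably break."),
    ("If you leave ice cream in the sun, what is likely to happen?", "It will melt."),
]

prompt = build_few_shot_prompt(DEMOS, "If you forget to water a plant for a month, what is likely to happen?")
print(prompt)
```

The model's weights are unchanged; only the context differs, which is why the choice and ordering of demonstrations can matter so much.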

Mixture-of-experts boosts reasoning versatility by integrating modules focused on distinct reasoning types. Separate experts could handle mathematical versus textual reasoning. This modularization also aids embodied reasoning which combines visual, linguistic and spatial reasoning.
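The routing idea can be sketched at a very coarse level. In real mixture-of-experts models the gate is learned and typically routes tokens inside the network; here the features, the hand-set `GATE_WEIGHTS` and the two stub experts are assumptions chosen only to show how a softmax gate picks an expert.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def featurize(query):
    """Toy features: share of digit/operator characters vs. share of letters."""
    symbols = sum(ch.isdigit() or ch in "+-*/=" for ch in query)
    letters = sum(ch.isalpha() for ch in query)
    total = max(symbols + letters, 1)
    return [symbols / total, letters / total]

# Stub experts; real experts would be sub-networks or specialized models.
EXPERTS = {
    "math": lambda query: f"[math expert] {query}",
    "text": lambda query: f"[text expert] {query}",
}

# Hand-set gate weights (assumption); in practice these are learned.
GATE_WEIGHTS = {"math": [2.0, -1.0], "text": [-1.0, 2.0]}

def route(features, top_k=1):
    """Return the top_k (expert_name, weight) pairs, with weights renormalized to sum to 1."""
    names = list(EXPERTS)
    scores = [sum(w * f for w, f in zip(GATE_WEIGHTS[name], features)) for name in names]
    ranked = sorted(zip(names, softmax(scores)), key=lambda item: item[1], reverse=True)[:top_k]
    norm = sum(weight for _, weight in ranked)
    return [(name, weight / norm) for name, weight in ranked]

def answer(query):
    """Send the query to the single highest-weighted expert."""
    expert_name, _ = route(featurize(query), top_k=1)[0]
    return EXPERTS[expert_name](query)

print(answer("12 * 7 + 3 = ?"))
print(answer("Why is the sky blue?"))
```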

Alignment training importantly shapes all types of reasoning towards human preferences through feedback. Evaluating social conventions for commonsense reasoning or safety for agents requires reflecting human judgments.

In-Context Learning: Enhancing with Knowledge Graphs

Knowledge graphs emerge as a pivotal tool for amplifying reasoning capabilities by providing structured world knowledge to complement statistical learning. These graphs comprise entities representing real-world concepts, connected by semantic relationships. Their inherent capacity to capture relational knowledge between abstract concepts in machine-readable form lends them well to augmenting reasoning.

Incorporating knowledge graphs furnishes numerous advantages for multi-faceted reasoning across AI systems:

- They aid commonsense reasoning by supplying extensive everyday facts and concepts models may lack exposure to, addressing knowledge gaps.

- The structured representations allow models to parse complex contextual relationships more effectively through graph analysis algorithms. This benefits causal, logical and explanatory reasoning.

- The graph linkage between disparate entities facilitates connecting insights across documents or data silos. This proves valuable for long-chain reasoning.

- Multimodal knowledge graphs fuse varied data types like text, images and audio providing a substrate for enhanced multimedia reasoning.

- Temporal graphs with time-based concepts aid prediction through learning dynamics. Dynamic knowledge graphs that aggregate emerging information from sources like news and social media can therefore refine reasoning.

Research on injecting knowledge graphs into foundation models shows promising results. Techniques like pre-training on graphs with self-supervised objectives, followed by fine-tuning, are an active area. Graph neural networks that operate directly on graph-structured data can also be integrated. More broadly, the synergistic fusion of symbolic knowledge resources with statistical learning offers an exciting direction for reasoning advancements.
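One simple way to picture how a knowledge graph can support multi-hop reasoning is a triple store plus a path search, with the resulting path turned into text that could be placed in a model's context. The triples, function names and text format below are illustrative assumptions, not a specific system from the survey.

```python
from collections import deque

# A tiny knowledge graph as (head, relation, tail) triples.
TRIPLES = [
    ("Marie Curie", "born_in", "Warsaw"),
    ("Warsaw", "capital_of", "Poland"),
    ("Poland", "part_of", "Europe"),
    ("Marie Curie", "field", "Physics"),
]

def build_index(triples):
    """Map each head entity to its outgoing (relation, tail) edges."""
    index = {}
    for head, relation, tail in triples:
        index.setdefault(head, []).append((relation, tail))
    return index

def find_path(index, start, goal):
    """Breadth-first search returning the list of triples linking start to goal, or None."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for relation, tail in index.get(node, []):
            if tail not in visited:
                visited.add(tail)
                queue.append((tail, path + [(node, relation, tail)]))
    return None

def path_to_context(path):
    """Render a path as plain sentences that could be prepended to a prompt."""
    return " ".join(f"{h} {r.replace('_', ' ')} {t}." for h, r, t in path)

index = build_index(TRIPLES)
path = find_path(index, "Marie Curie", "Poland")
print(path_to_context(path))
```

Each hop in the returned path is an explicit, inspectable fact, which is part of what makes graph-backed reasoning easier to audit than purely statistical recall.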

Challenges and Future Directions

Despite the progress, open challenges remain around robustness, human alignment, efficiency, multilinguality and avoiding pitfalls like hallucination of information.

Understanding Model Limitations

While recent advancements with foundation models are promising, it is important to objectively acknowledge their current limitations in reasoning abilities. As complex neural network systems, their decision-making processes lack transparency and can manifest unanticipated behaviors. Models still face issues with generating fabricated information unsupported by evidence, known as hallucination, especially when reasoning about unfamiliar situations beyond their training data. Work is required to improve model calibration, enabling them to accurately convey confidence levels and predict their own errors. Robust testing protocols are vital for systematically evaluating reasoning capacity on diverse, novel examples while avoiding dataset biases. There remains significant scope for innovation around interpretability, robustness and calibration.
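Calibration can be quantified in several ways; one widely used measure is expected calibration error (ECE), which compares stated confidence with observed accuracy inside confidence bins. The sketch below is a plain-Python version under assumed inputs (a confidence in [0, 1] and a correctness flag per answer); the bin count and sample values are arbitrary.

```python
def expected_calibration_error(confidences, correct, n_bins=5):
    """Weighted average gap between mean confidence and accuracy across equal-width bins."""
    bins = [[] for _ in range(n_bins)]
    for conf, is_correct in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # confidence 1.0 goes in the last bin
        bins[idx].append((conf, is_correct))
    total = len(confidences)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_conf = sum(conf for conf, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        ece += (len(bucket) / total) * abs(avg_conf - accuracy)
    return ece

# Hypothetical model: always claims 90% confidence but is right 3 times out of 4.
overconfident = expected_calibration_error([0.9, 0.9, 0.9, 0.9], [True, True, True, False])
print(round(overconfident, 2))
```

A well-calibrated model would score close to zero; larger values indicate that stated confidence is a poor guide to whether an answer is right.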

Multi-faceted Evaluation

Quantifying progress requires standardized evaluations spanning different facets of reasoning, including deductive logic, causal analysis, spatial cognition, everyday reasoning, social aptitude and moral judgments. Current benchmarks have significant gaps, for instance lacking coverage of abstract reasoning. Metrics should also go beyond superficial responses to test in-depth, step-by-step reasoning. Diversified data, rigorous testing protocols and multi-dimensional metrics can sharpen insight into capabilities. User studies are also vital for deployed systems to gather feedback, enabling continuous adaptation.

Prioritizing Safe Development

With rapid scaling underway, it becomes pivotal to anticipate and control risks through techniques like technical audits, red teaming, monitoring for unintended behaviors and fail-safe mechanisms. Concerted efforts to incorporate ethical considerations throughout the development lifecycle, from data curation to deployment, rather than only at evaluation, can enhance trustworthiness. Overarching governance frameworks providing model oversight may prove beneficial for risk management as systems grow more autonomous.

Supporting Multilinguality

A heavy emphasis on English risks limiting reasoning’s universal value. Expanding language diversity in training datasets and benchmarks can make models more inclusive. Transfer learning and machine translation offer interim solutions but risk diluting cultural nuances. Native language processing should be the long-term priority.

Anthony Alcaraz


- The article explores the landscape of reasoning in AI, delving into its types, challenges, and future directions, highlighting the crucial role of knowledge graphs and diverse evaluation metrics.

- The author emphasizes the need for safe, ethical, and multilingual development in the evolving field of artificial intelligence.