Facebook connects you, your friends, and your community. In exchange, it serves you advertisements it believes will be of interest to you based on your activities on the platform. It’s a common, lucrative business model — and most of the time, users are happy with the trade.

Occasionally, however, there’s a public outcry, usually in response to a scandal over data misuse. Whenever that has happened, Meta CEO Mark Zuckerberg has defended the company’s policy, stating that years of internal research show that users overwhelmingly prefer relevant ads. But a few years ago, when Apple built stronger user controls over data sharing into iOS, most users chose to opt out of Facebook’s data collection. For Meta, this meant pivoting to other ways of serving relevant ads, and it also took a huge chunk out of the company’s stock value.

What happened? After all, Meta is known as a prestigious employer, having both a large research staff and a highly competitive hiring process. The internal research that Zuckerberg references has never been publicly shared, but it’s fair to assume it was done to a high standard of quality. Yet repeated findings in one context — internal research — failed to replicate in another, and this had huge consequences for the company.

As UX Researchers, our goal is to inform decisions with quality research. But even with the best of intentions, research sometimes fails. Depending on the severity of the failure, and how integral those findings were to company strategy, the consequences can be grave.

There are three major ways a UX research study can fail: the study wasn’t sound, the findings were wrong, or the findings don’t apply as widely as hoped. In this article, we’ll look closely at common mistakes under each of these categories and explore ways to address them.

The study wasn’t sound

In 2008, Walmart ran a customer survey. There was one clear takeaway: customers wanted less clutter in the stores.

The company went right to work, simplifying store layouts and presenting streamlined options. They cleared and slimmed the aisles, reduced displays, and minimized selection. After the company spent millions refurbishing stores, customer satisfaction soared, but there was a swift and dramatic loss in sales. By some estimates, it ended up costing Walmart almost $2 billion in potential revenue.

One analyst suspected that Walmart leadership was fishing for data to support a hypothesis they had: to compete with Target, with its sleek and sophisticated stores, Walmart needed to follow suit. And if a researcher is motivated to do so, it’s not hard to design a study that will guarantee a predetermined result.

Placing one’s thumb on the scale is one example of this first category of error: conducting a study that has fundamental flaws. This can manifest in other ways. You may have unclear objectives. You could have too few participants, or you may have recruited a biased sample. We can also introduce measurement error by asking flawed, inappropriate, or leading questions.

In a technical sense, we would describe all of these as problems related to internal validity. They compromise the integrity of the research.
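To make the "too few participants" risk concrete, here is a minimal sketch of a common back-of-the-envelope calculation: the chance that at least one participant runs into an issue, given how widespread the issue is. The function name and the 10% prevalence figure are illustrative assumptions, and the formula assumes participants are sampled independently from the population of interest, which a biased sample would violate.

```python
def chance_of_seeing_issue(prevalence: float, participants: int) -> float:
    """Probability that at least one of `participants` encounters an issue
    that affects a `prevalence` share of users (assumes independent sampling)."""
    if not 0.0 <= prevalence <= 1.0:
        raise ValueError("prevalence must be between 0 and 1")
    if participants < 0:
        raise ValueError("participants must be non-negative")
    # Each participant misses the issue with probability (1 - prevalence);
    # the study misses it entirely only if every participant misses it.
    return 1.0 - (1.0 - prevalence) ** participants


# Hypothetical example: an issue that affects 10% of users.
for n in (3, 5, 10, 20):
    print(f"{n:>2} participants: {chance_of_seeing_issue(0.10, n):.2f}")
```

Under these assumptions, a five-person study has well under even odds of surfacing a problem that affects one user in ten, so "we didn't see it" is weak evidence that the problem doesn't exist.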

Of the three major failures, this sin is the deadliest. It doesn’t matter how good your analysis or report is if the study is flawed from the beginning. And though unforeseen issues can compromise the later stages of a project, the researcher has the most control at this point and, therefore, the most responsibility.

Researchers need to develop both an intuitive feel and a clear process for nipping these mistakes in the bud. Formal instruction on experimental design and threats to internal validity can help.

There was a problem with the findings

During the 2008 and 2012 US presidential elections, Nate Silver accurately forecasted not just Obama’s victory, but how individual districts and states would vote. Historically, pollsters would look at one or a few recent polls. Silver’s probabilistic approach involved including as many polls as possible and weighting each by past accuracy and poll quality.

In 2016, however, Silver’s publication 538 gave Hillary Clinton a 71% chance of winning the presidency. They summarized their forecast saying, “Clinton is probably going to win, and she could win by a big margin.” When Trump won, Silver pointed out that events that are only 30% likely to happen will still happen 30% of the time. Unfortunately, many of 538’s readers misinterpreted the forecast, and Silver’s credibility suffered.

Communicating the bounds of one’s certainty is difficult — and the time to get it right is before the forecasted event.

Our work can also suffer from issues related to probability and uncertainty. And there are many ways to draw or convey an incorrect conclusion from an otherwise solid study. We might think we’ve found something that was never really there (a false positive), or we might fail to find something that we should have (a false negative, or miss). And when we describe a real finding, we might think it’s more or less important than it actually is.

When our takeaways don’t reflect the underlying reality, it leads stakeholders into bad decisions. Researchers must be vigilant in their analyses, challenging their findings and considering alternative explanations. And we should be careful to explain how certain or uncertain a finding is.
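One way to explain how certain a finding is: report a range alongside the headline number. The sketch below computes a Wilson score interval for a proportion, such as a task success rate. The function name and the "7 of 10 participants" figures are hypothetical, chosen only for illustration, and the method assumes each participant is an independent trial.

```python
import math
from typing import Tuple


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a proportion (z = 1.96 gives roughly 95% confidence)."""
    if trials <= 0 or not 0 <= successes <= trials:
        raise ValueError("need trials > 0 and 0 <= successes <= trials")
    p_hat = successes / trials
    denom = 1 + z ** 2 / trials
    center = (p_hat + z ** 2 / (2 * trials)) / denom
    half_width = (
        z * math.sqrt(p_hat * (1 - p_hat) / trials + z ** 2 / (4 * trials ** 2)) / denom
    )
    return max(0.0, center - half_width), min(1.0, center + half_width)


# Hypothetical study: 7 of 10 participants completed the task.
low, high = wilson_interval(7, 10)
print(f"Observed 70% success; plausible range {low:.0%} to {high:.0%}")
```

With these made-up numbers, the interval runs from roughly 40% to roughly 89%. Saying "70% of users succeed" and saying "somewhere between 40% and 89% of users succeed" lead stakeholders to very different levels of confidence, and only the second reflects what a ten-person study can support.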

The study and findings were sound, but only in their context

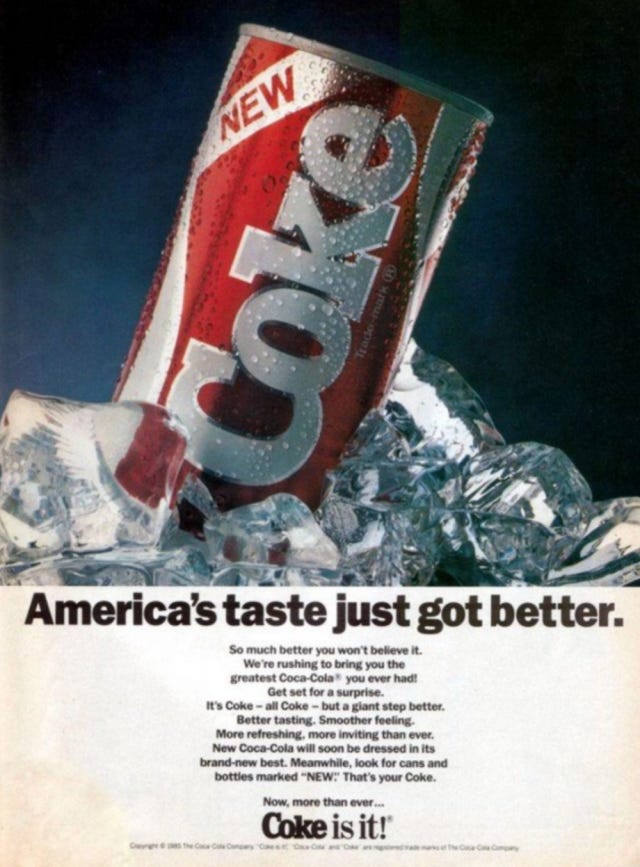

In the early 1980s, Coca-Cola was steadily losing market share to its sweeter competitor, Pepsi. To try to boost sales, the company decided to test a refreshed recipe for its flagship beverage. The results from extensive market research were conclusive: consumers had an overwhelming preference for the new, sweeter Coke. It seemed so obvious that the company should move forward with the refresh that some distributors even threatened to protest if it didn’t.

Upon release, the market reacted strongly to New Coke, but not in the way the company had anticipated. The outrage and boycotts were so intense that Coca-Cola quickly backpedaled, retracting the product and putting the original back on shelves.

Many have since speculated on how Coke’s research could have misled them so badly. Some point to the nature of the taste tests, where participants got what amounted to a single sip, rather than the full serving of a can or bottle. Others point to customer loyalty. New Coke was released as a wholesale replacement of the original recipe rather than another option, when customers had built up nearly a century of brand associations.

In either case, what was true in the laboratory didn’t remain true in the real world.

UX research studies and their findings can be sound in themselves, but if they don’t carry over into the context we’re interested in — that of our actual users — our work has little value. Just about any element of our study could differ in a meaningful way from real life: its timing, the way we framed our questions and tasks, our participants’ goals and motivations, the presence of a researcher-observer, or the experience itself.

When findings fail to generalize, the problem is rooted in the study’s external validity. Researchers must carefully consider the context of their studies and replicate the conditions they are interested in as much as possible. And when we’re reporting our findings, we must consider the possible contexts in which they may not remain true.

Conclusion and summary

There are many ways a UX research study can go wrong, but the root cause of the failure rolls up into one of three major categories:

- Problems related to the research plan and design. A study with unclear or biased objectives or measurements is flawed from the beginning. Here, the researcher has the most control and responsibility.

- Problems of analysis and communication. We can fail to find something important or report the illusion of a finding that wasn’t truly there. We can also misjudge the strength of a finding and mislead others in how we present it.

- Problems of generalization. The research design and analysis may be technically flawless, but the findings may never occur outside of our study context. In that case, the study fails to tell us anything meaningful about our actual users.

In a technical sense, these relate to internal validity, probability and uncertainty, and external validity, respectively. Whenever and however a UX research study fails, it can undermine our credibility and harm our organizations.

To prevent these problems, UX Researchers should consider them during each phase of the project and use systems (like checklists and getting feedback) to keep them in check. If a past study has suffered from one of these problems, diagnosing it can help you decide whether to toss or redo it.

This article was originally published on The ¼″ Hole.