
Advances in artificial intelligence could deepen our understanding of research findings by supporting metacognitive reflection. This piece discusses how AI can not only accelerate the research process by identifying patterns and clustering insights but also prompt deeper thought about overlooked or underappreciated findings.

One of the best parts of building experiments in public is how ideas evolve through other people's input. When we recently shared a video of how AI can support metacognition in the classroom, someone reached out to tell us that it might also be relevant to UX research.

This might have been obvious to outsiders, but we were so knee-deep in thinking about classroom interactions that we missed it. Taking a step back, it makes a lot of sense.

In any research, metacognition (thinking about your own thinking) has always been crucial for ensuring your outputs are well considered and as free of bias as possible. In design, this capacity is what enables designers to course-correct when they lean too heavily on their own worldviews. It's a critical skill for creating user-centered designs. With advances in AI, there's growing potential to combine AI's ability to process vast amounts of qualitative data with metacognitive reflection. However, these possibilities deserve a thoughtful approach.

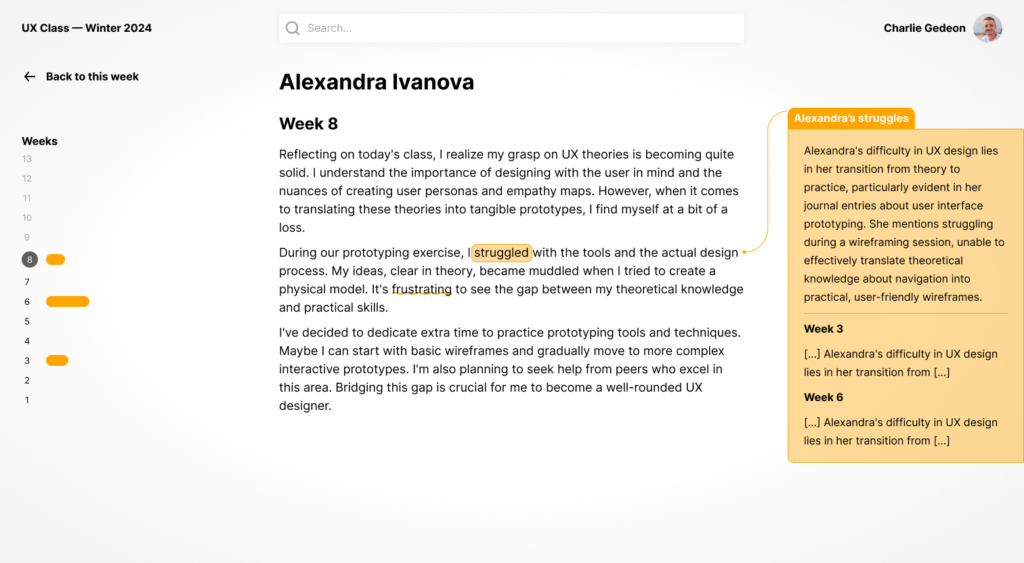

Dovetail, one of our favorite UX research tools, has shared its vision of AI, emphasizing AI's capacity to accelerate research by clustering insights and automating theme detection. This efficiency could shorten the time it takes research to demonstrate its value, potentially increasing companies' willingness to invest in UX. However, this speed comes with the risk of bias or missed insights. While Dovetail clearly states the value of human oversight, we were curious about what that oversight could look like in practice and how it would behave.

We see AI's role in UX research as going beyond acceleration: it has the potential to help researchers think more deeply, not only by identifying patterns but also by prompting reflection on missed or underappreciated insights.

The role of reflection in UX

Reflection is crucial for understanding why a UX researcher reaches certain conclusions. While AI can detect patterns in feedback, simply adding disclaimers about its potential inaccuracies isn't enough to prompt reflection. We need interfaces that actively encourage researchers to think critically about their own decisions and recommendations.

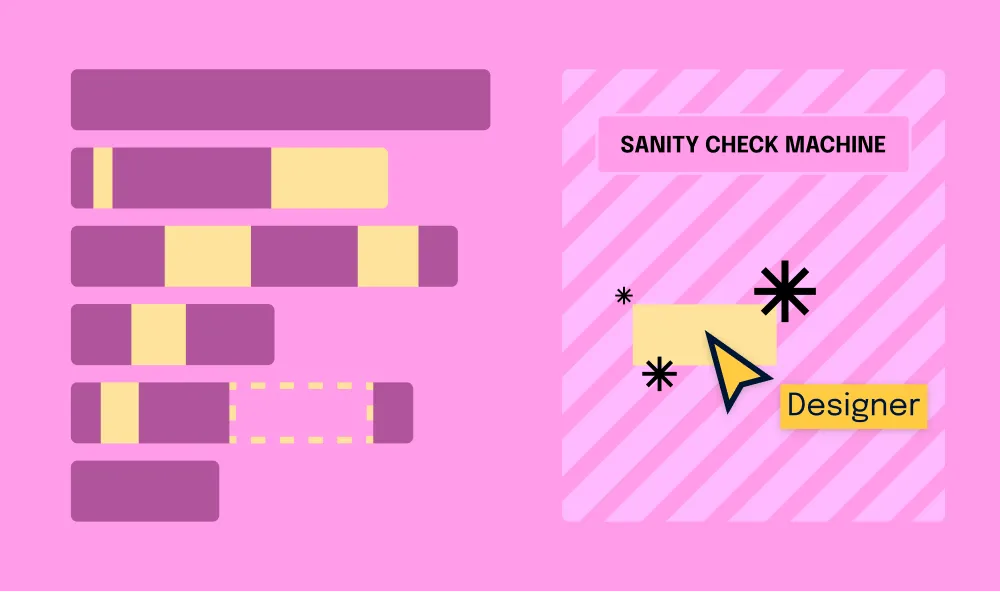

In the video above, which looks at an interface for learning, we explored how targeted reflection surveys help students challenge their assumptions. What if UX tools offered similar reflection checkpoints, acting as a "sanity check" for researchers before they finalize their recommendations?

Integrating reflection tools directly into AI-powered interfaces would prompt researchers to question their assumptions and refine their thinking before moving forward.
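To make the checkpoint idea more concrete, here is a minimal Python sketch of what such a gate might look like. Everything in it is hypothetical: the `Theme` and `ReflectionCheckpoint` names, the 20% participant threshold, and the wording of the prompts are our own assumptions for illustration, not features of Dovetail or any existing tool. The checkpoint generates reflection questions from the themes a researcher is about to report and withholds "finalize" until each question has a written response.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Theme:
    """A theme proposed during synthesis (hypothetical structure, not a real tool's API)."""
    name: str
    participant_ids: set[str]
    ai_generated: bool
    reviewed_by_human: bool = False


@dataclass
class ReflectionCheckpoint:
    """Hypothetical gate: a report can only be finalized once every reflection prompt is answered."""
    total_participants: int
    min_share: float = 0.2  # assumption: flag themes backed by under 20% of participants
    answers: dict[str, str] = field(default_factory=dict)

    def __post_init__(self) -> None:
        if self.total_participants <= 0:
            raise ValueError("total_participants must be positive")

    def prompts(self, themes: list[Theme]) -> list[str]:
        """Generate questions that nudge the researcher to examine their own conclusions."""
        questions = []
        for theme in themes:
            count = len(theme.participant_ids)
            # Thinly supported themes may reflect one memorable session more than a pattern.
            if count / self.total_participants < self.min_share:
                questions.append(
                    f"'{theme.name}' rests on {count} of {self.total_participants} participants. "
                    "Is it representative, or did one vivid session shape it?"
                )
            # AI-suggested themes should be checked against the raw data by a person.
            if theme.ai_generated and not theme.reviewed_by_human:
                questions.append(
                    f"'{theme.name}' was suggested by the AI. "
                    "Have you checked it against the original transcripts?"
                )
        # One prompt always applies: a check on the researcher's own expectations.
        questions.append(
            "Which finding matched your expectations most closely, and why might that be?"
        )
        return questions

    def answer(self, question: str, response: str) -> None:
        """Record the researcher's written reflection for one prompt."""
        self.answers[question] = response.strip()

    def can_finalize(self, themes: list[Theme]) -> bool:
        """The tool does not grade answers; it only requires that each prompt was addressed."""
        return all(self.answers.get(q) for q in self.prompts(themes))


# Example usage with made-up themes and participant IDs.
themes = [
    Theme("Onboarding feels confusing", {"p1", "p2", "p3", "p4"}, ai_generated=True, reviewed_by_human=True),
    Theme("Users want a dark mode", {"p7"}, ai_generated=True),
]
checkpoint = ReflectionCheckpoint(total_participants=10)
for prompt in checkpoint.prompts(themes):
    print("-", prompt)
print("Ready to finalize:", checkpoint.can_finalize(themes))
```

In this sketch, the second theme triggers two prompts (thin support and no human review), and the expectations prompt always appears. The deliberate design choice is that the checkpoint never judges the quality of an answer; it only asks. That keeps the final interpretation with the researcher, which is the kind of oversight we have in mind.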

AI as a reflective partner

We know that AI can detect patterns and suggest themes that researchers might overlook. For example, it can identify sentiment trends or recurring pain points across user groups. But AI, while fast, has its own limitations, such as biases introduced through its training data. That's where the partnership between human researchers and AI becomes crucial. In another experiment, we show how a combination of tagging and connections can become a kind of "Inspector" for words, similar to the inspector that web developers use in their browser's developer tools.

The AI would explain its reasoning but defer to human judgment, which remains essential for interpreting deeper context. The goal is to keep the analysis grounded in the real-world experiences of users.

AI and metacognitive growth in UX research

While AI holds promise for UX research, we remain cautiously optimistic. AI can help researchers identify overlooked themes and encourage reflective thinking, but human insight is irreplaceable in understanding the deeper meaning behind user feedback. As with the "big data" hype before it, we shouldn't delude ourselves about the objectivity of what machines give us. Jer Thorp says it best in his post "You Say Data, I Say System":

> Any path through a data system starts with collection. As data are artifacts of measurement, they are beholden to the processes by which we measure. This means that by the time you look at your .CSV or your .JSON feed or your Excel graph, it has already been molded by the methodologies, constraints, and omissions of the act of collection and recording.

Simply by measuring data, we transform it in imperceptible ways. What excites us is a new category of interfaces for analyzing qualitative data with far greater clarity. There may be a way to make explicit the subtle transformations that happen when we collect qualitative information. We don't think any analysis tool will ever be perfect or free of bias, but we do believe there's a fresh opportunity to deepen our understanding of that which can't be counted.

The article originally appeared on Pragmatics Studio.

Featured image courtesy: Allan MacDonald.

Charlie Gedeon

Charlie Gedeon is a designer and educator focused on how technology shapes understanding, learning, and collaboration. He sees design as a way to think together and challenge what we take for granted. Charlie creates experiences that reward curiosity and invite reflection, driven by the belief that better tools help us ask better questions. He shares his thoughts on his blog and YouTube channel.

- The article talks about how AI can speed up data analysis and encourage people to think more deeply about biases and missed insights, which can improve the quality of user-centered design.

- It argues that AI-powered UX research tools should include reflection checkpoints that let researchers critically assess their assumptions and conclusions.

- The piece highlights how AI's pattern recognition and human judgment can work together to keep research outcomes meaningful and grounded in context.