After months of intense ‘what can AI do for you’ face-to-face discussions, op-ed think pieces, and podcasts, I want to ask the opposite question: what can’t AI do? Some people will enthusiastically say “Nothing. It’s the most disruptive and transformative advancement in technology. It is changing, and will continue to change, the world and how we interact with the digital space.”

And the research reports with supporting statistics predict a highly AI’d future.

- In 2018, McKinsey Global Institute released a Future of Work report that estimated that 400 million people worldwide could be displaced by AI systems, tools, and platforms by 2030.

- In March 2023, Goldman Sachs released its global economies impact report suggesting that “generative AI could substitute up to one-fourth of current work”.

- Earlier this month, the World Economic Forum released its Future of Jobs report, which concurred with Goldman Sachs’ prediction and shared specific numbers on the impact of automation on jobs. The job outlook estimate is quite sobering: 83 million job roles will evaporate and only 69 million job roles will be created. That’s a net loss of 14 million job roles.

The fear of job replacement and/or the inability to secure a living-wage job is running very high. People are scared that they will be automated right out of a career, a job, and the means to support the lifestyle they want. There’s even a list of jobs (in tech, media, law, etc.) that are first on the chopping block to disappear due to AI. And the irritating part of each of these reports is the lack of a counterposition, such as suggestions for AI-proofing your career.

Instead of focusing on the projected adoption of AI in every facet of our lives, consider homing in on what is unAI-able. UnAI-able describes the actions, tasks, and skills that can’t be digitized or automated. These routines require humans to constantly be in the loop to make key and pivotal decisions. I’ve identified three categories of human-driven decision-making competencies that every sector and industry currently needs and will require for the foreseeable future.

Contextual Awareness. Context isn’t a monolith. The context under human consideration likely includes more than one of the following types: cultural, economic, emotional, historical, location, political, situational, and social. And there’s typically a through-line that cuts across these different contexts. The economic perspective can’t be separated from the social and/or political lens. Treating these contexts as independent and identically distributed elements isn’t real life, no matter how many well-known AI researchers try to construct a representative mathematical equation with elaborate experiments and published outcomes. Images like the one below are shared online, and it takes people’s historical, location, and political awareness to recognize that they were manufactured and fabricated to elicit a certain response from the public.

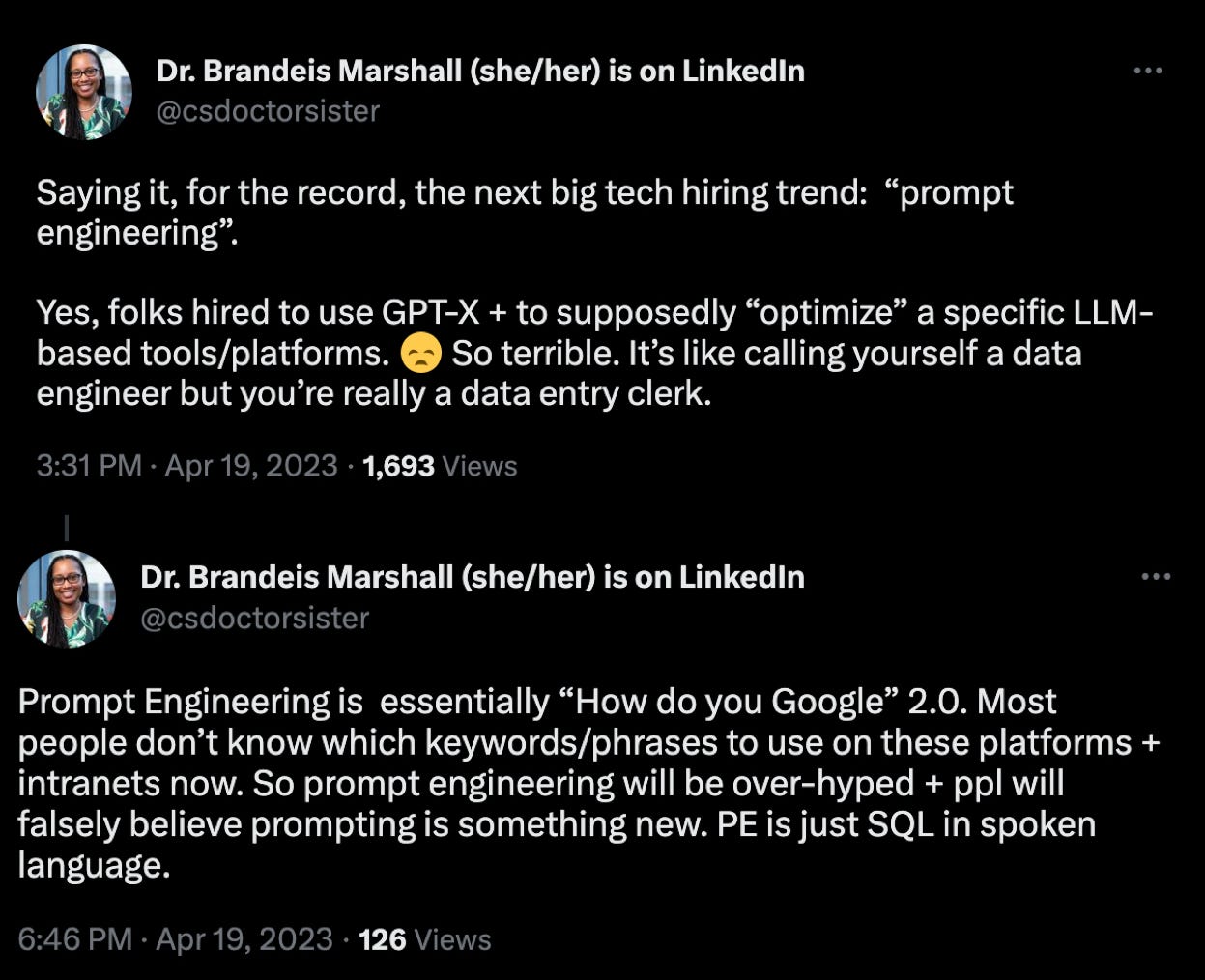

Conflict Resolution. AI can’t handle friction. In fact, it chooses a side, and it’s the most unflattering side. In 2016, Tay the AI chatbot spouted racist, misogynistic, and otherwise bigoted tweets for less than one day before it was shut down. Fast forward to March 2023: Snapchat’s AI chatbot, which is built on ChatGPT, gave inappropriate advice to an adult who claimed to be a minor. AI doesn’t know when to shut up or not answer. AI is programmed to provide a response. The quality, appropriateness, and validity of that response remain questionable. Dealing with conundrums is a blatant weak area for AI systems, tools, and platforms. AI can potentially provide humans with options, but it can’t really help us make the informed choice.

Critical Thinking. Our human ability to assess and evaluate circumstances is one of our distinguishing traits. Critical thinking skills combine problem-solving, curiosity, creativity, inference, and strategy. AI can’t do any one of these critical thinking elements well. For instance, computer programming, aka coding, does solve mathematical problems. But once any element in a mathematical equation becomes a proxy for people, the problem-solving-through-coding approach breaks, because at least one demographic group is excluded and oppressed as a result. In another example, AI art has been used to claim that AI is creative. But there are a number of persnickety copyright infringement disputes that raise concerns over the legal (and ethical) use of content scraped from online repositories. AI reaches this cement ceiling because reasoning and judgments are made by balancing lived experience, expertise, and skills. AI has no lived experience and only limited expertise, as dictated by training/testing datasets and programmable skills. The rest is covered by people as they exercise their critical thinking skills.

AI can’t provide contextual awareness, resolve conflicts, or think critically. If you enter a career or perform skills that require some blend of these three functions, then you’ll have a livelihood-making path. Most likely, your job duties will pivot, not disappear, so that you’ll be diagnosing the impact of multiple contexts, managing tensions, and thinking critically. For example, for coders (with computer science degrees or not), there’ll be a greater need for us to explain and interpret what generative AI is actually doing. You won’t be able to be a software developer without being conscious of the potential societal impacts of the lines of code you write. In essence, it’ll be very important that we all understand the nuances of the strengths and weaknesses of our digitally based communication skills within our primary discipline.

This article was originally published on Medium.