Fascinating advancements in the field of Artificial Intelligence (AI) never cease to amaze us. Yet, as intelligent as they are, AI tools do make things up from time to time. Chances are, you have already encountered what's known as AI hallucinations: a phenomenon in which a large language model (LLM), such as a generative AI chatbot, presents false or fabricated information as fact, even though it lacks the knowledge to answer with certainty. In other words, the AI can say nonsensical things while giving the user the impression that it knows what it's talking about.

AI’s quirky side has real-life implications

AI hallucinations can take many forms. In some cases, the AI produces a sentence that contradicts the previous one; in others, it makes factual errors, presenting false information as authentic. It may make simple calculation errors, or it may even fabricate sources. Have you ever clicked on one of those convincing links presented as a source, only to find that it doesn't exist? Some of these mishaps are easy to spot; others, not so much.

Hallucinations can stem from many causes, from insufficient training data to a lack of context in the user's prompt, and their consequences can range from the spread of misinformation to serious harm such as medical misdiagnosis. Researchers have found that LLM hallucinations can even be exploited by bad actors to distribute malicious code packages to unsuspecting software developers. Clearly, it's an important problem to tackle.

Where does design fit in here?

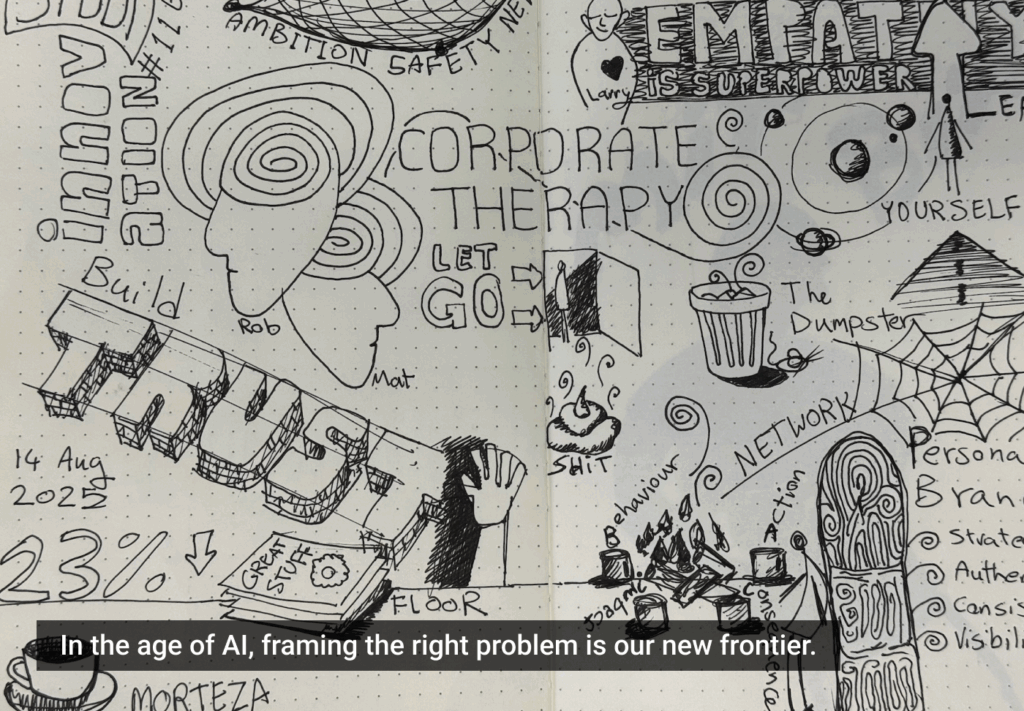

While many proposed solutions for preventing hallucinations point to high-quality training data, data templates, or prompt engineering, design can do a lot to help users decide how much trust to place in AI. Specifically, design can visually show how certain the AI is about the information it provides: its confidence level, if you will. This includes clarifying whether the AI is drawing on provided data or merely attempting an answer without certainty, which reduces the risk of users taking false information at face value.

In this context, let's think of design not as a way to prevent AI hallucinations but as something that gives users considerable control over what they take away from their interactions with AI: a tangible signal of reliability. Moreover, good design, being intuitive and resourceful, provides a much-needed human touch as digital interactions become increasingly algorithmic and machine-like.

How can design help with the hallucination problem?

The power of design is such that a single symbol can speak a thousand words; you just have to use it wisely. One may wonder how exactly design can improve our interactions with AI-powered tools, and how it can help with AI hallucinations in particular. A few examples illustrate the idea best.

Every time we enter a prompt into a generative AI system like ChatGPT, nothing in the interface reassures us that the results are valid or appropriate. This is especially true when we venture into unfamiliar territory, where our lack of expertise makes errors harder to catch. Many questions can cloud our understanding: How accurate is this data? Can I trust it? Is it based on verified sources? Is the AI making this up? According to a study by Tidio, almost one-third (32%) of users spot AI hallucinations by relying on their instincts, while 57% cross-reference with other resources. Isn't it ironic that we turn to the system for information and then have to verify that its answer makes sense? That's double the effort, which defeats the purpose.

Now, imagine if every response ChatGPT generated came with a progress bar or meter that visually conveyed the AI's confidence level: a full meter for high confidence, a partially filled one for lower confidence, and so on. A cue like this lets users gauge the certainty of a response at a glance. It is only one of many ways design can convey the AI's confidence level to the user.
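As a rough illustration of the meter idea, here is a minimal Python sketch that renders a confidence score as a text progress bar. It assumes the AI system can supply a confidence score between 0 and 1; producing a well-calibrated score is a hard problem in its own right and is not shown here. The function name `confidence_meter` and the default bar width are hypothetical choices for this example.

```python
def confidence_meter(confidence: float, width: int = 10) -> str:
    """Render a hypothetical confidence score in [0, 1] as a text meter.

    The score itself is an assumption: this sketch only visualizes it.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    # Number of filled cells, proportional to the confidence score.
    filled = round(confidence * width)
    bar = "#" * filled + "-" * (width - filled)
    # Show the bar alongside the score as a percentage.
    return f"[{bar}] {confidence:.0%}"
```

For example, `print(confidence_meter(0.8))` prints `[########--] 80%`. In a real interface the same proportion would drive a graphical bar rather than characters, but the mapping from score to fill level is the same.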

Icons or symbols can also represent different levels of confidence. A checkmark, for instance, could indicate high confidence, whereas a cross mark could signal low confidence. Caution or warning symbols could alert users to treat the information with care: the AI could be hallucinating, and now you know.

Color is another visual design tool that offers an intuitive way for users to assess the reliability of the AI's responses. Think of how we associate green with progress and positive outcomes, and how red demands our attention. For the in-between, there are colors like yellow and orange. In short, color coding conveys crucial information efficiently without saying much.
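To make the icon and color ideas concrete, the following minimal Python sketch maps a confidence score to a combined cue. As before, it assumes the AI supplies a score between 0 and 1. The band thresholds, the `ConfidenceCue` class, and the `confidence_cue` function are hypothetical and chosen purely for illustration; a real product would tune the cut-offs against evaluation data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfidenceCue:
    """Visual cue shown next to an AI response (hypothetical structure)."""
    label: str
    icon: str
    color: str


# Hypothetical confidence bands, checked from the highest threshold down.
# The cut-off values are illustrative assumptions, not established standards.
CONFIDENCE_BANDS = [
    (0.8, ConfidenceCue("high", "\u2714", "green")),     # check mark
    (0.5, ConfidenceCue("medium", "\u26a0", "yellow")),  # warning sign
    (0.3, ConfidenceCue("low", "\u26a0", "orange")),     # warning sign
    (0.0, ConfidenceCue("very low", "\u2716", "red")),   # cross mark
]


def confidence_cue(confidence: float) -> ConfidenceCue:
    """Map a hypothetical confidence score in [0, 1] to an icon and a color."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    for threshold, cue in CONFIDENCE_BANDS:
        if confidence >= threshold:
            return cue
    # Not reached: the last band starts at 0.0, so every valid score matches.
    return CONFIDENCE_BANDS[-1][1]
```

For example, `confidence_cue(0.6)` returns the medium cue with a warning sign and yellow. Pairing each color with an icon and a text label also means the cue does not rely on color alone, which matters for users with color vision deficiencies.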

The potential of design in tackling AI-related challenges

AI-related threats are often overstated: the technology itself is not the primary issue; human intent and actions create the bigger challenges. Artificial Intelligence is, after all, a tool developed and used by human beings, fundamentally to boost productivity and efficiency. Its applications are products of human intent and intellect. Now, consider the undeniable influence of design on our everyday lives: the way we feel, think, and make decisions. As road users, we take cues from traffic signals; as customers, we derive meaning from the packaging of products; the list goes on and on. As Paul Rand aptly put it, "Everything is design. Everything!" Design is an inextricable part of our lives, and we should not underestimate its potential to guide human actions in our interactions with advanced technologies, and AI is no exception.