
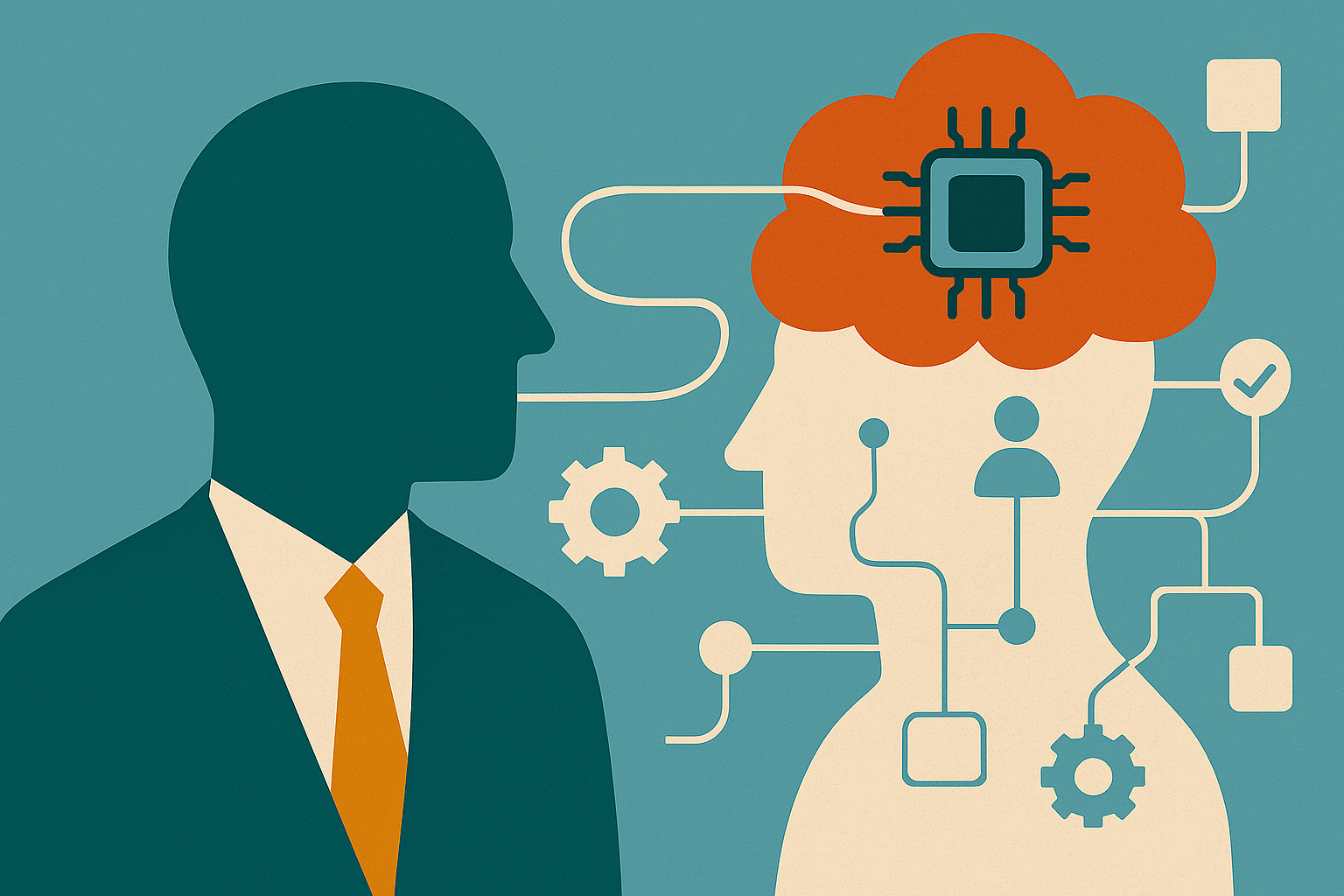

The term OAGI (Organizationally-Aligned General Intelligence) was introduced by Robb Wilson, founder of OneReach.ai and co-author of Age of Invisible Machines. It represents a critical evolution in how enterprises think about AI: not as something general and abstract, but as something organizationally embedded, orchestrated, and deeply aligned with your company’s people, processes, and systems.

OAGI is a recurring theme on the Invisible Machines podcast and throughout the thought leadership featured in UX Magazine, where the focus is on turning automation into collaboration between people and AI.

1. You Don’t Need AGI, You Need OAGI

If you’re a product leader in a large company, you already know the pain of complexity: disconnected systems, slow workflows, overlapping tools, and governance hurdles. “AGI” may promise human-level intelligence—but you don’t need artificial philosophers. You need artificial teammates who understand your org’s DNA.

That’s what OAGI offers: AI that’s designed from the ground up to work with your existing systems, data, policies, and people.

2. Why It’s the Next Frontier for Product Owners

Domain alignment. OAGI doesn’t try to figure out your org from scratch—it’s built using your own data, processes, and internal logic. That means higher trust, fewer surprises, and smoother compliance.

Orchestration at scale. Your product teams already juggle APIs, tools, UX flows, and services. OAGI provides a centralized intelligence layer that coordinates across automations, agents, and conversational interfaces.

Actionable autonomy. Instead of static workflows or brittle bots, OAGI enables intelligent agents that learn, adapt, and act—freeing product owners to focus on outcomes, not integrations.

3. What Product Owners Should Prioritize Now

- Map your internal intelligence fabric. Understand your org’s people, processes, tools, goals, and workflows. This becomes the foundational “knowledge scaffold” for OAGI.

- Adopt orchestration platforms built for enterprise AI agents. Look for auditability, security, governance, and versioning. This is where platforms like OneReach.ai stand out.

- Pilot high-leverage use cases. Start with candidates such as HR approvals, customer support triage, or DevOps alert handling. Prove ROI early.

- Plan for evolvability. OAGI is not a one-and-done install. You’ll iterate continuously—refining knowledge graphs, updating models, and evolving capabilities.

4. OAGI vs AGI: Control, Risk, and Value

- Control. AGI is broad and unpredictable. OAGI stays within the guardrails of your business design.

- Risk. Enterprises need auditability and compliance. OAGI allows you to retain visibility and governance.

- Value realization. OAGI can deliver measurable productivity and cost savings now, while AGI remains speculative.

5. How to Engage Stakeholders

- Executives: Frame OAGI as incremental, safe automation with fast ROI—reducing cycle times, error rates, and support costs.

- Tech/IT: Emphasize enterprise-grade orchestration frameworks, audit trails, version control, and access governance.

- Line-of-business teams: Showcase how OAGI-powered interfaces reduce complexity and deliver faster results via natural-language interactions.

OAGI Is How You Win the AI Transition

The leap from isolated automations to intelligent orchestration is already underway. Product owners who embrace OAGI aren’t just improving operations—they’re redefining how their organizations work. As Robb Wilson puts it in Age of Invisible Machines, “The future isn’t about replacing humans with AI. It’s about creating systems where both can thrive.”

The question isn’t whether your company will adopt AI. It’s whether you’ll lead the shift to AI that’s purpose-built for your organization.

UX Magazine Staff

UX Magazine was created to be a central, one-stop resource for everything related to user experience. Our primary goal is to provide a steady stream of current, informative, and credible information about UX and related fields to enhance the professional and creative lives of UX practitioners and those exploring the field. Our content is driven and created by an impressive roster of experienced professionals who work in all areas of UX and cover the field from diverse angles and perspectives.