What Is Figproxy?

Figproxy is a utility that allows bidirectional communication between Figma and physical hardware for prototyping interactions that involve screens and physical elements like motors, lights and sensors. It’s designed to talk to hardware prototyping platforms like Arduino.

Some potential use cases include:

- Kiosks – Soda Machines, Jukeboxes, Movie Ticket Printers, ATMs

- Vehicle UI – Control lights, radio, seats etc.

- Museum Exhibits – Make a button or action that changes what is on the screen

- Home Automation – Prototype a UI to trigger lights, locks, shades etc., and make it actually work

- Hardware “Sketching” – Quickly test out functionality with a physical controller and digital twin before building a more complicated physical prototype

- Games – Make a physical spinner or gameplay element that talks to a Figma game

It’s especially useful if your UX designers already work in Figma and you want to quickly connect a design to hardware.

It’s also valuable if you want to get a prototype working in a matter of hours, not days. It’s intended to be used when building-to-think right after a brainstorm sketch, before you spend a lot of time refining the design.

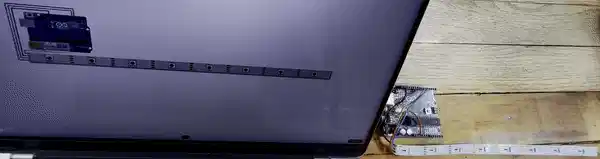

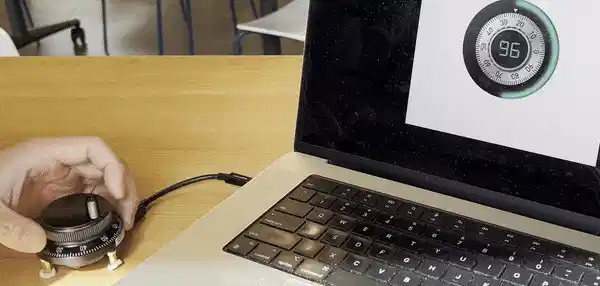

As a demonstration, I have supplied a couple of examples in the GitHub repo. In one, a Figma prototype lets you choose the colors that an LED strip lights up. In the other, a knob controls an on-screen representation in real time.

How It Works

Figma does not support communication from a prototype to other software in its API. Because we can’t go the official route, Figproxy uses two different “hacks” to achieve communication.

Speaking Out (Figma → Arduino)

Note: I will be using “Arduino” as shorthand for any hardware that can speak over a serial connection. There are a lot of platforms that can communicate over serial, but Arduino is the most common in this space.

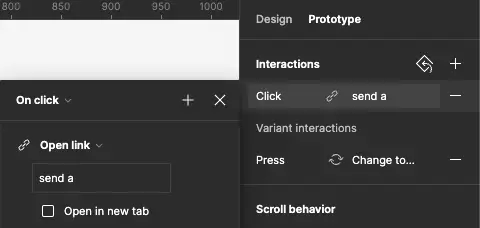

When you tell Figma to open a link, Figproxy inspects the link. If it starts with “send” (rather than, for instance, “http://”), Figproxy treats it as a message to be routed to the hardware.
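The routing rule above can be sketched in a few lines of C++. This is only an illustration of the idea, not Figproxy’s actual source: the function names `isHardwareLink` and `payloadOf` are hypothetical, and treating everything after “send” (minus any leading spaces) as the payload is an assumption about the link format.

```cpp
#include <string>

// Hypothetical helper: true when a link should be routed to hardware
// instead of being opened as a normal web page.
bool isHardwareLink(const std::string& link) {
    return link.compare(0, 4, "send") == 0;
}

// Hypothetical helper: the text to forward over the serial connection.
// ASSUMPTION: the payload is whatever follows "send", skipping leading spaces.
std::string payloadOf(const std::string& link) {
    if (!isHardwareLink(link)) return "";
    std::size_t start = link.find_first_not_of(' ', 4);
    return start == std::string::npos ? "" : link.substr(start);
}
```

Under these assumptions, a link such as `send a` would yield the payload `a`, while a link beginning with `http://` would be left alone.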

In Arduino, you can listen for a character and perform some action like this:

    if (Serial.available() > 0) {
      // get incoming byte:
      char incomingByte = Serial.read();
      // in Figma the "Turn LED On" button sends "a", "Turn LED Off" sends "b"
      if (incomingByte == 'a') {
        digitalWrite(LED_BUILTIN, HIGH);
      } else if (incomingByte == 'b') {
        digitalWrite(LED_BUILTIN, LOW);
      }
    }

If there is more complex data you need to send, you can send a string like “hello world!”:

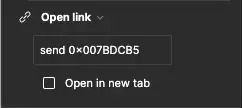
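On the Arduino side, a multi-character message has to be collected one byte at a time. Here is a minimal sketch of one way to do that, written as plain C++ so it can be dropped into an Arduino sketch. It assumes each message ends with a newline character, which is my assumption for illustration and not something Figproxy is confirmed to send. `MessageBuffer` and its 32-character limit are hypothetical choices.

```cpp
#include <cstddef>

// Hypothetical helper that collects incoming characters into a message.
// ASSUMPTION: messages are terminated by '\n'; adjust to match your setup.
class MessageBuffer {
public:
    // Feed one received character. Returns true when a full message is ready.
    bool feed(char c) {
        if (ready_) {                 // start fresh after a completed message
            length_ = 0;
            ready_ = false;
        }
        if (c == '\n') {
            text_[length_] = '\0';
            ready_ = true;
            return true;
        }
        if (length_ < kMaxLength) {   // drop characters that do not fit
            text_[length_++] = c;
        }
        return false;
    }

    // The most recently completed message (valid right after feed() returns true).
    const char* text() const { return text_; }

private:
    static constexpr std::size_t kMaxLength = 32;
    char text_[kMaxLength + 1] = {0};
    std::size_t length_ = 0;
    bool ready_ = false;
};
```

Inside `loop()`, you would call `feed(Serial.read())` whenever `Serial.available() > 0`, and act on `text()` each time `feed` returns true.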

You can even send hexadecimal characters by prefixing the string with “0x”.

Speaking In (Arduino → Figma)

In Arduino, you can send a character like this:

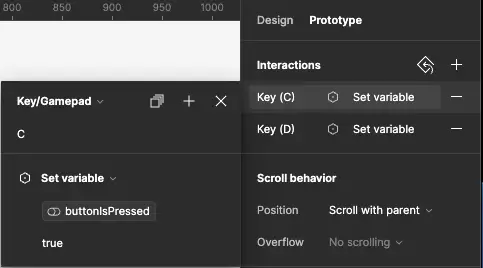

    Serial.print('c');

To get data into Figma, Figproxy sends each character it receives to the prototype as a keypress event, so a prototype interaction triggered by that key will fire.
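Because each character arrives in Figma as a keypress, a continuous input such as a knob needs to be reduced to a small set of keys. The sketch below shows one hypothetical way to do that; it is not taken from the knob example in the repo. The 0 to 1023 range matches `analogRead()` on a 10-bit Arduino ADC, while `knobToKey`, `shouldSend`, the five positions, and the letters 'a' through 'e' are assumptions made for illustration.

```cpp
// Hypothetical mapping from a 10-bit analog reading (0..1023) to one of
// five key characters, 'a' through 'e'.
char knobToKey(int reading) {
    const int kPositions = 5;
    if (reading < 0) reading = 0;          // clamp out-of-range readings
    if (reading > 1023) reading = 1023;
    int position = reading * kPositions / 1024;   // 0..4
    return static_cast<char>('a' + position);
}

// Returns true only when the key differs from the last one sent, so the
// same keypress is not repeated on every pass through loop().
bool shouldSend(char key, char& lastKey) {
    if (key == lastKey) return false;
    lastKey = key;
    return true;
}
```

In a sketch, this could be used as `char key = knobToKey(analogRead(A0)); if (shouldSend(key, lastKey)) Serial.print(key);`, with each of the five keys wired to a different frame in the Figma prototype.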

Why I Made It

At IDEO I work on a lot of physical product designs that incorporate displays. I commonly work with UX designers whose tool of choice for rapid iteration of experiences is Figma.

I was recently working on a project that was a kind of kiosk – a touch screen that has external hardware elements. We already had a phenomenal UI prototype built in Figma, and I had built some of the digital hardware elements out with Arduino to deliver an interactive model we refer to as an “experience prototype.”

I wanted to link the external LED animations to the UI prototype, so that some LED behaviors could be choreographed to the moment in the user flow. I was floored when I realized that this was not possible. ProtoPie did not import the Figma screens properly; using it would have meant days of rework and making the UI team switch software. I also tried the fantastic software Blokdots (co-created by ex-IDEO’er Olivier Brückner), but it only allows hardware to talk to Figma’s “design view,” whereas what I needed was communication with the “prototype view.”

I’ve got a bit of a soft spot for making prototyping tools for hardware, so honestly I was a bit excited that no one had figured this one out yet. After digging into the Figma API, I realized why Blokdots hadn’t done it yet: Figma doesn’t support any communication to or from the prototype view in its API. I had to figure out a workaround. After looking at what prototypes could do, I had the idea to make a proxy browser, and Figproxy started taking shape.

Try It Out

Detailed instructions for installation, examples and use can be found in the GitHub repository here. The Figma files for the examples are here. I hope you like it!